Clustering Algorithms in Machine Learning

Explore the most popular clustering algorithms in machine learning, their types, applications, and how to choose the right one for your data. Learn key concepts to master unsupervised learning and boost your AI skills.

Clustering is one of the most powerful unsupervised learning techniques in machine learning, used to uncover hidden patterns and group similar data points without predefined labels. It plays a crucial role in various domains such as customer segmentation, image recognition, anomaly detection, and data compression. Understanding clustering algorithms in machine learning helps data professionals extract meaningful insights and make data-driven decisions more effectively.

In this article, we’ll explore what clustering is, the types of clustering methods, popular clustering algorithms, how to choose the right one for your project, and the top tools used for implementing clustering in real-world applications.

What is Clustering in Machine Learning?

Clustering in machine learning is the process of organizing data points into groups called clusters such that items within the same cluster are more similar to each other than to those in other clusters. It’s an unsupervised learning technique, meaning the algorithm learns patterns directly from the data without any labeled outcomes.

For example, a business might use clustering to segment customers based on purchasing behavior, or a biologist might group species based on genetic similarity. The main goal is to identify natural structures within data to enhance understanding and decision-making.

Key characteristics of clustering:

- No labeled data is required.

- Groups (clusters) are formed automatically based on similarities or distances.

- Useful for pattern discovery, data summarization, and anomaly detection.

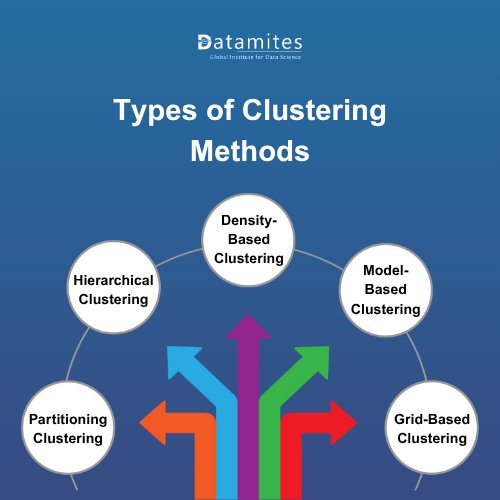

Types of Clustering Methods

Different types of clustering methods are designed to handle specific kinds of data structures and applications. Here are the most commonly used clustering approaches in machine learning:

1. Partitioning Clustering

This method divides the dataset into distinct, non-overlapping clusters. Each data point belongs to exactly one cluster.

Example: K-Means Algorithm.

2. Hierarchical Clustering

Hierarchical clustering builds a tree-like structure (dendrogram) that represents nested clusters. It can be agglomerative (bottom-up) or divisive (top-down).

Example: Agglomerative Hierarchical Clustering.

3. Density-Based Clustering

Clusters are formed based on areas of high data density separated by low-density regions. It’s ideal for identifying irregularly shaped clusters.

Example: DBSCAN (Density-Based Spatial Clustering of Applications with Noise).

4. Model-Based Clustering

This approach assumes that data is generated from a mixture of underlying probability distributions and tries to estimate their parameters.

Example: Gaussian Mixture Models (GMM).

5. Grid-Based Clustering

In this method, the data space is divided into a finite number of cells to form a grid structure, and clustering is performed on the grid instead of raw data points.

Example: STING (Statistical Information Grid).

Popular Clustering Algorithms in Machine Learning

Now that you know the basic types, let’s look at the most widely used clustering algorithms in machine learning and how they work.

1. K-Means Clustering

K-Means is one of the simplest and most popular clustering algorithms. It works by partitioning data into K clusters based on feature similarity.

How it works:

- Choose the number of clusters (K).

- Randomly select K centroids.

- Assign each data point to the nearest centroid.

- Recalculate the centroids based on the mean of points in each cluster.

- Repeat until the centroids stop moving significantly.
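The steps above can be sketched in a few lines of NumPy. This is a minimal illustration rather than a production implementation: the function name `kmeans`, the tolerance `tol`, the iteration cap `n_iters`, and the synthetic two-blob data are all assumptions made for the example.

```python
import numpy as np


def kmeans(X, k, n_iters=100, tol=1e-6, seed=0):
    """Plain K-Means following the steps listed above (illustrative sketch)."""
    rng = np.random.default_rng(seed)
    # Randomly select K distinct data points as the initial centroids.
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iters):
        # Assign each point to its nearest centroid (Euclidean distance).
        distances = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = distances.argmin(axis=1)
        # Recalculate each centroid as the mean of its assigned points;
        # an empty cluster keeps its previous centroid.
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        # Stop once the centroids have effectively stopped moving.
        shift = np.linalg.norm(new_centroids - centroids)
        centroids = new_centroids
        if shift < tol:
            break
    return labels, centroids


# Two well-separated synthetic blobs (made-up data for illustration).
rng = np.random.default_rng(42)
X = np.vstack([
    rng.normal(loc=[0.0, 0.0], scale=0.5, size=(50, 2)),
    rng.normal(loc=[5.0, 5.0], scale=0.5, size=(50, 2)),
])
labels, centroids = kmeans(X, k=2)
print(centroids)
```

Because the starting centroids are random, different seeds can lead to different results on harder data, which is why library implementations usually run several initializations and keep the best one.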

Advantages:

- Fast and efficient on large datasets.

- Easy to implement and interpret.

Limitations:

- Requires specifying the number of clusters in advance.

- Struggles with irregularly shaped or overlapping clusters.

2. Hierarchical Clustering

Hierarchical clustering builds nested clusters using a bottom-up (agglomerative) or top-down (divisive) approach.

Agglomerative clustering starts by treating each point as an individual cluster and merges them step by step based on similarity until all points are grouped into one big cluster.

Divisive clustering, on the other hand, starts with a single large cluster and splits it recursively into smaller groups.
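As a rough sketch of the agglomerative variant, the example below uses SciPy's `scipy.cluster.hierarchy` module. SciPy is not named in this article, and the Ward linkage, the synthetic data, and the choice to cut the tree into two clusters are assumptions made purely for illustration.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage

# Two small synthetic groups of points (made-up data for illustration).
rng = np.random.default_rng(0)
X = np.vstack([
    rng.normal(loc=[0.0, 0.0], scale=0.3, size=(10, 2)),
    rng.normal(loc=[4.0, 4.0], scale=0.3, size=(10, 2)),
])

# Agglomerative (bottom-up) merging; each row of Z records one merge step.
Z = linkage(X, method="ward")

# The tree is built without fixing a cluster count; here it is cut
# afterwards into at most 2 flat clusters.
labels = fcluster(Z, t=2, criterion="maxclust")
print(Z.shape)
print(labels)
```

The same linkage matrix `Z` can be passed to `scipy.cluster.hierarchy.dendrogram` to draw the tree.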

Advantages:

- No need to specify the number of clusters beforehand.

- The dendrogram visualization provides a clear picture of relationships between clusters.

Limitation:

- Computationally expensive for large datasets.

3. DBSCAN (Density-Based Spatial Clustering of Applications with Noise)

DBSCAN groups together closely packed points and marks isolated points as outliers.

How it works:

- It defines clusters as dense regions separated by areas of low density.

- Requires two parameters: ε (epsilon), the neighborhood radius, and MinPts, the minimum number of points that must lie within that radius for a region to count as dense.
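A minimal sketch of these two parameters in practice, using scikit-learn's `DBSCAN`, where ε is called `eps` and MinPts is called `min_samples`. The two-moons dataset and the specific values `eps=0.2` and `min_samples=5` are assumptions chosen for illustration, not recommended defaults.

```python
from sklearn.cluster import DBSCAN
from sklearn.datasets import make_moons

# Two interleaving half-circles: a shape centroid-based methods handle poorly.
X, _ = make_moons(n_samples=300, noise=0.05, random_state=0)

# eps is the neighborhood radius, min_samples the density threshold.
db = DBSCAN(eps=0.2, min_samples=5).fit(X)
labels = db.labels_

# Points labeled -1 are treated as noise rather than forced into a cluster.
n_clusters = len(set(labels)) - (1 if -1 in labels else 0)
n_noise = int((labels == -1).sum())
print(n_clusters, n_noise)
```

Rerunning with a much smaller or larger `eps` is a quick way to see how strongly the result depends on parameter choice.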

Advantages:

- Detects clusters of arbitrary shapes.

- Handles noise and outliers well.

Limitation:

- Performance is sensitive to parameter selection.

4. Mean Shift Clustering

Mean Shift is a powerful non-parametric clustering algorithm that does not require specifying the number of clusters in advance.

It works by sliding a window over the data points and shifting its center towards the densest region until convergence.
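The sketch below shows one way to try this with scikit-learn's `MeanShift`. The window size is the `bandwidth` parameter; estimating it with `estimate_bandwidth` at `quantile=0.2`, and the synthetic three-blob data, are assumptions made for this example.

```python
from sklearn.cluster import MeanShift, estimate_bandwidth
from sklearn.datasets import make_blobs

# Three synthetic blobs (made-up data for illustration).
X, _ = make_blobs(
    n_samples=300,
    centers=[[0, 0], [6, 6], [0, 6]],
    cluster_std=0.6,
    random_state=0,
)

# The bandwidth sets the window size; here it is estimated from the data.
bandwidth = estimate_bandwidth(X, quantile=0.2, random_state=0)

# No cluster count is passed in; it falls out of the density modes found.
ms = MeanShift(bandwidth=bandwidth).fit(X)
n_found = len(ms.cluster_centers_)
print(n_found)
```

The number of clusters is not specified, but the bandwidth still has to be chosen or estimated, and it strongly influences how many modes are found.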

Advantages:

- Automatically determines the number of clusters.

- Works well for complex data distributions.

Limitation:

- Computationally expensive for large datasets.

5. Gaussian Mixture Models (GMM)

A Gaussian Mixture Model assumes that the data is composed of multiple Gaussian distributions. It uses the Expectation-Maximization (EM) algorithm to estimate parameters and assign probabilities for each point to belong to a cluster.
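To make the idea of per-point probabilities concrete, here is a short sketch using scikit-learn's `GaussianMixture`, which is fitted with EM. The two overlapping synthetic blobs and the choice of `n_components=2` are assumptions made for illustration.

```python
import numpy as np
from sklearn.mixture import GaussianMixture

# Two overlapping synthetic Gaussian blobs (made-up data for illustration).
rng = np.random.default_rng(0)
X = np.vstack([
    rng.normal(loc=[0.0, 0.0], scale=1.0, size=(200, 2)),
    rng.normal(loc=[3.0, 3.0], scale=1.0, size=(200, 2)),
])

gmm = GaussianMixture(n_components=2, random_state=0).fit(X)

# Soft assignment: one probability per component for every point.
probs = gmm.predict_proba(X)

# Hard assignment: the component with the highest probability.
hard = gmm.predict(X)
print(probs[:3].round(3))
```

Points near the boundary between the two blobs receive probabilities close to 0.5 for each component, which is information a hard assignment discards.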

Advantages:

- Provides soft clustering (a data point can belong to multiple clusters with certain probabilities).

- Suitable for complex datasets with overlapping clusters.

Limitation:

- Requires specifying the number of clusters.

According to Statista, the market for artificial intelligence grew considerably between 2023 and 2025, and this growth is expected to continue, with the market passing the trillion U.S. dollar mark in 2031.

How to Choose the Right Clustering Algorithm

Choosing the best clustering algorithm depends on your data characteristics, project goals, and computational resources. Here’s a quick guide:

| Criterion | Recommended Algorithm |
| --- | --- |
| Large datasets with well-separated clusters | K-Means |
| Non-linear or arbitrary-shaped clusters | DBSCAN or Mean-Shift |
| Hierarchical data structure | Hierarchical Clustering |
| Probabilistic modeling required | Gaussian Mixture Model |
| High-dimensional data | K-Means with PCA preprocessing |
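For the high-dimensional case in the last row, one possible arrangement is a scikit-learn pipeline that scales the features, reduces them with PCA, and then runs K-Means. The 50-feature synthetic dataset, the scaling step, the 10 retained components, and the 4 clusters are all assumptions chosen for this sketch.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.decomposition import PCA
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic 50-dimensional data with 4 groups (made-up for illustration).
X, _ = make_blobs(n_samples=500, n_features=50, centers=4, random_state=0)

# Scale, project onto 10 principal components, then cluster.
pipeline = make_pipeline(
    StandardScaler(),
    PCA(n_components=10, random_state=0),
    KMeans(n_clusters=4, n_init=10, random_state=0),
)
labels = pipeline.fit_predict(X)
print(labels[:10])
```

Keeping the steps in one pipeline ensures that new data passes through the same scaling and projection before being assigned to a cluster.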

Tools and Libraries for Implementing Clustering

Several powerful tools and libraries simplify clustering implementation in machine learning:

- Scikit-learn (Python): Offers built-in functions for K-Means, DBSCAN, Agglomerative, and GMM clustering.

- TensorFlow and PyTorch: Used for deep clustering and neural-based methods.

- R (stats and cluster packages): Ideal for statistical and exploratory clustering.

- MATLAB: Provides robust built-in clustering functions and visualization tools.

- RapidMiner & Weka: No-code/low-code platforms for quick clustering experiments.

The global natural language processing market size accounted for USD 30.68 billion in 2024 and is predicted to increase from USD 42.47 billion in 2025 to approximately USD 791.16 billion by 2034, expanding at a CAGR of 38.40% from 2025 to 2034. (Precedence Research)

Clustering algorithms in machine learning are essential for discovering hidden structures in data and deriving actionable insights. From K-Means and DBSCAN to Hierarchical and GMM approaches, each algorithm offers unique strengths tailored to specific data scenarios. By understanding their differences, advantages, and ideal use cases, you can make informed decisions to improve model performance and data understanding.

Machine learning has emerged as one of the most in-demand skills across industries. From powering recommendation engines and enabling autonomous vehicles to driving intelligent business insights, machine learning is transforming the way the world operates. An online machine learning course provides a strong foundation in understanding algorithms, data processing, model building, and real-world applications. It is designed for both beginners and professionals who want to upskill and stay competitive in the fast-evolving field of artificial intelligence.

For anyone looking to build a strong foundation in artificial intelligence, the DataMites Online Artificial Intelligence courses are an excellent choice. These programs cover core AI concepts, including machine learning, deep learning, neural networks, natural language processing, and computer vision. Upon completion, participants receive globally recognized certifications from IABAC and NASSCOM FutureSkills, significantly boosting their career prospects and providing a competitive edge in the rapidly growing AI job market.