Data Preprocessing in Machine Learning

Discover the importance of data preprocessing in machine learning. Learn key steps, techniques, and best practices to clean, transform, and prepare raw data for accurate and efficient AI models.

Machine learning models rely heavily on high-quality data to deliver accurate results. However, raw datasets are often incomplete, inconsistent, or noisy, making them unsuitable for direct use in training algorithms. This is where data preprocessing in machine learning comes into play. It is a crucial step in the machine learning pipeline that involves cleaning, transforming, and preparing data so that algorithms can effectively extract patterns and insights. In practice, the success of a machine learning project often depends as much on the quality of data preprocessing as on the choice of algorithm, and sometimes more.

Why Data Preprocessing is Important

Raw data often contains missing values, duplicates, outliers, and inconsistencies. Using such data directly can lead to poor predictions and unreliable outcomes. Preprocessing solves this by ensuring:

- Improved Accuracy: Cleaning and transforming data removes noise and irrelevant features, helping algorithms capture genuine patterns. For example, imputing missing values fills gaps that many algorithms cannot process at all and that could otherwise distort what the model learns.

- Consistency: Data from multiple sources may differ in format, scale, or type. Preprocessing standardizes variables, ensuring uniformity and making it easier for algorithms to treat features fairly.

- Efficiency: Raw data with high dimensionality or redundancy slows down training. Techniques like feature selection, scaling, and dimensionality reduction make training faster and more resource-efficient.

- Robustness: Outliers and noise can cause models to overfit, and class imbalance can bias them toward the majority class. Preprocessing methods such as outlier handling and class balancing improve model generalization and reliability on unseen data.

In essence, data preprocessing forms the backbone of effective machine learning by converting raw datasets into structured, meaningful inputs. The same principle holds beyond machine learning: across industries worldwide, over 1.7 million industrial robots are already in operation, showing how well-prepared systems can deliver efficiency and accuracy at scale.

Steps in Data Preprocessing in Machine Learning

Data preprocessing is not a single action but a systematic, multi-step process designed to prepare raw data for machine learning models. The exact steps may vary depending on the dataset and the problem at hand, but the following stages are commonly involved:

1. Data Cleaning

Real-world datasets often contain inconsistencies, errors, or incomplete records. Data cleaning ensures that these issues are addressed so the model can learn from reliable input. Key tasks include:

- Handling missing values: Missing entries can be filled using imputation techniques such as mean, median, or mode substitution, or more advanced methods like k-NN imputation. Rows or columns with excessive missing data may instead be removed.

- Removing duplicates: Duplicate records can bias the model by giving certain data points more weight than they deserve. Identifying and removing duplicates improves dataset quality.

- Correcting inconsistencies: Different formats (e.g., “Male/Female” vs. “M/F”) or units (e.g., dollars vs. euros) must be standardized.

- Addressing outliers: Outliers can distort training, especially in algorithms sensitive to extreme values (such as linear regression, which minimizes squared error). Techniques such as z-scores, the interquartile range (IQR), or domain-based rules help in detecting and treating outliers.
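The cleaning tasks above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not a production routine: records are dictionaries with hypothetical columns (`id`, `gender`, `income`), the label mapping is hand-written for the example, and outliers are flagged with the common |z| > 3 rule of thumb.

```python
import statistics

# Hypothetical mapping that collapses inconsistent spellings into one canonical label.
GENDER_MAP = {"m": "Male", "male": "Male", "f": "Female", "female": "Female"}


def standardize_gender(value):
    """Map variants such as 'M', ' male ', or 'F' to a canonical label; leave unknown values unchanged."""
    return GENDER_MAP.get(value.strip().lower(), value)


def drop_duplicates(records):
    """Keep the first occurrence of each record, comparing every field."""
    seen = set()
    unique = []
    for rec in records:
        key = tuple(sorted(rec.items()))  # hashable fingerprint of the whole record
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


def zscore_outliers(values, threshold=3.0):
    """Return the indices of values whose absolute z-score exceeds `threshold`."""
    mean = statistics.mean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return []  # constant column: nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mean) / std > threshold]


raw = [
    {"id": 1, "gender": "M", "income": 52000},
    {"id": 1, "gender": "Male", "income": 52000},  # same person, different spelling
    {"id": 2, "gender": "f", "income": 48000},
]
# Standardize labels first so that spelling variants collapse into true duplicates.
cleaned = drop_duplicates([{**r, "gender": standardize_gender(r["gender"])} for r in raw])
print(len(cleaned))  # 2

readings = [10] * 19 + [100]
print(zscore_outliers(readings))  # [19]
```

The order of operations matters: if labels were not standardized first, the "M" and "Male" rows would look different and both would survive deduplication. Note also that with very small samples (ten values or fewer) no point can reach |z| > 3, so IQR rules or domain checks are often more practical there.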

2. Data Integration

When datasets are collected from multiple sources (databases, APIs, spreadsheets, or sensors), they need to be combined into a coherent, unified dataset. This step involves:

- Merging datasets: Combining information from different sources while ensuring no data loss.

- Schema consistency: Aligning column names, formats, and data types.

- Resolving conflicts: Handling discrepancies such as mismatched identifiers or overlapping records.

Effective integration prevents data silos and ensures that all relevant information is available for model training.
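A minimal sketch of these integration steps in plain Python follows. The column names (`customer_id`, `CustomerID`, `plan`, `name`) and the conflict rule (the first matching right-hand record wins, and existing left-hand values are never overwritten) are illustrative assumptions. In pandas, `DataFrame.merge` with `how="left"` plays the role of `left_join` here.

```python
def align_schema(records, rename):
    """Rename columns so that every source uses the same names (schema consistency)."""
    return [{rename.get(k, k): v for k, v in rec.items()} for rec in records]


def normalize_key(value):
    """Resolve trivially mismatched identifiers such as 'c01' vs 'C01 '."""
    return str(value).strip().upper()


def left_join(left, right, key):
    """Attach fields from `right` to every `left` record that shares the same key.

    Left records without a match are kept, with right-hand fields set to None,
    so no left-hand rows are lost. If `right` holds overlapping records for one
    key, the first one wins; existing left-hand values are never overwritten.
    """
    index = {}
    for rec in right:
        index.setdefault(normalize_key(rec[key]), rec)
    right_cols = sorted({c for rec in right for c in rec if c != key})
    merged = []
    for rec in left:
        match = index.get(normalize_key(rec[key]), {})
        row = dict(rec)
        for col in right_cols:
            row.setdefault(col, match.get(col))
        merged.append(row)
    return merged


crm = [{"customer_id": "c01", "name": "Asha"}, {"customer_id": "C02", "name": "Ravi"}]
billing = align_schema([{"CustomerID": "C01 ", "plan": "Pro"}], {"CustomerID": "customer_id"})
merged = left_join(crm, billing, "customer_id")
print(merged)
```

Real integrations usually need richer conflict rules (for example, preferring the most recent record), but the three steps of aligning schemas, normalizing keys, and joining without dropping rows stay the same.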

3. Data Transformation

Once the data is cleaned and integrated, it often needs to be reshaped into a format suitable for algorithms. This includes:

- Normalization/Standardization: Bringing features to a common scale. Normalization (0–1 scaling) is common for neural networks, while standardization (z-score scaling) is often used in regression or clustering.

- Encoding categorical variables: Most machine learning models require numerical input, so categorical features must be converted. Techniques include label encoding, one-hot encoding, and target encoding.

- Feature scaling: Essential for algorithms like KNN and SVM, which rely on distances or magnitudes, and helpful for gradient descent-based models, which converge faster when features share a similar scale.
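The two most common encodings can be sketched without any libraries. The example categories and the size ordering are made up; scikit-learn offers `OneHotEncoder` and `OrdinalEncoder` for the same purpose. In a real project the category list should be learned from the training data only, with a plan for categories that first appear at prediction time.

```python
def one_hot_encode(values):
    """Return (categories, rows): one 0/1 column per distinct category, in sorted order."""
    categories = sorted(set(values))
    rows = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, rows


def ordinal_encode(values, order):
    """Map ordered categories to integers following an explicit order (label/ordinal encoding)."""
    rank = {cat: i for i, cat in enumerate(order)}
    return [rank[v] for v in values]


cities = ["Pune", "Delhi", "Pune", "Chennai"]
cats, encoded = one_hot_encode(cities)
print(cats)     # ['Chennai', 'Delhi', 'Pune']
print(encoded)  # [[0, 0, 1], [0, 1, 0], [0, 0, 1], [1, 0, 0]]

sizes = ["small", "large", "medium"]
print(ordinal_encode(sizes, ["small", "medium", "large"]))  # [0, 2, 1]
```

One-hot encoding avoids implying any order between cities, whereas ordinal encoding deliberately preserves the order small < medium < large, which is why it suits ordinal data only.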

4. Data Reduction

Large datasets with high dimensionality can slow down computation and increase the risk of overfitting. Data reduction tackles this problem by:

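- Dimensionality reduction techniques: Methods like PCA (Principal Component Analysis) and LDA (Linear Discriminant Analysis) reduce the number of features. PCA keeps the directions that preserve the most variance, while LDA, a supervised method, keeps the directions that best separate the classes.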

- Feature selection: Identifying and retaining only the most relevant features using statistical tests, correlation analysis, or feature importance scores.

- Removing redundancy: Eliminating highly correlated variables or unnecessary attributes to speed up computation.
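Removing redundancy through correlation analysis can be sketched as follows. The 0.95 threshold, the greedy keep-first order, and the feature names are illustrative assumptions. Pearson correlation only captures linear relationships, so this works as a quick filter rather than a complete feature-selection method.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length numeric lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0  # constant column: correlation is undefined, treated as 0 in this sketch
    return cov / (sx * sy)


def drop_correlated(features, threshold=0.95):
    """Keep features in their given order, skipping any whose |r| with a kept feature exceeds `threshold`."""
    kept = []
    for name, column in features.items():
        if all(abs(pearson(column, features[k])) <= threshold for k in kept):
            kept.append(name)
    return kept


data = {
    "height_cm": [150, 160, 170, 180, 190],
    "height_in": [59.1, 63.0, 66.9, 70.9, 74.8],  # the same information in another unit
    "age": [30, 25, 41, 35, 28],
}
print(drop_correlated(data))  # ['height_cm', 'age']
```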

5. Data Splitting

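Finally, the dataset must be divided into subsets to train, validate, and test the machine learning model. Proper splitting supports unbiased evaluation. Although splitting is listed last here, preprocessing steps that learn from the data (imputers, scalers, encoders) should be fitted on the training set only and then applied to the validation and test sets; otherwise, information leaks from the evaluation data into training.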

- Training set: Used to teach the model the underlying patterns.

- Validation set: Helps fine-tune hyperparameters and detect overfitting before the final evaluation.

- Testing set: Provides an independent evaluation of model performance.

- Stratified sampling: Ensures splits maintain class balance, especially for imbalanced datasets.
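A stratified three-way split can be written in a few lines. The 70/15/15 ratios and the fixed seed are assumptions chosen for the example; for two-way splits, scikit-learn's `train_test_split` offers the same behaviour through its `stratify` argument.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_ratio=0.7, val_ratio=0.15, seed=42):
    """Return (train, val, test) index lists that keep roughly the original class proportions.

    Each class is shuffled and split separately; whatever remains after the
    train and validation shares goes to the test set.
    """
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    rng = random.Random(seed)  # fixed seed for a reproducible split
    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = round(len(indices) * train_ratio)
        n_val = round(len(indices) * val_ratio)
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]
    return train, val, test


labels = [0] * 80 + [1] * 20  # imbalanced: 20% positive class
train, val, test = stratified_split(labels)
for name, idx in [("train", train), ("val", val), ("test", test)]:
    share = sum(labels[i] for i in idx) / len(idx)
    print(name, len(idx), f"positive share = {share:.2f}")
```

Because each class is split on its own, every subset keeps the 20% positive share, which a purely random split of such a small dataset would not guarantee.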

Data Preprocessing Examples and Techniques

To make raw data usable for machine learning, several preprocessing techniques are applied:

- Handling Missing Data: Missing values can bias predictions. For example, healthcare records may lack age or blood pressure. Common fixes include mean/median imputation for numbers, mode imputation for categories, regression-based predictions, or dropping unreliable rows/columns.

- Encoding Categorical Variables: Since algorithms need numbers, categorical values must be converted. For instance, “Gender” can be encoded as 0/1, while “City” can use one-hot encoding. Methods include label encoding (ordinal data), one-hot encoding (non-ordinal), and target encoding (high-cardinality features).

- Feature Scaling: Uneven scales can skew results, e.g., height in cm vs. weight in kg. Techniques include normalization (0–1 range) and standardization (mean 0, std dev 1). Scaling is critical for algorithms like KNN, SVM, and clustering.

- Dimensionality Reduction: High-dimensional data increases complexity and the risk of overfitting. For example, image datasets with thousands of pixels can be reduced using PCA (Principal Component Analysis) while retaining most of the variance. Other methods include LDA and t-SNE, although t-SNE is mainly used to visualize high-dimensional data rather than to produce model inputs.

- Outlier Detection and Treatment: Outliers, such as incorrect salary entries, can distort model training and evaluation. Detection methods include z-scores and IQR, while fixes involve capping extreme values, transformations, or using domain expertise.
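The IQR rule can be sketched with Python's built-in `statistics` module. The 1.5 multiplier is the usual Tukey convention, and the salary figures (in thousands) are invented. `statistics.quantiles` uses its "exclusive" method by default, so quartiles may differ slightly from other tools. Capping is only one option: an entry like 900 may deserve a domain check before it is altered.

```python
import statistics


def iqr_bounds(values, k=1.5):
    """Return the (lower, upper) fences Q1 - k*IQR and Q3 + k*IQR."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def find_outliers(values, k=1.5):
    """Return the indices of values that fall outside the IQR fences."""
    lo, hi = iqr_bounds(values, k)
    return [i for i, v in enumerate(values) if v < lo or v > hi]


def cap_outliers(values, k=1.5):
    """Clip values outside the fences to the nearest fence (a simple form of winsorizing)."""
    lo, hi = iqr_bounds(values, k)
    return [min(max(v, lo), hi) for v in values]


salaries = [40, 42, 45, 47, 50, 52, 55, 900]  # 900 looks like a data-entry error
print(iqr_bounds(salaries))     # (25.5, 71.5)
print(find_outliers(salaries))  # [7]
print(cap_outliers(salaries))   # [40, 42, 45, 47, 50, 52, 55, 71.5]
```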

The generative AI market is experiencing a seismic growth trajectory, projected to surge from USD 71.36 billion in 2025 to USD 890.59 billion by 2032, at a CAGR of 43.4% during the forecast period. (Markets and Markets)


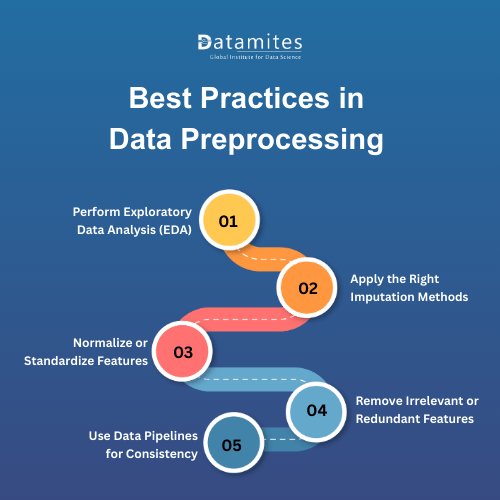

Best Practices in Data Preprocessing

Effective data preprocessing is essential for building accurate and scalable machine learning models. Following best practices ensures that data is clean, consistent, and ready for training.

1. Perform Exploratory Data Analysis (EDA)

Start with EDA to understand data distribution, detect missing values, spot outliers, and identify correlations. Tools like histograms, box plots, and heatmaps help decide on scaling or outlier handling.
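Even before plotting, a numeric summary reveals missing values and suspicious ranges. The sketch below uses only the standard library, and the column names and values are made up; with pandas, `DataFrame.isna().sum()` and `DataFrame.describe()` give similar summaries.

```python
import statistics


def summarize(columns):
    """Return {column: {missing, mean, median, min, max}} for numeric columns where None marks a gap."""
    report = {}
    for name, values in columns.items():
        present = [v for v in values if v is not None]
        report[name] = {
            "missing": len(values) - len(present),
            "mean": statistics.mean(present) if present else None,
            "median": statistics.median(present) if present else None,
            "min": min(present, default=None),
            "max": max(present, default=None),
        }
    return report


patients = {"age": [25, None, 41, 35, 29], "blood_pressure": [120, 135, None, None, 118]}
report = summarize(patients)
for column, stats in report.items():
    print(column, stats)
```

A large gap between mean and median, or a minimum or maximum far from the rest, is a cue to inspect that column with a histogram or box plot.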

2. Apply the Right Imputation Methods

Handle missing data carefully.

- Numerical features: Mean, median, regression, or k-NN imputation.

- Categorical features: Mode, predictive imputation, or adding an “Unknown” category.
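These imputation choices can be sketched as two small functions, where None marks a missing entry and the example values are invented. In practice the fill value should be computed on the training split and reused for new data; scikit-learn's `SimpleImputer` supports `"median"`, `"most_frequent"`, and `"constant"` strategies for this.

```python
import statistics


def impute_numeric(values, strategy="median"):
    """Replace None with the median (default) or mean of the observed values."""
    observed = [v for v in values if v is not None]
    if strategy == "median":
        fill = statistics.median(observed)
    elif strategy == "mean":
        fill = statistics.mean(observed)
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return [fill if v is None else v for v in values]


def impute_categorical(values, strategy="mode"):
    """Replace None with the most frequent category, or with an explicit 'Unknown' label."""
    if strategy == "mode":
        fill = statistics.mode([v for v in values if v is not None])
    elif strategy == "unknown":
        fill = "Unknown"
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return [fill if v is None else v for v in values]


income = [30000, None, 45000, 1_000_000]
print(impute_numeric(income))          # [30000, 45000, 45000, 1000000]
print(impute_numeric(income, "mean"))  # the extreme income pulls the fill value far upward

colors = ["Red", None, "Blue", "Red"]
print(impute_categorical(colors))             # ['Red', 'Red', 'Blue', 'Red']
print(impute_categorical(colors, "unknown"))  # ['Red', 'Unknown', 'Blue', 'Red']
```

The income example shows why the median is often preferred for skewed numeric features: a single extreme value barely moves it, while it drags the mean far from typical values.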

3. Normalize or Standardize Features

Scaling keeps features with large numeric ranges from dominating those with small ranges.

- Normalization (0–1): Useful for neural networks and distance-based algorithms.

- Standardization (Z-score): Effective for regression and classification models.
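Both scaling formulas fit in a few lines. The height values are invented. The sketch uses the population standard deviation, which is also what scikit-learn's `StandardScaler` computes, and maps constant features to 0 to avoid dividing by zero (a choice made for this sketch).

```python
import statistics


def min_max_normalize(values):
    """Rescale to the [0, 1] range: (x - min) / (max - min)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant feature: no spread to scale
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Z-score scaling: (x - mean) / std, giving mean 0 and standard deviation 1."""
    mu = statistics.mean(values)
    sigma = statistics.pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


heights_cm = [150, 160, 170, 180, 190]
print(min_max_normalize(heights_cm))  # [0.0, 0.25, 0.5, 0.75, 1.0]
print(standardize(heights_cm))        # values centered on 0 with unit spread
```

Min-max scaling is sensitive to extreme values because a single outlier sets the range, which is one reason to handle outliers before scaling.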

4. Remove Irrelevant or Redundant Features

Extra features may cause overfitting or slow down training. Use feature selection methods like correlation analysis, recursive feature elimination (RFE), or tree-based importance to retain only valuable variables.

5. Use Data Pipelines for Consistency

Automating preprocessing with pipelines (e.g., scikit-learn, TensorFlow) ensures that training and test data are transformed consistently, using parameters learned from the training data only, which improves reproducibility and deployment readiness.
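The core idea behind preprocessing pipelines is that every step learns its parameters from training data in `fit()` and reuses them unchanged in `transform()`. The sketch below mimics that fit/transform pattern in plain Python; the class names are hypothetical, and scikit-learn's `Pipeline` combined with `SimpleImputer` and `StandardScaler` provides a production-ready version of the same idea.

```python
import statistics


class MedianImputer:
    """Hypothetical step: learns the training median, then fills None with it."""

    def fit(self, values):
        self.median_ = statistics.median([v for v in values if v is not None])
        return self

    def transform(self, values):
        return [self.median_ if v is None else v for v in values]


class Standardizer:
    """Hypothetical step: learns the training mean and std, then applies z-score scaling."""

    def fit(self, values):
        self.mean_ = statistics.mean(values)
        self.std_ = statistics.pstdev(values) or 1.0  # avoid division by zero for constant data
        return self

    def transform(self, values):
        return [(v - self.mean_) / self.std_ for v in values]


class SimplePipeline:
    """Chains steps: fit() fits each step on the output of the previous one."""

    def __init__(self, steps):
        self.steps = steps

    def fit(self, values):
        for step in self.steps:
            values = step.fit(values).transform(values)
        return self

    def transform(self, values):
        for step in self.steps:
            values = step.transform(values)
        return values


train_data = [10.0, None, 14.0, 12.0]
test_data = [None, 16.0]
pipe = SimplePipeline([MedianImputer(), Standardizer()]).fit(train_data)
print(pipe.transform(test_data))
```

Here the missing test value is filled with the training median (12.0), and both test values are scaled with the training mean and standard deviation. Computing these statistics on the test set instead would leak information into the evaluation.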

Data preprocessing in machine learning is one of the most vital stages of building successful AI and ML models. It ensures that raw, messy datasets are transformed into structured, clean, and meaningful input suitable for algorithms. By applying techniques such as data cleaning, transformation, reduction, and splitting, data scientists can significantly improve model accuracy and efficiency. While challenges exist, following best practices helps produce robust and reliable outcomes.


Artificial Intelligence is reshaping industries such as IT, healthcare, finance, education, transportation, and the startup ecosystem in Hyderabad. As AI enhances efficiency, enables smarter decision-making, and fuels innovation, the city is rapidly positioning itself as one of India’s prominent AI hubs. For students and working professionals, enrolling in an Artificial Intelligence course in Hyderabad opens doors to exciting career opportunities in AI, machine learning, and data science.

DataMites is a leading training institute for Artificial Intelligence courses in Bangalore, along with programs in Machine Learning, Data Science, and other in-demand technologies. Learners gain access to globally recognized certifications accredited by IABAC and NASSCOM FutureSkills, supported by comprehensive career services that include resume building, mock interviews, and strong industry connections. With training centers in Kudlu Gate, BTM Layout, and Marathahalli in Bangalore, DataMites provides both online and classroom learning options to suit diverse learning needs.