Python Libraries for Machine Learning

Discover the most essential Python libraries for machine learning, including NumPy, Pandas, Scikit-learn, TensorFlow, PyTorch, and more. Learn how they power ML pipelines and boost model performance.

Machine learning has become one of the most transformative technologies of the 21st century, driving innovations in healthcare, finance, retail, autonomous systems, and beyond. At the heart of this revolution lies Python, the most popular programming language for data science and machine learning. Its simplicity, extensive community support, and powerful libraries make it the first choice for both beginners and professionals. In this article, we will explore the essential Python libraries for machine learning, understand how they work together, and help you choose the right one for your project.

Why Python for Machine Learning?

Python’s dominance in the machine learning ecosystem is no accident. Its popularity comes from a blend of readability, flexibility, and a vast library ecosystem. Here are a few reasons why Python is the go-to language for ML:

- Ease of learning and syntax: Python code reads almost like English, making it accessible even to non-programmers.

- Rich ecosystem of libraries: From data preprocessing to deep learning, Python offers specialized libraries for every stage of machine learning.

- Strong community support: Python’s global developer and researcher community continuously contributes new tools, tutorials, and frameworks.

- Integration and scalability: Python integrates seamlessly with big data tools, cloud platforms, and other programming languages, making it scalable for real-world applications.

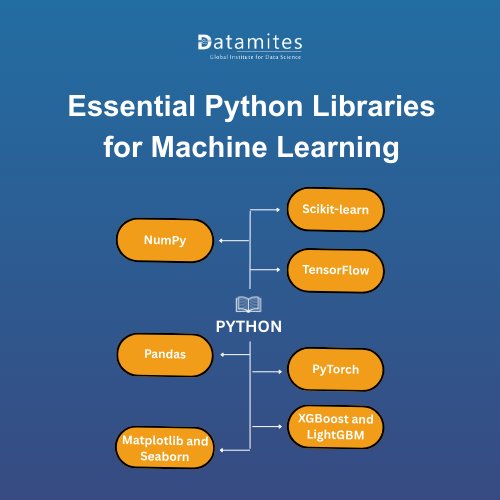

Essential Python Libraries for Machine Learning

When it comes to machine learning, certain Python libraries stand out as industry standards. Below are the most widely used ones with real-world examples:

1. NumPy

NumPy is the backbone of numerical computing in Python. It provides fast, efficient array operations, which are the foundation of most machine learning models.

Example use case: NumPy is often used in image processing tasks where pixel values are stored as arrays. For example, in facial recognition systems, images are converted into NumPy arrays to perform matrix operations efficiently.
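
As a rough illustration of the idea, the sketch below treats an image as a NumPy array and applies a few common matrix operations. The random 4x4 image is an assumption made for the example; a real system would load pixels from an image file.

```python
import numpy as np

# Hypothetical 4x4 RGB image with random 8-bit pixel values
# (assumption: real code would read these from an image file).
rng = np.random.default_rng(seed=0)
image = rng.integers(0, 256, size=(4, 4, 3), dtype=np.uint8)

# Scale pixel values from [0, 255] to [0.0, 1.0], a common preprocessing step.
normalized = image.astype(np.float32) / 255.0

# Convert to grayscale with a weighted sum over the colour channels
# (ITU-R BT.601 luma weights), written as a single matrix product.
weights = np.array([0.299, 0.587, 0.114], dtype=np.float32)
gray = normalized @ weights

# Flatten the image into one feature vector, the shape many models expect.
features = gray.reshape(-1)

print(image.shape, gray.shape, features.shape)
```

The whole image is processed without an explicit Python loop, which is where most of NumPy's speed advantage comes from.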

2. Pandas

Pandas simplifies data manipulation and analysis. It introduces powerful data structures such as DataFrames, making it easy to clean, explore, and prepare data for modeling.

Example use case: In the financial sector, Pandas is used to clean and analyze stock market data. Analysts can easily handle missing data, merge multiple datasets, and calculate performance indicators before feeding them into machine learning models for price prediction.
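
The three steps mentioned here (handling missing data, merging datasets, and calculating indicators) might look like the following minimal sketch. The prices, volumes, and column names are made up for illustration.

```python
import pandas as pd

dates = pd.to_datetime(["2024-01-01", "2024-01-02", "2024-01-03", "2024-01-04"])

# Hypothetical daily closing prices; None marks a missing quote.
prices = pd.DataFrame({"date": dates, "close": [100.0, None, 104.0, 102.0]})

# Hypothetical trading volumes from a second source.
volumes = pd.DataFrame({"date": dates, "volume": [1200, 1500, 900, 1100]})

# Handle missing data: carry the last known price forward.
prices["close"] = prices["close"].ffill()

# Merge the two datasets on their shared date column.
df = prices.merge(volumes, on="date", how="inner")

# Calculate simple indicators: daily return and a 2-day moving average.
df["daily_return"] = df["close"].pct_change()
df["ma_2"] = df["close"].rolling(window=2).mean()

print(df)
```

Forward filling is only one way to treat gaps; whether it is appropriate depends on the data and the model that consumes it.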

3. Matplotlib and Seaborn

Data visualization is critical in machine learning, and these libraries are perfect for it.

- Matplotlib: Provides basic plotting functions to create graphs, charts, and plots.

- Seaborn: Built on top of Matplotlib, it offers more advanced statistical plots with simpler syntax.

Example use case: Healthcare researchers use Matplotlib and Seaborn to visualize patient data, such as blood pressure or cholesterol levels, to identify trends. These visualizations make it easier to detect risk factors before applying predictive models.
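
A small sketch of how the two libraries can share one figure is shown below. The patient data is synthetic and generated only for the example, and the output file name is arbitrary.

```python
import matplotlib
matplotlib.use("Agg")  # render off-screen so the script runs without a display
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import seaborn as sns

# Synthetic patient data (an assumption for illustration, not real measurements).
rng = np.random.default_rng(seed=42)
age = rng.integers(25, 80, size=200)
patients = pd.DataFrame({
    "age": age,
    "systolic_bp": 100 + 0.5 * age + rng.normal(0, 8, size=200),
    "cholesterol": 150 + 0.8 * age + rng.normal(0, 20, size=200),
})

fig, axes = plt.subplots(1, 2, figsize=(10, 4))

# Matplotlib: a plain scatter plot of blood pressure against age.
axes[0].scatter(patients["age"], patients["systolic_bp"], s=10)
axes[0].set_xlabel("Age (years)")
axes[0].set_ylabel("Systolic blood pressure (mmHg)")
axes[0].set_title("Matplotlib scatter")

# Seaborn: a scatter plot with a fitted regression line in one call.
sns.regplot(data=patients, x="age", y="cholesterol", ax=axes[1],
            scatter_kws={"s": 10})
axes[1].set_title("Seaborn regplot")

fig.tight_layout()
fig.savefig("patient_trends.png")
```

Because Seaborn draws onto Matplotlib axes, both plots can be laid out, labeled, and saved with the same Matplotlib calls.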

4. Scikit-learn

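Scikit-learn is one of the most widely used libraries for classical machine learning, among beginners and professionals alike. It offers a consistent fit/predict interface for classification, regression, clustering, and preprocessing, along with tools for model selection and evaluation.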

Example use case: E-commerce companies use Scikit-learn to build recommendation systems. By analyzing customer purchase history, the library’s clustering and classification algorithms can predict products users are likely to buy next.
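
A minimal classification sketch in this spirit is shown below. The features are synthetic stand-ins for customer purchase history, and the random forest and its settings are illustrative choices rather than a recommended recipe.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in for customer features (e.g. purchases per category);
# the label marks whether the customer bought a given product.
X, y = make_classification(
    n_samples=1000, n_features=10, n_informative=5, random_state=0
)

# Hold out 20% of the rows to estimate performance on unseen customers.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0, stratify=y
)

model = RandomForestClassifier(n_estimators=100, random_state=0)
model.fit(X_train, y_train)

accuracy = accuracy_score(y_test, model.predict(X_test))
print(f"Held-out accuracy: {accuracy:.2f}")
```

Swapping in another estimator, such as logistic regression, usually means changing only the line that constructs the model.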

5. TensorFlow

TensorFlow is an open-source deep learning framework developed by Google. It includes the Keras API for building models and tooling for running them in production.

Example use case: TensorFlow is used for speech and language applications such as the speech recognition behind voice assistants. It enables the training of deep neural networks that can understand speech, convert it into text, and generate human-like responses.

6. PyTorch

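PyTorch, originally developed by Facebook's AI research lab (now part of Meta), is another deep learning favorite, known for its dynamic computation graphs and Pythonic API.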

Example use case: PyTorch is widely used in computer vision research. For instance, it has been used in projects like autonomous driving, where cars detect pedestrians, traffic lights, and road signs in real time. Its flexibility makes it popular among researchers creating innovative AI models.
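
To give a feel for that flexibility, here is a minimal sketch of a small convolutional classifier and its training loop. The `TinyCNN` class, the layer sizes, and the random tensors standing in for images are all assumptions made for the example, not a real vision model.

```python
import torch
from torch import nn

torch.manual_seed(0)


class TinyCNN(nn.Module):
    """A very small image classifier; layer sizes are arbitrary."""

    def __init__(self, num_classes: int = 3):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 8, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2),            # 32x32 -> 16x16
            nn.Conv2d(8, 16, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),    # 16x16 -> 1x1
        )
        self.classifier = nn.Linear(16, num_classes)

    def forward(self, x):
        x = self.features(x)
        return self.classifier(torch.flatten(x, 1))


# Random tensors standing in for a batch of 32x32 RGB images and labels.
images = torch.randn(16, 3, 32, 32)
labels = torch.randint(0, 3, (16,))

model = TinyCNN()
loss_fn = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-2)

losses = []
for step in range(20):
    optimizer.zero_grad()           # clear gradients from the previous step
    logits = model(images)          # forward pass
    loss = loss_fn(logits, labels)
    loss.backward()                 # backpropagate
    optimizer.step()                # update the weights
    losses.append(loss.item())

print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

The training loop is ordinary Python, so it can be stepped through with a debugger or modified freely, which is a large part of PyTorch's appeal in research.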

7. XGBoost and LightGBM

For gradient boosting and structured data problems, these libraries shine.

- XGBoost: Highly efficient and accurate, widely used in Kaggle competitions.

- LightGBM: Optimized for speed and scalability with large datasets.

Example use case: In the banking industry, XGBoost and LightGBM are used for fraud detection. By analyzing thousands of transaction records, these models can quickly detect unusual patterns that may indicate fraudulent activity. LightGBM, in particular, is preferred when banks deal with millions of records daily due to its speed.

How These Libraries Work Together in ML Pipelines

A machine learning project rarely relies on a single library. Instead, it follows a pipeline where multiple tools work together to turn raw data into meaningful predictions. Python's vast library ecosystem supports this process, making ML development faster and more efficient. With global AI investments projected to reach $200 billion by 2025, the demand for robust machine learning tools and infrastructure continues to grow.

Here’s how these libraries typically fit together in a real-world ML workflow:

1. Data Preparation with NumPy and Pandas

Before building any model, raw data must be structured, cleaned, and formatted.

- NumPy provides the mathematical foundation with its high-performance arrays and numerical computations.

- Pandas takes it a step further by offering powerful data structures like DataFrames that make it easy to handle missing values, merge datasets, and extract meaningful features.

2. Visualization with Matplotlib and Seaborn

Once the data is clean, the next step is to understand patterns hidden inside it.

- Matplotlib is great for simple line charts, bar graphs, or scatter plots.

- Seaborn, with its higher-level interface, helps visualize distributions, correlations, and trends with aesthetically pleasing plots.

3. Modeling with Scikit-learn, TensorFlow, and PyTorch

With the data prepared and insights in place, the pipeline moves on to model building.

- Scikit-learn is the go-to tool for classical machine learning models like linear regression, decision trees, random forests, or clustering algorithms.

- For more advanced problems like image recognition, natural language processing, or recommendation systems, deep learning comes into play with TensorFlow or PyTorch.
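
On the classical side, the hand-off from data preparation to modeling can be sketched as a single scikit-learn pipeline that takes a Pandas DataFrame directly. The customer table, its column names, and the churn rule below are invented for the example.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical customer table with numeric and categorical columns.
rng = np.random.default_rng(0)
n = 300
df = pd.DataFrame({
    "age": rng.integers(18, 70, size=n).astype(float),
    "monthly_spend": rng.gamma(2.0, 50.0, size=n),
    "plan": rng.choice(["basic", "plus", "pro"], size=n),
})
df.loc[::25, "age"] = np.nan  # inject some missing values

# Arbitrary target for the demo: low spenders are labelled as churners.
y = (df["monthly_spend"] < 80).astype(int)

# Numeric columns: fill gaps with the median, then standardize.
numeric = Pipeline([
    ("impute", SimpleImputer(strategy="median")),
    ("scale", StandardScaler()),
])

# Route each column type to its own preprocessing.
preprocess = ColumnTransformer([
    ("num", numeric, ["age", "monthly_spend"]),
    ("cat", OneHotEncoder(handle_unknown="ignore"), ["plan"]),
])

# Preprocessing and model are fitted together as one estimator.
clf = Pipeline([
    ("preprocess", preprocess),
    ("model", LogisticRegression(max_iter=1000)),
])
clf.fit(df, y)

train_accuracy = clf.score(df, y)
print(f"Training accuracy: {train_accuracy:.2f}")
```

Keeping preprocessing inside the pipeline means the same transformations are applied automatically at prediction time, which reduces the risk of training and inference drifting apart.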

4. Boosting Performance with XGBoost and LightGBM

For many real-world projects, accuracy and efficiency are critical. This is where boosting libraries step in.

- XGBoost is widely known from winning Kaggle solutions, where it performs strongly on structured (tabular) data.

- LightGBM is designed for speed and can handle massive datasets without slowing down.

5. Deployment and End-to-End Integration

After training, models need to be deployed so they can make predictions in real time. Python libraries integrate smoothly for this step too:

- TensorFlow models can be served with TensorFlow Serving or deployed on cloud platforms such as Google Cloud.

- Scikit-learn models can be easily wrapped into web applications using Flask or FastAPI.

- Pandas and NumPy continue to play a role in handling incoming data streams during inference.
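
Wrapping a scikit-learn model in a web application might look like the minimal Flask sketch below. The `/predict` route and the JSON payload shape are hypothetical choices, and the model is trained at startup only to keep the example self-contained; a real service would more likely load a saved model.

```python
import numpy as np
from flask import Flask, jsonify, request
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

# Train a small model at startup (assumption: for illustration only).
iris = load_iris()
model = LogisticRegression(max_iter=1000).fit(iris.data, iris.target)

app = Flask(__name__)


@app.route("/predict", methods=["POST"])  # hypothetical endpoint name
def predict():
    payload = request.get_json(silent=True) or {}
    features = payload.get("features")
    if not isinstance(features, list) or len(features) != 4:
        return jsonify(error="expected 'features': a list of 4 numbers"), 400
    try:
        # NumPy shapes the incoming values into the 2-D array the model expects.
        x = np.asarray(features, dtype=float).reshape(1, -1)
    except (TypeError, ValueError):
        return jsonify(error="features must be numeric"), 400
    label = int(model.predict(x)[0])
    return jsonify(prediction=str(iris.target_names[label]))


# Exercise the endpoint in-process with Flask's built-in test client.
with app.test_client() as client:
    response = client.post("/predict", json={"features": [5.1, 3.5, 1.4, 0.2]})
    print(response.status_code, response.get_json())
```

The test client avoids starting a server for a quick check; in development the app would typically be started with Flask's own `flask run` command instead.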

The global chatbot market was estimated at USD 5.4 billion in 2023 and is projected to reach USD 15.5 billion by 2028, growing at a CAGR of 23.3% over that period, according to MarketsandMarkets.


Choosing the Right Library for Your Project

With so many options, how do you pick the right library? Consider these factors:

- Nature of the task: For deep learning tasks like image recognition, use TensorFlow or PyTorch. For classical ML problems, Scikit-learn is often enough.

- Dataset size: For large structured datasets, LightGBM or XGBoost are often the more efficient choice.

- Ease of use vs. flexibility: Scikit-learn is simple, while PyTorch offers more customization.

- Community and support: TensorFlow and PyTorch have vast resources, making them great for long-term projects.


Python has revolutionized the way machine learning is practiced, thanks to its powerful and easy-to-use libraries. From NumPy and Pandas for data manipulation, to Scikit-learn for traditional ML, and TensorFlow or PyTorch for deep learning, Python provides a complete toolkit for every stage of the ML workflow. By understanding the strengths of each library and how they fit into pipelines, you can choose the right tools to maximize your project’s success.
