Recursive Neural Network in Deep Learning

Explore Recursive Neural Networks in deep learning and their role in processing hierarchical and structured data. Learn how RvNNs differ from CNNs and how they are applied in NLP, program analysis, and AI-driven solutions.

Deep learning has revolutionized the way machines understand and process complex data, especially in areas like natural language processing (NLP), image recognition, and computer vision. Among the many deep learning models, the Recursive Neural Network (RvNN) stands out for its ability to capture hierarchical structures in data. Unlike traditional feed-forward networks, recursive neural networks excel when working with structured inputs such as trees, sentences, or nested data. In this article, we will explore what a recursive neural network is, its architecture, working mechanism, applications, and how it differs from other neural networks like CNNs.

What is a Recursive Neural Network?

A Recursive Neural Network (RvNN) is a specialized type of artificial neural network that applies the same set of weights recursively over structured inputs such as trees or, more generally, directed acyclic graphs. Whereas feedforward networks expect fixed-size inputs and recurrent networks process data as a linear sequence, recursive neural networks operate directly on hierarchical structures. This makes them particularly effective for tasks where the relationships and dependencies within the data are crucial.

RvNNs are widely used in scenarios where input data naturally forms a tree-like structure, such as parsing sentences in natural language processing (NLP), modeling mathematical expressions, or analyzing program source code. By processing inputs recursively, these networks are able to capture relationships between nodes and generate meaningful representations of entire structures.

According to Grand View Research, the global computer vision market was valued at USD 19.82 billion in 2024 and is projected to reach USD 58.29 billion by 2030, growing at a CAGR of 19.8% from 2025 to 2030. This growth reflects rising demand for deep learning models in general, including structure-aware architectures such as recursive neural networks.

Architecture of Recursive Neural Networks

The architecture of a recursive neural network is built around the concept of recursively combining child nodes to form a parent node representation. Here's a breakdown of its key components:

- Input Layer: The network receives input in the form of vectors representing the nodes of a tree or graph structure.

- Hidden Layer: Each parent node computes its hidden state by combining the states of its child nodes using a shared weight matrix and a non-linear activation function.

- Output Layer: The network produces outputs for tasks such as classification, regression, or structured prediction.

- Weight Sharing: A single set of parameters is applied recursively, which helps the network generalize well across different parts of the structure.
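To make the hidden-layer step concrete, here is a minimal sketch in plain Python of a single composition, assuming the common formulation for binary trees, p = tanh(W·[c1; c2] + b), where [c1; c2] is the concatenation of the two child vectors. The function name `compose`, the dimension `d = 4`, and the random initialization are illustrative assumptions, not details taken from a specific implementation.

```python
import math
import random

def compose(c1, c2, W, b):
    """Combine two child vectors into a parent: p = tanh(W [c1; c2] + b)."""
    x = c1 + c2  # list concatenation gives the 2d-dimensional input [c1; c2]
    return [math.tanh(sum(w * xj for w, xj in zip(row, x)) + bi)
            for row, bi in zip(W, b)]

d = 4
rng = random.Random(0)
# One shared weight matrix (d x 2d) and bias (d), reused at every internal node.
W = [[rng.uniform(-0.5, 0.5) for _ in range(2 * d)] for _ in range(d)]
b = [0.0] * d

left = [0.1, 0.2, 0.3, 0.4]
right = [0.4, 0.3, 0.2, 0.1]
parent = compose(left, right, W, b)          # two children -> one parent
grandparent = compose(parent, right, W, b)   # same W and b, one level higher
print(len(parent), len(grandparent))         # 4 4
```

Because the parent vector has the same dimension as each child, it can be fed straight back into `compose` at the next level up. That is what lets one `W` and `b` serve the entire tree.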

This architecture allows recursive neural networks to learn hierarchical representations directly from structured data, giving them an edge over flat, sequence- or grid-based models when the input has an explicit tree structure.


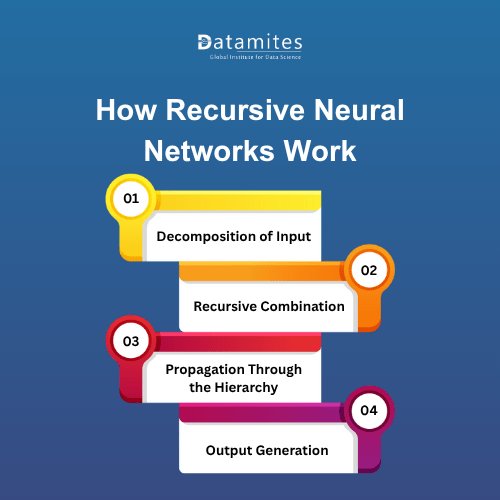

How Recursive Neural Networks Work

Recursive Neural Networks (RvNNs) are designed to process hierarchical and tree-structured data rather than simple sequences. Their working mechanism can be understood as a step-by-step recursive process where smaller units are combined into larger representations until a complete understanding of the input is formed. Let’s break it down:

1. Decomposition of Input

The first step involves breaking down the structured input into its smallest meaningful units.

- In Natural Language Processing (NLP), this means splitting a sentence into words and then organizing those words into a syntactic parse tree.

- In mathematical expressions, the decomposition follows operator precedence, resulting in a tree representation.

- In computer vision, a scene might be decomposed into smaller regions or objects.

This step ensures that the network starts with the simplest possible components before building complexity.
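In practice the parse tree usually comes from a separate syntactic parser, and the RvNN consumes it. The sketch below assumes a simplified bracketed notation (no part-of-speech labels) and turns it into nested Python tuples, where each string is a leaf (a word) and each tuple is an internal node. The `parse_tree` helper is hypothetical and written only for illustration.

```python
def parse_tree(text):
    """Parse a bracketed string such as '((the movie) (was great))' into nested tuples."""
    tokens = text.replace("(", " ( ").replace(")", " ) ").split()
    pos = 0

    def parse():
        nonlocal pos
        token = tokens[pos]
        pos += 1
        if token != "(":
            return token              # a leaf: a single word
        children = []
        while tokens[pos] != ")":
            children.append(parse())  # recurse into each constituent
        pos += 1                      # consume the closing ')'
        return tuple(children)

    tree = parse()
    if pos != len(tokens):
        raise ValueError("unexpected trailing tokens")
    return tree

tree = parse_tree("((the movie) (was great))")
print(tree)  # (('the', 'movie'), ('was', 'great'))
```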

2. Recursive Combination

Each pair of child nodes in the structure is recursively combined using a shared neural function (with the same set of weights applied across the tree). This process creates a parent node representation that captures the meaning of its children.

- For example, combining two words forms a phrase embedding.

- Combining phrases results in clause-level understanding.

- Eventually, clauses merge to represent the full sentence.

The recursive combination is what allows RvNNs to capture hierarchical relationships in a way that traditional neural networks cannot.
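The recursive combination can be sketched as a function that walks the tree: leaves return their word vectors, and every internal node applies the same `compose` function to its two children. This is a minimal illustration assuming binary trees, randomly initialized (untrained) word embeddings, and a common tanh composition; `encode`, `embed`, and the toy vocabulary are hypothetical names.

```python
import math
import random

def compose(c1, c2, W, b):
    # p = tanh(W [c1; c2] + b): the same W and b are used at every internal node
    x = c1 + c2
    return [math.tanh(sum(w * xj for w, xj in zip(row, x)) + bi) for row, bi in zip(W, b)]

def encode(tree, embed, W, b):
    """Recursively build a vector for a binary tree of words (nested 2-tuples)."""
    if isinstance(tree, str):   # leaf: look up the word vector
        return embed[tree]
    left, right = tree          # internal node: exactly two children
    return compose(encode(left, embed, W, b), encode(right, embed, W, b), W, b)

d = 3
rng = random.Random(42)
vocab = ["the", "movie", "was", "great"]
embed = {w: [rng.uniform(-1, 1) for _ in range(d)] for w in vocab}
W = [[rng.uniform(-0.5, 0.5) for _ in range(2 * d)] for _ in range(d)]
b = [0.0] * d

phrase = encode(("the", "movie"), embed, W, b)                        # words -> phrase
sentence = encode((("the", "movie"), ("was", "great")), embed, W, b)  # phrases -> sentence
```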

3. Propagation Through the Hierarchy

The representations are propagated upward in the tree structure. Each new parent node becomes a child for the next recursive step. This bottom-up propagation continues until the entire structure is represented by a single root node.

- At this stage, the root node vector encodes the semantic or structural meaning of the entire input.

- This hidden representation acts as a compact summary of the hierarchical data.
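The bottom-up propagation can also be written without recursion, using an explicit stack to visit nodes in post-order so that every child is computed before its parent. The sketch below keeps the vector of every node (words, phrases, and the full sentence), which is useful if intermediate phrase embeddings are needed later. As before, the names and random weights are illustrative assumptions, and trees are binary nested tuples.

```python
import math
import random

def compose(c1, c2, W, b):
    # Shared composition: p = tanh(W [c1; c2] + b)
    x = c1 + c2
    return [math.tanh(sum(w * xj for w, xj in zip(row, x)) + bi) for row, bi in zip(W, b)]

def propagate(tree, embed, W, b):
    """Bottom-up pass over a binary tree using an explicit stack.

    Returns a dict mapping every subtree to its vector. Children are always
    computed before their parent, so the root is the last entry added.
    """
    vectors = {}
    stack = [(tree, False)]
    while stack:
        node, children_done = stack.pop()
        if isinstance(node, str):
            vectors[node] = embed[node]          # leaf word
        elif children_done:
            left, right = node
            vectors[node] = compose(vectors[left], vectors[right], W, b)
        else:
            stack.append((node, True))           # revisit after both children
            stack.append((node[1], False))
            stack.append((node[0], False))
    return vectors

d = 3
rng = random.Random(7)
embed = {w: [rng.uniform(-1, 1) for _ in range(d)] for w in ["the", "movie", "was", "great"]}
W = [[rng.uniform(-0.5, 0.5) for _ in range(2 * d)] for _ in range(d)]
b = [0.0] * d

tree = (("the", "movie"), ("was", "great"))
vectors = propagate(tree, embed, W, b)
root = vectors[tree]   # compact summary of the whole sentence
print(len(vectors))    # 7 nodes: 4 words, 2 phrases, 1 sentence
```

Keying the dictionary by subtree means identical subtrees share one entry; since the same subtree always yields the same vector, this does not change the result.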

4. Output Generation

Once the root node is computed, the network produces its final output. This could be:

- Classification: Sentiment prediction for a sentence (positive/negative).

- Regression: Predicting numerical values like scores.

- Feature Extraction: Passing the learned embeddings to another model for downstream tasks.

The output depends on the problem domain, but it always leverages the hierarchical embedding built through recursion.
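For the classification case, a common choice is a softmax layer on top of the root vector. The sketch below assumes two sentiment classes; the root vector and the weights `U` and `c` are hand-picked stand-ins for values that would normally come from the recursive pass and from training.

```python
import math

def softmax(scores):
    m = max(scores)                           # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def classify(root, U, c):
    """Sentiment head: softmax(U root + c) over the classes [negative, positive]."""
    scores = [sum(u * r for u, r in zip(row, root)) + ci for row, ci in zip(U, c)]
    return softmax(scores)

root = [0.2, -0.1, 0.5]          # stand-in for a root vector from the recursive pass
U = [[-1.0, 0.5, -0.8],          # hypothetical weights for "negative"
     [1.0, -0.5, 0.8]]           # hypothetical weights for "positive"
c = [0.0, 0.0]

probs = classify(root, U, c)
label = ["negative", "positive"][probs.index(max(probs))]
print(label)  # positive
```

For regression, the softmax would typically be replaced by a single linear output; for feature extraction, `root` itself is passed to the downstream model.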

By recursively combining subcomponents, the network effectively learns hierarchical patterns and dependencies, which is crucial for tasks like sentiment analysis or parsing complex structures.

Applications of Recursive Neural Networks

Recursive neural networks have a wide range of applications in deep learning, particularly in areas requiring structured data interpretation:

- Natural Language Processing (NLP): For tasks such as sentiment analysis, question answering, and semantic parsing.

- Mathematical Expression Evaluation: Solving symbolic expressions using hierarchical tree structures.

- Program Analysis: Understanding and predicting patterns in source code structures.

- Image Parsing: Decomposing images into hierarchically structured segments for analysis.

- Knowledge Representation: Building knowledge graphs and hierarchical representations for AI systems.

The versatility of recursive neural networks (RvNNs) makes them a valuable tool for AI researchers and practitioners who work with structured and hierarchical data. According to ABI Research, the artificial intelligence (AI) software market was valued at US$122 billion in 2024 and, growing at a compound annual growth rate (CAGR) of 25%, is expected to reach US$467 billion by 2030. This expansion underscores the growing importance of advanced deep learning models, recursive neural networks among them, in shaping the future of artificial intelligence.


Difference Between Recursive Neural Network and CNN

Although both recursive neural networks (RvNNs) and convolutional neural networks (CNNs) are powerful in deep learning, they serve different purposes:

Data Structure:

- RvNN: Works best with tree-structured or hierarchical data.

- CNN: Excels with grid-like data such as images or time-series.

Computation Flow:

- RvNN: Recursively applies the same weights in a hierarchical structure.

- CNN: Uses filters/kernels to capture local spatial features.

Applications:

- RvNN: Ideal for NLP, parsing, and structured representation tasks.

- CNN: Best for image recognition, computer vision, and spatial pattern detection.

Representation Power:

- RvNN: Captures long-range hierarchical dependencies.

- CNN: Captures local spatial correlations.
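The difference in computation flow can be illustrated by applying the same two-input function in two ways: CNN-style, over every window of adjacent tokens, and RvNN-style, only where the parse tree groups constituents. Here `combine` is a hypothetical stand-in for a learned function that simply records which units were merged, and the CNN analogy is limited to a single layer with a kernel width of 2 and a stride of 1.

```python
def combine(a, b):
    # Stand-in for a learned function: just records which units were merged.
    return f"({a} {b})"

def conv_windows(tokens, f):
    """CNN-style: apply f to every window of two adjacent tokens (stride 1)."""
    return [f(tokens[i], tokens[i + 1]) for i in range(len(tokens) - 1)]

def tree_reduce(tree, f):
    """RvNN-style: apply f only where the tree says two constituents belong together."""
    if isinstance(tree, str):
        return tree
    left, right = tree
    return f(tree_reduce(left, f), tree_reduce(right, f))

tokens = ["the", "movie", "was", "great"]
print(conv_windows(tokens, combine))
# ['(the movie)', '(movie was)', '(was great)']
print(tree_reduce((("the", "movie"), ("was", "great")), combine))
# ((the movie) (was great))
```

The convolution produces overlapping local features, including `(movie was)`, which crosses a phrase boundary, while the tree-guided reduction follows the sentence's structure and ends in a single representation. Real CNNs widen their receptive field by stacking layers and pooling, but their windows are still fixed by position rather than by syntax.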

Advantages of Recursive Neural Networks

Recursive neural networks offer several advantages over traditional neural network architectures:

- Hierarchical Understanding: They can effectively capture hierarchical structures in data.

- Parameter Efficiency: Shared weights reduce the number of parameters and improve generalization.

- Flexibility: Applicable to any structured data, not just sequences or grids.

- Better Representations: Generate meaningful embeddings for nodes and entire structures.

- Strong Results on Structured NLP Tasks: Particularly effective for tasks such as sentiment analysis and semantic parsing, where the meaning of a sentence depends on how its phrases are nested.

These advantages make recursive neural networks a strong choice when dealing with structured and complex datasets.
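The parameter-efficiency point can be made concrete by counting. Assuming a single composition layer with a d x 2d weight matrix and a bias of size d, the parameter count depends only on d, while the number of times the layer is applied grows with the size of the tree (one application per internal node). The helper names and the toy trees below are illustrative.

```python
def composition_params(d):
    """Parameters in one shared composition layer: W (d x 2d) plus bias b (d)."""
    return d * (2 * d) + d

def count_compositions(tree):
    """How many times the shared layer is applied: once per internal node."""
    if isinstance(tree, str):   # a leaf word needs no composition
        return 0
    left, right = tree
    return 1 + count_compositions(left) + count_compositions(right)

short_tree = ("good", "film")
# Arbitrary balanced bracketing of eight words, not a real parse.
long_tree = ((("the", "plot"), ("was", "thin")), (("but", "the"), ("cast", "shone")))

d = 100
print(composition_params(d))           # 20100, regardless of input size
print(count_compositions(short_tree))  # 1
print(count_compositions(long_tree))   # 7
```

Both trees are processed with the same 20,100 parameters; only the number of times those parameters are reused changes.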

Recursive neural networks are transforming the way deep learning models understand structured data. By applying weights recursively over tree-like structures, they capture hierarchical relationships that traditional networks often miss. With applications ranging from natural language processing to program analysis, RvNNs have established themselves as an essential tool for AI researchers and developers.

Artificial Intelligence is transforming sectors like IT, healthcare, finance, education, and transportation, as well as the growing startup ecosystem in Hyderabad. As AI drives efficiency, improves decision-making, and fosters innovation, Hyderabad is emerging as one of India’s leading hubs for AI development. For both students and professionals, pursuing an Artificial Intelligence course in Hyderabad can unlock promising career paths in AI, machine learning, and data science.

DataMites is a leading training institute for Artificial Intelligence courses in Bangalore, along with programs in Machine Learning, Data Science, and other in-demand technologies. Learners gain access to globally recognized certifications accredited by IABAC and NASSCOM FutureSkills, supported by comprehensive career services that include resume building, mock interviews, and strong industry connections. With training centers in Kudlu Gate, BTM Layout, and Marathahalli in Bangalore, DataMites offers both online and classroom learning options to suit diverse learning needs.