Reinforcement Learning: Basics and Applications

Reinforcement Learning (RL) is a type of machine learning where agents learn by interacting with their environment to maximize rewards. This blog explores its fundamentals and real-world applications across various industries.

Have you ever wondered how a self-driving car decides when to stop, turn, or accelerate? Or how a video game character learns to adapt to your moves? Reinforcement learning (RL) is one of the key techniques behind advances like these. It is a branch of artificial intelligence that enables machines to make decisions in complex, changing environments.

Reinforcement learning isn’t just a niche topic in AI; it’s a game-changer. From robotics to finance, RL holds transformative potential across numerous fields. This blog covers RL fundamentals, essential algorithms, real-world applications, and emerging trends. By the end, you’ll have a solid understanding of how RL works and why it matters for the future of AI. That is especially useful if you are pursuing an artificial intelligence course.

What is Reinforcement Learning?

Reinforcement Learning (RL) is a branch of machine learning in which an agent learns to make decisions by interacting with an environment. The agent takes actions and observes the resulting states and rewards. It then uses this experience to develop a policy (a strategy for choosing actions) that maximizes cumulative reward over time. Formally, RL problems are usually modeled as a Markov Decision Process (MDP), which is defined by states, actions, transition probabilities, and rewards.

Key Components of Reinforcement Learning: The Agent-Environment Cycle

A central concept in RL is the Agent-Environment Cycle. It describes the interaction between the agent (the learner or decision-maker) and the environment (the world the agent interacts with). Here are the key components of this cycle:

- Agent: The decision-maker that takes actions to maximize cumulative rewards, learning from past experiences.

- Environment: The external system that responds to the agent’s actions. The agent cannot control its rules and dynamics and often does not know them in advance. It observes only the resulting states and rewards.

- Actions: The choices available to the agent. These can be discrete (a finite set of options) or continuous (values within a range). Actions lead to changes in state and to rewards.

- States: Represent the current situation within the environment, guiding the agent's next action.

- Rewards: Immediate feedback on the value of an action; higher rewards reinforce favorable actions.

Goal of Reinforcement Learning vs. Other ML Types

Reinforcement Learning focuses on maximizing cumulative reward through trial and error. Supervised learning, by contrast, learns from labeled examples of correct outputs, and unsupervised learning finds structure in unlabeled data. An RL agent is never told the correct action. It receives only a reward signal, often delayed, and must work out which sequence of decisions leads to its goal. This emphasis on sequential, goal-oriented decision-making makes RL a vital topic in any artificial intelligence course.

Core Concepts in Reinforcement Learning

The following core concepts explain how RL agents learn to act within an environment so that they optimize their total reward over time:

1. Reward and Punishment: Learning from Feedback

Agents learn through positive and negative rewards, where positive feedback encourages certain actions and negative feedback discourages others. This feedback loop enables agents to adapt and maximize cumulative rewards over time, similar to human learning through rewards and punishments.
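"Maximize cumulative rewards" is usually made precise as the discounted return, G = r_0 + γ·r_1 + γ²·r_2 + ... The discount factor γ is a number between 0 and 1 that makes near-term rewards count more than distant ones. It is not defined in the text above, so treat it as an assumption of this minimal sketch:

```python
def discounted_return(rewards, gamma=0.9):
    """Return the sum of gamma**t * rewards[t], the discounted cumulative reward."""
    total = 0.0
    # Work backwards using the recursion G_t = r_t + gamma * G_{t+1}.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Hypothetical episode: three steps with a small penalty, then a goal reward.
episode_rewards = [-1.0, -1.0, -1.0, 10.0]
print(discounted_return(episode_rewards))  # about 4.58
```

The per-step penalties push the agent toward shorter paths. The discount means a goal reward of 10 reached three steps later is worth only 0.9³ × 10 = 7.29 at the start.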

2. Exploration vs. Exploitation Dilemma

This dilemma involves balancing two strategies:

- Exploration: Trying new actions to discover potentially better strategies.

- Exploitation: Choosing the actions currently known to yield high rewards.

Striking the right balance is crucial. Too much exploitation can keep the agent from discovering better strategies. Too much exploration wastes time on actions already known to be poor, which lowers the rewards the agent collects.
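One common way to manage this trade-off is ε-greedy action selection. With a small probability ε the agent explores by picking a random action. Otherwise it exploits by picking the action with the highest current value estimate. ε-greedy is only one of several strategies, and the value estimates and ε = 0.1 below are illustrative assumptions:

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick an action index: explore with probability epsilon, else exploit."""
    if rng.random() < epsilon:
        # Explore: choose any action uniformly at random.
        return rng.randrange(len(q_values))
    # Exploit: choose the action with the highest estimated value.
    return max(range(len(q_values)), key=lambda a: q_values[a])


rng = random.Random(0)
estimates = [0.2, 0.8, 0.5]  # hypothetical value estimates for 3 actions
choices = [epsilon_greedy(estimates, epsilon=0.1, rng=rng) for _ in range(1000)]
print(choices.count(1) / len(choices))  # mostly action 1, the current best
```

Raising ε makes the agent explore more. A common refinement is to start with a large ε and decay it as the value estimates become more reliable.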

3. Markov Decision Process (MDP)

MDPs provide a mathematical framework for decision-making in RL, consisting of:

- States (S): All possible situations in the environment.

- Actions (A): Choices available to the agent.

- Transition Probabilities (P): Likelihood of moving between states after an action.

- Rewards (R): Feedback the agent receives for taking an action in a state.

The Markov property states that the next state (and reward) depends only on the current state and action, not on the history of earlier states and actions.
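In standard notation, an MDP and its Markov property can be written compactly as below. The discount factor γ is an addition here: many formulations include it, but the list above does not.

```latex
\text{MDP} = (S, A, P, R, \gamma)

P(s' \mid s, a) = \Pr\left[\, s_{t+1} = s' \mid s_t = s,\ a_t = a \,\right]

\text{Markov property:}\quad
\Pr\left[\, s_{t+1} \mid s_t, a_t \,\right]
  = \Pr\left[\, s_{t+1} \mid s_0, a_0, s_1, a_1, \dots, s_t, a_t \,\right]
```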

4. Q-Learning & Policy Gradients

- Q-Learning: A value-based algorithm in which the agent learns a Q-value Q(s, a) for each state-action pair. Each Q-value estimates the expected cumulative future reward of taking action a in state s and then acting optimally. In its basic tabular form, Q-learning is effective in environments with small, discrete sets of states and actions.

- Policy Gradients: These methods represent the policy directly, typically as a probability distribution over actions, and adjust its parameters by gradient ascent on expected reward. This makes them suitable for environments with continuous actions or where a stochastic policy is advantageous.
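For Q-learning, the learning step is a single update rule: Q(s, a) ← Q(s, a) + α[r + γ·max_a' Q(s', a') - Q(s, a)]. The minimal sketch below applies it to a dictionary-based Q-table. The learning rate α = 0.5, the discount γ = 0.9, and the state and action names are illustrative assumptions:

```python
from collections import defaultdict


def q_learning_update(q, state, action, reward, next_state, actions,
                      alpha=0.5, gamma=0.9, done=False):
    """Apply one tabular Q-learning update to q[(state, action)] in place."""
    # Target: immediate reward plus the discounted value of the best next action.
    # A terminal transition has no future reward, so its target is just the reward.
    best_next = 0.0 if done else max(q[(next_state, a)] for a in actions)
    target = reward + gamma * best_next
    # Move the current estimate a fraction alpha of the way toward the target.
    q[(state, action)] += alpha * (target - q[(state, action)])
    return q[(state, action)]


q_table = defaultdict(float)  # unseen (state, action) pairs start at 0.0
actions = ["left", "right"]
# Hypothetical transition: "right" in state 2 reaches the goal (state 3) with reward 1.
print(q_learning_update(q_table, 2, "right", 1.0, 3, actions, done=True))  # 0.5
```

Repeating updates like this over many transitions propagates the goal reward backward. After the update above, moving "right" from state 1 into state 2 would also gain value, even though it earns no immediate reward.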

The Building Blocks of Reinforcement Learning

In RL, an agent develops decision-making skills by engaging with an environment to accomplish specific objectives. Below are the essential components that form the foundation of this process:

The Agent-Environment Interaction

In reinforcement learning, the interaction between the agent and the environment forms a continuous feedback loop. The agent perceives its current situation within the environment, executes a specific action, and is then given a reward. This reinforces certain actions based on their outcomes and is central to how the agent improves over time. Here’s how the loop works:

- Step 1: Agent perceives the environment (state).

- Step 2: Agent takes an action based on its current state.

- Step 3: The environment responds, resulting in an updated state for the agent along with a corresponding reward.

- Step 4: The agent updates its strategy based on the outcome.
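The four steps above map directly onto a simple loop. The sketch below uses a hypothetical five-cell corridor environment (`Corridor`) with a simplified reset/step interface similar in spirit to Gym-style toolkits. The class, its reward values, and the random policy are all illustrative assumptions:

```python
import random


class Corridor:
    """Hypothetical toy environment: positions 0..length-1, goal at the right end."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        """Start a new episode at the left end and return the initial state."""
        self.state = 0
        return self.state

    def step(self, action):
        """Apply action -1 (left) or +1 (right); return (state, reward, done)."""
        # Walls clamp the position to the corridor.
        self.state = min(max(self.state + action, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else -0.1  # small cost per step, bonus at the goal
        return self.state, reward, done


def run_episode(env, policy, max_steps=100):
    """Run the agent-environment cycle until the goal or the step limit."""
    state = env.reset()                          # Step 1: observe the state
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)                   # Step 2: choose an action
        state, reward, done = env.step(action)   # Step 3: new state and reward
        total_reward += reward
        # Step 4: a learning agent would update its strategy here;
        # this sketch's agent acts randomly and does not learn.
        if done:
            break
    return total_reward


rng = random.Random(42)


def random_policy(state):
    return rng.choice([-1, 1])


print(run_episode(Corridor(), random_policy))
```

A policy that always moves right reaches the goal in four steps for a total of 0.7. Any other behavior scores lower, which is exactly the signal a learning agent would use in Step 4.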

Rewards and Value Functions

Rewards are the guiding signals in RL, determining which actions are beneficial. An action that yields high rewards is encouraged, while one with low or negative rewards is discouraged. However, agents must also consider future rewards, which is where value functions come in. A state-value function V(s) estimates the cumulative reward the agent can expect to collect from state s onward while following its policy. An action-value function Q(s, a) does the same for taking action a in state s. These estimates let the agent favor actions with the best long-term payoff rather than just the largest immediate reward.
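When the transition probabilities and rewards are known, state values can be computed with value iteration. This method repeatedly applies the update V(s) ← max_a Σ_s' P(s' | s, a)[r + γV(s')] until the values stop changing. It is one of several ways to obtain values; learning methods such as Q-learning estimate them from experience instead. The three-state MDP (`A`, `B`, and a terminal `goal`) and γ = 0.9 below are hypothetical:

```python
def value_iteration(mdp, gamma=0.9, tol=1e-8):
    """Compute optimal state values for a small, fully known MDP."""
    values = {s: 0.0 for s in mdp}
    while True:
        delta = 0.0
        for state, actions in mdp.items():
            if not actions:  # terminal state: its value stays 0
                continue
            # Best expected (reward + discounted next value) over all actions.
            best = max(
                sum(p * (r + gamma * values[nxt]) for p, nxt, r in outcomes)
                for outcomes in actions.values()
            )
            delta = max(delta, abs(best - values[state]))
            values[state] = best
        if delta < tol:
            return values


# Hypothetical MDP: each action maps to a list of (probability, next_state, reward).
mdp = {
    "A": {"stay": [(1.0, "A", 0.0)],
          "go": [(0.8, "B", 0.0), (0.2, "A", 0.0)]},
    "B": {"stay": [(1.0, "B", 0.0)],
          "go": [(0.8, "goal", 10.0), (0.2, "B", 0.0)]},
    "goal": {},  # terminal
}
values = value_iteration(mdp)
print({s: round(v, 2) for s, v in values.items()})  # {'A': 8.57, 'B': 9.76, 'goal': 0.0}
```

State A never yields an immediate reward, yet its value is high because it leads toward the goal. This is the long-term view that value functions provide.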

Challenges in Reinforcement Learning

RL isn’t without its challenges. Some key issues include:

- Credit Assignment Problem: Determining which earlier actions deserve credit for a reward. This is especially hard when feedback arrives many steps after the actions that caused it.

- Sparse Rewards: When rewards are rare, most of the agent’s actions produce no feedback at all, so it learns slowly.

Techniques like reward shaping (adding intermediate rewards for progress toward the goal) help address these challenges by giving the agent more frequent guidance.
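A well-studied form of reward shaping is potential-based shaping. It adds γΦ(s') - Φ(s) to each reward, where Φ is some potential function over states. Shaping of this form is known not to change which policy is optimal, whereas arbitrary bonus rewards can. The sketch assumes a hypothetical 1-D corridor with the goal at position 10 and uses the negative distance to the goal as Φ. It uses γ = 1, chosen here for an undiscounted episodic task:

```python
GOAL = 10  # hypothetical goal position in a 1-D corridor


def potential(state):
    """Hypothetical potential: higher (less negative) closer to the goal."""
    return -abs(GOAL - state)


def shaped_reward(reward, state, next_state, potential_fn, gamma=1.0, done=False):
    """Return reward + gamma * phi(next_state) - phi(state)."""
    # Terminal states are given potential 0, a common convention in episodic tasks.
    next_phi = 0.0 if done else potential_fn(next_state)
    return reward + gamma * next_phi - potential_fn(state)


# The environment's sparse reward is 0 here, but shaping adds a signal:
print(shaped_reward(0.0, state=3, next_state=4, potential_fn=potential))  # 1.0 (closer)
print(shaped_reward(0.0, state=3, next_state=2, potential_fn=potential))  # -1.0 (farther)
```

Each step toward the goal now earns +1 and each step away earns -1. Even before the agent ever reaches the goal, this gives it a signal to learn from.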

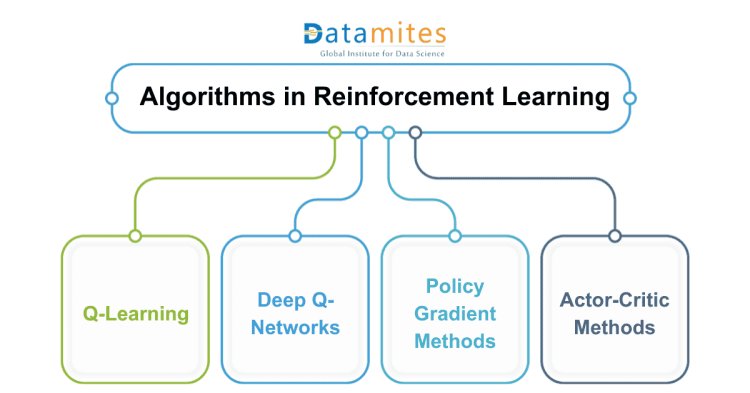

Popular Algorithms in Reinforcement Learning

Reinforcement learning (RL) has seen significant advancements in recent years, leading to the development of several popular algorithms that have been successfully applied to various domains. Here's an overview of some of the most well-known algorithms in reinforcement learning:

Overview of Key Reinforcement Learning Algorithms

Each RL algorithm brings unique strengths to the table. Here’s a quick rundown:

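- Q-Learning: Learns a Q-table with an estimated value for every state-action pair. The best action in a state is the one with the highest Q-value.

- Deep Q-Networks (DQN): Replace the Q-table with a neural network that approximates Q-values. This allows Q-learning to scale to large, complex environments such as video games played from raw pixels.

- Policy Gradient Methods: Adjust the policy directly, making them well suited to continuous action spaces.

- Actor-Critic Methods: Combine an actor (the policy) with a critic (a value function). The critic’s estimates guide and stabilize the actor’s updates.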
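The policy-gradient idea can be seen in a minimal REINFORCE-style sketch on a hypothetical two-armed bandit. This is a one-step problem where each action (arm) pays a reward of 1 with a fixed, unknown probability. The policy is a softmax over one preference per arm, and each update moves the preferences along reward × ∇log π(arm). The payout probabilities, learning rate, and step count are illustrative assumptions. This version uses no baseline and is therefore noisy; actor-critic methods reduce that noise by using a learned value estimate (the critic) as a baseline.

```python
import math
import random


def softmax(prefs):
    """Convert preferences into action probabilities (numerically stable)."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def train_bandit_policy(payout_probs, steps=2000, lr=0.1, seed=0):
    """REINFORCE on a multi-armed bandit with a softmax policy over preferences."""
    rng = random.Random(seed)
    prefs = [0.0] * len(payout_probs)  # policy parameters, one per arm
    for _ in range(steps):
        probs = softmax(prefs)
        # Sample an arm from the current stochastic policy.
        arm = rng.choices(range(len(prefs)), weights=probs)[0]
        reward = 1.0 if rng.random() < payout_probs[arm] else 0.0
        # Gradient of log softmax: (1 - p_arm) for the chosen arm, -p_k for the others.
        for k in range(len(prefs)):
            grad_log = (1.0 if k == arm else 0.0) - probs[k]
            prefs[k] += lr * reward * grad_log
    return softmax(prefs)


# Hypothetical arms: arm 1 pays out more often than arm 0.
print(train_bandit_policy([0.2, 0.8]))
```

The policy is never told which arm is better. It shifts probability toward arm 1 only because rewarded choices get reinforced.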

Real-World Analogy for Each Algorithm

Grasping these algorithms becomes simpler when we relate them to everyday examples:

- Q-Learning: Think of it as a table of best moves, where each entry is learned over time.

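- DQN: Like an experienced player who, instead of memorizing every position, has developed a general sense of which moves are good, even in positions never seen before.

- Policy Gradients: Like practicing a skill such as a tennis serve, gradually adjusting your technique in whichever direction produced better results.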

- Actor-Critic: Picture this as having a coach (critic) who assesses performance while you (actor) play.

Applications of Reinforcement Learning

Reinforcement Learning (RL) has gained significant traction across various domains due to its ability to learn optimal actions through interactions with an environment. Here are several important uses of reinforcement learning:

Robotics

RL has made significant strides in robotics, enhancing robot navigation and manipulation skills. In manufacturing, robots use RL to optimize paths, reducing time and errors.

Real-world examples include:

- Drones that autonomously modify their flight routes on the fly.

- Warehouse robots that streamline inventory sorting and retrieval.

Gaming

RL’s role in gaming is transformative. DeepMind’s AlphaGo, trained in part with RL, defeated top human Go professionals. Later RL-powered agents reached superhuman or world-champion level in chess (AlphaZero) and Dota 2 (OpenAI Five).

Impacts in gaming:

- AI-driven opponents that adapt to players’ styles.

- Dynamic game worlds that adjust difficulty and interactions based on player actions.

Finance

In finance, reinforcement learning (RL) is used to develop trading strategies, allowing algorithms to learn from market patterns and optimize returns. In areas such as trade execution and portfolio management, RL agents can adjust their decisions as market conditions shift.

Applications include:

- Automated trading: Algorithms learn trading policies directly from market data.

- Risk management: RL can help balance expected returns against risk when making investment decisions.

Healthcare

RL’s potential in healthcare is substantial, contributing to treatment personalization and drug discovery. It assists in creating adaptive care plans based on individual patient responses.

Notable applications:

- Personalized treatments: Algorithms can adapt treatment plans as a patient’s condition and responses change over time.

- Drug discovery: RL can help search for promising drug candidates, for example by generating molecules with desired properties.

Autonomous Vehicles

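RL is an active area of autonomous vehicle research. It is used mainly for decision-making tasks such as navigation, maneuver planning, and route optimization. Perception tasks like obstacle detection, by contrast, typically rely on supervised learning.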

Key areas include:

- Self-driving algorithms: Teaching cars to react to traffic and environmental changes.

- Dynamic route planning: Enhancing route selection in real-time by considering current traffic conditions.

Future of Reinforcement Learning in Business and Industry

The future of reinforcement learning (RL) in business and industry looks promising, driven by advancements in technology and increasing data availability. Here are some key areas where RL is expected to make a significant impact:

Recent Advances and Trends

The future of RL is bright, with research aimed at making agents more adaptable and more broadly applicable across domains.

Recent developments include:

- Transfer learning: Reusing knowledge learned in one task or environment to speed up learning in another.

- Multi-agent RL: Where multiple agents collaborate or compete to solve problems.

Role of RL in Industry 4.0

As industries digitize, RL is increasingly applied to automation and optimization problems. It supports smarter machinery, predictive maintenance, and customer personalization.

Key industries include:

- Manufacturing: RL enhances automation in production lines.

- Energy: Enhances the operation of power grids by minimizing waste and boosting efficiency.

Reinforcement Learning is a powerful tool in the evolution of AI, with applications ranging from gaming to healthcare and autonomous vehicles. With hands-on artificial intelligence training, enthusiasts and professionals alike can explore this dynamic field and begin applying it to real problems.

DataMites institute is a premier provider of data science and analytics training, offering a comprehensive range of courses, including Data Science, Data Analytics, Machine Learning, Artificial Intelligence, and Business Analytics. Our Artificial Intelligence Engineer course is designed to equip learners with cutting-edge skills in AI. Accredited by IABAC and NASSCOM FutureSkills, DataMites emphasizes practical learning through hands-on projects and real-world case studies, guided by expert mentors. With over 70,000 successful learners, we are committed to bridging the gap between theoretical knowledge and industry application, empowering professionals to thrive in the dynamic data landscape and advance their careers.

DataMites Training Institute offers a comprehensive Artificial Intelligence course accredited by global certifications, ensuring industry recognition. The program covers machine learning, deep learning, and AI tools with hands-on projects and expert guidance. It provides flexible learning modes, including online and classroom training, catering to beginners and professionals. With globally recognized certification, DataMites empowers learners for AI-driven career opportunities.