Small Language Models are the Future of Agentic AI

Learn why SLMs are the key to building efficient, adaptive, and intelligent autonomous systems of the future.

Artificial Intelligence has evolved rapidly over the past few years, driven largely by massive Large Language Models (LLMs) like GPT-4 and Gemini. These models have demonstrated remarkable reasoning, creativity, and problem-solving capabilities. However, as industries begin deploying AI at scale, the need for efficiency, privacy, and customization is reshaping the future of intelligent systems.

This shift marks the rise of Small Language Models (SLMs): compact, efficient, and domain-adapted models designed for agility and autonomy. Unlike massive LLMs that require vast computational resources, SLMs can power Agentic AI, that is, intelligent agents capable of reasoning, decision-making, and executing tasks independently.

In this article, we’ll explore why Small Language Models are the future of Agentic AI, how they enable scalable automation, and the technologies making them a reality.

Defining Small Language Models (SLMs)

Small Language Models are lightweight AI models trained to deliver high performance at lower computational cost. Typically ranging from 1B to 10B parameters, they are optimized for specific tasks, faster inference, and local or on-device deployment.

While they may not match the broad general capability of larger models, SLMs excel at focused problem-solving through fine-tuning and context-aware reasoning. Modern examples include Phi-3, Mistral, Gemma, and LLaMA 3 (8B), all capable of impressive accuracy despite their smaller size.

By using techniques such as model distillation, quantization, and retrieval augmentation, SLMs deliver strong performance while requiring only a fraction of the energy and computational resources needed by traditional LLMs. According to ABI Research, the Artificial Intelligence (AI) software market was valued at US$122 billion in 2024 and is projected to grow at a compound annual growth rate (CAGR) of 25%, reaching US$467 billion by 2030.

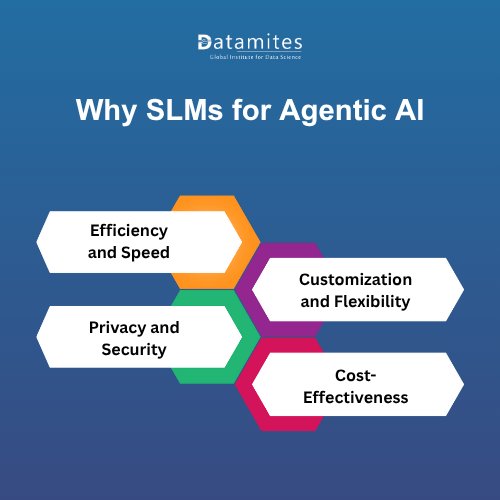

Why SLMs Are Better Suited for Agentic AI

Agentic AI systems, meaning autonomous AI agents that think, plan, and act, need to be responsive, efficient, and adaptable. Here’s why SLMs are ideal for this new paradigm:

1. Efficiency and Speed: SLMs deliver low-latency responses, essential for real-time decision-making and multi-agent coordination. Their smaller architecture enables deployment on edge devices or private servers, ensuring faster execution without cloud dependency.

2. Customization and Flexibility: Small models can be easily fine-tuned for industry-specific or task-specific applications. This makes them well suited to agentic systems that need to specialize, such as a research assistant, workflow manager, or conversational AI agent.

3. Privacy and Security: Because they can run locally, SLMs help preserve data sovereignty and confidentiality, a key factor in sectors like finance, healthcare, and defense where sensitive information cannot leave secure environments.

4. Cost-Effectiveness: SLMs drastically reduce infrastructure, training, and maintenance costs, making advanced AI accessible to startups, small businesses, and individual creators.

According to MarketsandMarkets, the global chatbot market was valued at USD 5.4 billion in 2023 and is projected to reach USD 15.5 billion by 2028, growing at a CAGR of 23.3% between 2023 and 2028.

Technological Enablers of SLM-Based Agentic Systems

The growth of SLMs in agentic AI is supported by several key innovations:

1. Model Distillation and Quantization

One of the biggest challenges in AI development is balancing performance with efficiency.

Model distillation and quantization solve this problem by compressing large models into smaller ones without losing their essential capabilities.

- Model Distillation: In this process, a large “teacher” model transfers its knowledge to a smaller “student” model. The smaller model learns to replicate the outputs and reasoning of the larger one but requires far less computational power.

- Quantization: This technique reduces the numerical precision of model parameters (for example, from 32-bit floating point to 8-bit integers), significantly lowering memory usage and improving inference speed while maintaining acceptable accuracy.
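To make the precision trade-off concrete, here is a minimal sketch of symmetric 8-bit quantization in plain Python. The function names (`quantize_int8`, `dequantize`) and the sample weights are illustrative assumptions, not part of any specific library. Production toolchains typically quantize per channel or per block and use calibration data.

```python
def quantize_int8(weights):
    """Map floats to signed 8-bit integers using one shared scale (symmetric, per-tensor)."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range used here.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the 8-bit integers."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only for illustration.
weights = [0.42, -1.27, 0.05, 0.88, -0.31]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding error per weight is bounded by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight now needs 1 byte instead of 4, at the cost of a rounding error no larger than half the scale.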

Together, these methods make SLMs faster, lighter, and more cost-effective, ideal for powering autonomous AI agents that must respond instantly and operate on limited hardware.
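The teacher-student transfer can be sketched as a loss that pushes the student's output distribution toward the teacher's. The example below uses a common formulation: temperature-softened softmax outputs compared with KL divergence and scaled by the squared temperature. The logits and function names are assumptions for illustration. Real training would run this over batches in a deep learning framework and usually combine it with a standard label loss.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with a temperature that softens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by temperature squared."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


# Hypothetical logits over three classes.
teacher = [4.0, 1.0, 0.2]
close_student = [3.5, 1.2, 0.3]   # already resembles the teacher
far_student = [0.5, 2.0, 1.5]     # disagrees with the teacher

print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

A student whose outputs resemble the teacher's gets a smaller loss, which is the signal that drives training.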

2. Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation (RAG) has emerged as a cornerstone of modern AI systems, bridging the gap between static model knowledge and dynamic real-world data.

Instead of relying solely on pre-trained parameters, RAG-enabled SLMs can retrieve relevant information from external databases, APIs, or document repositories in real time. This gives smaller models access to up-to-date, contextually rich information without retraining.

In agentic systems, RAG allows SLM-based agents to:

- Pull in live data from enterprise systems or the web.

- Ground their responses in factual, domain-specific knowledge.

- Continuously improve decision-making and reasoning accuracy.

This approach ensures that even compact models remain intelligent, context-aware, and reliable.
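As a rough illustration of the retrieve-then-generate flow, the sketch below ranks a few documents against a query using bag-of-words cosine similarity and places the best match into a prompt. The documents, function names, and prompt wording are all hypothetical. A real system would more likely use learned embeddings and a vector store, and would pass the prompt to an SLM.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; in practice this would be a document store or API.
DOCUMENTS = [
    "Refund requests are processed within five business days.",
    "Quantization stores model weights in 8-bit integers.",
    "Support is available on weekdays from 9 am to 6 pm.",
]


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    query_vec = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda doc: cosine(query_vec, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Ground the model by placing retrieved text ahead of the question."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


print(build_prompt("How long do refund requests take?", DOCUMENTS))
```

Because the knowledge lives in the document store rather than in the weights, updating what the agent knows means updating the documents, not retraining the model.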

3. Knowledge Graphs and Memory Systems

For AI agents to function autonomously, they must be capable of storing, recalling, and reusing contextual knowledge much like human memory.

Knowledge graphs provide structured representations of relationships between concepts, allowing SLMs to reason more effectively about connections and dependencies. Meanwhile, memory systems enable agents to retain information across interactions, remember prior actions, and adapt based on user preferences or past performance.

When combined, these technologies transform SLMs into continuously learning agents that can evolve over time rather than restarting from zero with each task.
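A toy version of both ideas fits in a few lines: a graph of subject-relation-object facts that an agent can traverse, plus a bounded memory of past events it can search. The class names, relations, and events below are assumptions made for illustration. Production systems would typically rely on a graph database and a persistent or vector-backed memory store.

```python
from collections import defaultdict, deque


class KnowledgeGraph:
    """Stores (subject, relation, object) facts and follows relations transitively."""

    def __init__(self):
        self.edges = defaultdict(list)

    def add(self, subject, relation, obj):
        self.edges[subject].append((relation, obj))

    def neighbors(self, subject, relation):
        return [o for r, o in self.edges.get(subject, []) if r == relation]

    def reachable(self, start, relation):
        """Breadth-first walk along one relation type, e.g. all transitive dependencies."""
        visited, order, queue = {start}, [], deque([start])
        while queue:
            node = queue.popleft()
            for nxt in self.neighbors(node, relation):
                if nxt not in visited:
                    visited.add(nxt)
                    order.append(nxt)
                    queue.append(nxt)
        return order


class AgentMemory:
    """Keeps the most recent events so the agent can recall them in later interactions."""

    def __init__(self, max_items=100):
        self.events = deque(maxlen=max_items)

    def remember(self, event):
        self.events.append(event)

    def recall(self, keyword):
        return [e for e in self.events if keyword.lower() in e.lower()]


graph = KnowledgeGraph()
graph.add("billing-service", "depends_on", "database")
graph.add("database", "depends_on", "storage")

memory = AgentMemory()
memory.remember("User prefers weekly summary reports")
memory.remember("Restarted billing-service after timeout")

print(graph.reachable("billing-service", "depends_on"))
print(memory.recall("billing"))
```

The graph answers "what is connected to what", while the memory answers "what happened before". An agent can place both kinds of results into the model's context.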

4. Advanced Agent Frameworks

Frameworks such as LangChain, AutoGen, and Hugging Face Transformers Agents are revolutionizing how SLMs are deployed and orchestrated.

These platforms act as coordination layers that connect multiple small models, enabling them to communicate, delegate tasks, and collaborate effectively. For instance:

- LangChain provides tools for building multi-agent pipelines and integrating data retrieval.

- AutoGen enables autonomous interactions between agents to achieve complex goals.

- Hugging Face Agents simplify the creation and management of lightweight AI assistants.

By leveraging these frameworks, developers can create multi-agent systems that are scalable, modular, and capable of performing intricate workflows autonomously.
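The coordination-layer idea can be shown without any framework. The sketch below deliberately does not use the LangChain, AutoGen, or Hugging Face APIs. It is a hypothetical plain-Python coordinator that routes each task to a specialized agent by keyword. The handler functions are stand-ins for calls to small models.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Agent:
    name: str
    skills: List[str]              # keywords this agent claims to handle
    handle: Callable[[str], str]   # stand-in for a call to a small model


class Coordinator:
    """Delegates each task to the first agent whose skills match; the last agent is the fallback."""

    def __init__(self, agents: List[Agent]):
        self.agents = agents

    def route(self, task: str) -> Agent:
        words = task.lower().split()
        for agent in self.agents:
            if any(skill in words for skill in agent.skills):
                return agent
        return self.agents[-1]

    def run(self, tasks: List[str]) -> List[Tuple[str, str]]:
        results = []
        for task in tasks:
            agent = self.route(task)
            results.append((agent.name, agent.handle(task)))
        return results


# Hypothetical agents; each lambda would be replaced by a real model invocation.
agents = [
    Agent("summarizer", ["summarize", "summary"], lambda t: f"[summary of] {t}"),
    Agent("retriever", ["search", "find"], lambda t: f"[results for] {t}"),
    Agent("generalist", [], lambda t: f"[general answer to] {t}"),
]

coordinator = Coordinator(agents)
results = coordinator.run([
    "summarize the quarterly report",
    "search recent invoices",
    "greet the customer",
])
print(results)
```

Real frameworks add message passing, tool calling, and state management on top of this basic routing pattern.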

Industry Applications of Small Language Models and Use Cases

The fusion of Small Language Models (SLMs) and Agentic AI is driving innovation across industries by enabling efficient, secure, and scalable automation.

1. Enterprise Automation

SLM-powered AI agents streamline enterprise workflows by automating repetitive and cognitive tasks. Fine-tuned for specific business functions, they deliver:

- Process Automation: Document handling, data analysis, and reporting.

- Decision Support: Predictive analytics and strategic insights.

- Collaborative AI: Seamless coordination across teams and operations.

These models enable 24/7 autonomous systems that boost productivity and accuracy.

2. Healthcare

In healthcare, SLMs support privacy-focused, real-time intelligence on local devices or secure servers. Key uses include:

- On-Device Medical Assistants for diagnostics and health guidance.

- Clinical Decision Support through data-driven recommendations.

- Efficient Data Management of medical records and reports.

They enable faster, more personalized care while supporting HIPAA and GDPR compliance.

3. Finance

SLM-based Agentic AI strengthens data security and efficiency in finance. Use cases include:

- Risk Detection & Fraud Prevention in real time.

- Regulatory Compliance Monitoring.

- Personalized Virtual Banking Agents for customer support.

SLMs operate securely on-premises, ensuring data confidentiality and reduced cloud reliance.

4. IoT and Edge Computing

Lightweight SLM agents power intelligent edge and IoT ecosystems by processing data locally. They enable:

- Smart Infrastructure Management in utilities and transport.

- Autonomous Robotics for drones and machines.

- Predictive Maintenance to reduce downtime.

These models enhance real-time decision-making and reduce energy costs.

5. Education and Customer Support

Education and customer experience are being reshaped by personalized AI assistants built on Small Language Models.

Unlike large, generalized systems, SLMs can be fine-tuned for specific curricula, learning styles, or brand communication standards.

Notable applications include:

- AI Tutors: Offering adaptive learning experiences tailored to each student’s progress.

- Conversational Chatbots: Providing instant support, resolving queries, and improving customer satisfaction.

- Content Generation: Assisting educators in creating study materials, quizzes, and feedback reports.

Because SLMs are lightweight and cost-efficient, they make AI-driven personalization accessible to schools, startups, and enterprises alike.

The next era of AI won’t be defined by model size, but by efficiency, adaptability, and intelligence at scale. Small Language Models represent the future of Agentic AI, enabling faster, safer, and more cost-effective deployment of intelligent agents across every domain. As research advances and new frameworks emerge, SLMs will form the foundation for truly autonomous and distributed AI ecosystems.
