Genetic Algorithm in Machine Learning

Discover how genetic algorithms help optimize machine learning models, enhance performance, and solve complex problems through evolutionary techniques.

The field of machine learning is constantly evolving with innovative techniques that improve accuracy, efficiency, and adaptability. Among the many optimization methods, Genetic Algorithms (GAs) stand out as a powerful approach inspired by Charles Darwin’s theory of evolution by natural selection. By mimicking biological processes such as selection, crossover, and mutation, GAs can find good solutions to highly complex problems where traditional methods may fall short.

In machine learning, genetic algorithms are widely used for feature selection, hyperparameter tuning, model optimization, and solving combinatorial challenges. Their ability to adapt and evolve over iterations makes them especially valuable in scenarios where the search space is vast and traditional gradient-based methods may not work effectively.

In this article, we’ll explore the fundamentals of genetic algorithms, their working mechanism, applications in machine learning, comparisons with other optimization techniques, and real-world examples that highlight their practical importance.

Fundamentals of Genetic Algorithms

A Genetic Algorithm (GA) is a search-based optimization technique rooted in evolutionary biology. First introduced by John Holland in the 1970s, GAs rely on the principles of natural selection and genetics to solve optimization and search problems.

At the core of GAs lie two concepts: a population (a set of candidate solutions) and a fitness function (a measure of solution quality). Over successive generations, the algorithm applies evolutionary operators such as selection, crossover, and mutation to evolve better solutions.

Key Components of Genetic Algorithms:

- Population – A group of candidate solutions to the problem.

- Chromosomes – Representation of each solution (often encoded as binary strings, real numbers, or other data structures).

- Genes – Elements within a chromosome that define solution parameters.

- Fitness Function – A metric that determines how good a solution is compared to others.

- Selection – Choosing the fittest individuals to reproduce.

- Crossover (Recombination) – Combining parts of two parent solutions to produce offspring.

- Mutation – Randomly altering some genes to maintain diversity and avoid premature convergence.

- Termination Condition – Stopping criteria such as reaching a maximum number of generations or achieving a desired fitness level.
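To make this vocabulary concrete, here is a minimal Python sketch that maps the components onto plain data structures. It assumes a toy problem often called OneMax, where a chromosome is a list of bits and fitness is simply the number of 1s; the constants and function names are illustrative, not taken from any library. Selection, crossover, and mutation would then operate on these structures.

```python
import random

# Illustrative mapping of GA vocabulary onto plain Python data:
#   gene = one bit (0 or 1), chromosome = list of genes, population = list of chromosomes.
GENES_PER_CHROMOSOME = 10
POPULATION_SIZE = 6
MAX_GENERATIONS = 50                     # termination: generation budget
TARGET_FITNESS = GENES_PER_CHROMOSOME    # termination: best possible OneMax score


def random_chromosome(rng):
    """A chromosome encoded as a binary string of genes."""
    return [rng.randint(0, 1) for _ in range(GENES_PER_CHROMOSOME)]


def fitness(chromosome):
    """Toy OneMax fitness: the number of 1s (higher is better)."""
    return sum(chromosome)


def should_stop(generation, best_fitness):
    """Termination condition: generation budget used up, or target fitness reached."""
    return generation >= MAX_GENERATIONS or best_fitness >= TARGET_FITNESS


rng = random.Random(0)
population = [random_chromosome(rng) for _ in range(POPULATION_SIZE)]
best = max(population, key=fitness)
print(fitness(best), should_stop(0, fitness(best)))
```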

Through iterative application of these steps, genetic algorithms mimic natural evolution, enabling solutions to evolve toward optimal or near-optimal outcomes.

How Genetic Algorithms Work in Machine Learning

Genetic Algorithms (GAs) are a class of search and optimization algorithms inspired by the principles of natural selection and evolution, commonly used in machine learning to address complex problems. Here’s a step-by-step breakdown of the process:

1. Initialization

The first step is to generate an initial population of candidate solutions. Each solution is typically represented as a chromosome: a string of parameters, often binary, although real-valued encodings are also common. The population can be created randomly to ensure diversity, or seeded with heuristics to give the algorithm a better starting point. A diverse initial population is important because it increases the chances of covering a wide range of possible solutions.
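A minimal sketch of the three initialization options just described, assuming binary chromosomes (for example, feature masks), real-valued chromosomes with per-gene bounds, and heuristic seeding with known-good chromosomes. All function names and parameters here are hypothetical.

```python
import random


def init_binary(pop_size, n_genes, rng):
    """Random binary chromosomes, e.g. feature-inclusion masks."""
    return [[rng.randint(0, 1) for _ in range(n_genes)] for _ in range(pop_size)]


def init_real(pop_size, bounds, rng):
    """Random real-valued chromosomes; bounds holds one (low, high) pair per gene."""
    return [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]


def init_with_seeds(pop_size, seeds, n_genes, rng):
    """Heuristic start: copy known-good seed chromosomes, fill the rest randomly."""
    population = [list(s) for s in seeds[:pop_size]]
    population += init_binary(pop_size - len(population), n_genes, rng)
    return population


rng = random.Random(42)
binary_pop = init_binary(pop_size=8, n_genes=5, rng=rng)
real_pop = init_real(pop_size=8, bounds=[(0.0, 1.0), (-5.0, 5.0)], rng=rng)
seeded_pop = init_with_seeds(8, seeds=[[1, 1, 1, 1, 1]], n_genes=5, rng=rng)
```

Seeding only part of the population keeps the random remainder available for diversity.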

2. Fitness Evaluation

Once the population is initialized, each candidate solution is evaluated using a fitness function. This function measures how good the solution is with respect to the problem at hand. For example, in machine learning, the fitness function might be the accuracy of a model, the error rate, or another performance metric. The fitness score essentially determines the likelihood of a solution being chosen for the next generation.
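As a minimal sketch of an ML-style fitness function, assume each chromosome holds the weights and bias of a linear threshold classifier, and fitness is its accuracy on a small labeled dataset. The dataset and function names below are hypothetical; in practice the fitness would usually be a validation score of a trained model.

```python
# Hypothetical toy dataset: ((x1, x2), label), where label is 1 when x1 + x2 > 1.
DATA = [((0.1, 0.2), 0), ((0.9, 0.8), 1), ((0.4, 0.3), 0),
        ((0.7, 0.6), 1), ((0.2, 0.9), 1), ((0.5, 0.1), 0)]


def predict(chromosome, x):
    """Chromosome = [w1, w2, b] of a linear threshold classifier."""
    w1, w2, b = chromosome
    return 1 if w1 * x[0] + w2 * x[1] + b > 0 else 0


def fitness(chromosome, data=DATA):
    """Fitness = classification accuracy on the data (higher is better)."""
    correct = sum(predict(chromosome, x) == y for x, y in data)
    return correct / len(data)


population = [[1.0, 1.0, -1.0], [0.0, 0.0, 0.0], [-1.0, -1.0, 1.0]]
scores = [fitness(c) for c in population]   # [1.0, 0.5, 0.0]
```

The first chromosome encodes exactly the rule x1 + x2 > 1, the second always predicts 0, and the third inverts the rule, so their scores span the full range.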

3. Selection

After fitness evaluation, the algorithm moves on to selecting the fittest individuals for reproduction. The idea is to allow stronger candidates (those with higher fitness) a better chance of passing on their traits to the next generation. Common selection techniques include:

- Roulette Wheel Selection: Probability of selection is proportional to the fitness score.

- Tournament Selection: Randomly select a group of solutions and choose the best among them.

- Rank-Based Selection: Assign probabilities based on ranks instead of raw fitness values.

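This step biases reproduction toward high-quality solutions. The strength of that bias (the selection pressure) has to be balanced against diversity: too much pressure lets a few individuals take over the population early.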
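The three selection schemes above can be sketched in a few lines of Python. This is an illustrative version, not a reference implementation: roulette assumes non-negative fitness values with a positive total, and the tournament size `k` is an assumed parameter.

```python
import random


def roulette_select(population, fitnesses, rng):
    """Roulette wheel: probability proportional to fitness (needs non-negative values)."""
    return rng.choices(population, weights=fitnesses, k=1)[0]


def tournament_select(population, fitnesses, rng, k=3):
    """Tournament: sample k distinct individuals at random and return the fittest."""
    contenders = rng.sample(range(len(population)), k)
    best = max(contenders, key=lambda i: fitnesses[i])
    return population[best]


def rank_select(population, fitnesses, rng):
    """Rank-based: weight = rank (1 for worst, N for best), ignoring raw fitness scale."""
    order = sorted(range(len(population)), key=lambda i: fitnesses[i])
    ranks = [0] * len(population)
    for rank, i in enumerate(order, start=1):
        ranks[i] = rank
    return rng.choices(population, weights=ranks, k=1)[0]


rng = random.Random(1)
population = ["A", "B", "C", "D"]
fitnesses = [1.0, 2.0, 3.0, 10.0]
# With k equal to the population size, the tournament always returns the best ("D").
picks = [tournament_select(population, fitnesses, rng, k=4) for _ in range(5)]
```

Rank-based selection gives "D" weight 4 rather than 10 out of 16, which is why it is often used when one individual's raw fitness would otherwise dominate the wheel.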

4. Crossover (Recombination)

In this stage, two selected parent solutions are combined to create offspring. This process, inspired by biological reproduction, allows new solutions to inherit traits from both parents. Different crossover strategies exist, such as:

- Single-Point Crossover: Swap parts of two chromosomes at a single crossover point.

- Multi-Point Crossover: Exchange segments at multiple points.

- Uniform Crossover: Each gene of the offspring is randomly chosen from one of the parents.

Crossover is usually the main search operator in a GA: it recombines genetic material from promising parents into new candidate solutions.
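A minimal Python sketch of the three crossover strategies on list-encoded chromosomes. Multi-point crossover is shown with two cut points; the function names are illustrative.

```python
import random


def single_point(p1, p2, rng):
    """Swap tails after one random cut point."""
    cut = rng.randint(1, len(p1) - 1)
    return p1[:cut] + p2[cut:], p2[:cut] + p1[cut:]


def two_point(p1, p2, rng):
    """Multi-point crossover with two cuts: swap the middle segment."""
    a, b = sorted(rng.sample(range(1, len(p1)), 2))
    return (p1[:a] + p2[a:b] + p1[b:],
            p2[:a] + p1[a:b] + p2[b:])


def uniform(p1, p2, rng):
    """Each gene position independently comes from either parent (50/50)."""
    c1, c2 = [], []
    for g1, g2 in zip(p1, p2):
        if rng.random() < 0.5:
            g1, g2 = g2, g1
        c1.append(g1)
        c2.append(g2)
    return c1, c2


rng = random.Random(7)
mom, dad = [0] * 8, [1] * 8
children = [single_point(mom, dad, rng), two_point(mom, dad, rng), uniform(mom, dad, rng)]
```

Using all-0 and all-1 parents makes it easy to see which parent each gene came from: at every position, one child has a 0 and the other a 1.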

5. Mutation

To avoid premature convergence and ensure diversity, mutation introduces small random changes in offspring. For instance, a binary 0 might flip to 1, or a parameter value might be slightly adjusted. Mutation prevents the population from becoming too similar and stuck in local optima, enabling exploration of new regions of the solution space.
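Both mutation examples from the paragraph above can be sketched as follows, assuming a per-gene mutation rate and, for real-valued genes, Gaussian noise clipped to per-gene bounds. The names and parameters are hypothetical.

```python
import random


def bit_flip(chromosome, rate, rng):
    """Binary mutation: flip each bit independently with probability `rate`."""
    return [1 - g if rng.random() < rate else g for g in chromosome]


def gaussian(chromosome, rate, sigma, bounds, rng):
    """Real-valued mutation: nudge genes by N(0, sigma) noise, clipped to (low, high)."""
    out = []
    for g, (lo, hi) in zip(chromosome, bounds):
        if rng.random() < rate:
            g = min(hi, max(lo, g + rng.gauss(0.0, sigma)))
        out.append(g)
    return out


rng = random.Random(3)
flipped = bit_flip([0, 0, 0, 0], rate=1.0, rng=rng)    # rate 1.0 flips every bit
unchanged = bit_flip([0, 1, 0, 1], rate=0.0, rng=rng)  # rate 0.0 changes nothing
nudged = gaussian([0.5, 0.5], rate=1.0, sigma=0.1, bounds=[(0.0, 1.0)] * 2, rng=rng)
```

In practice the rate is usually small (a common rule of thumb is about one expected flip per chromosome), so mutation perturbs solutions without destroying what selection and crossover have built.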

6. Replacement

Once new offspring are generated, the next step is forming a new generation. The offspring typically replace less-fit solutions in the current population. Strategies vary: some algorithms replace the entire population, while others keep the top-performing individuals (elitism) so that the best solutions are never lost.
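Here is a minimal sketch of replacement with elitism, assuming a fixed population size: the `n_elite` best parents survive unchanged and the best offspring fill the remaining slots. The function name and toy fitness are illustrative.

```python
def replace_with_elitism(population, offspring, fitness, n_elite=1):
    """Form the next generation: n_elite best parents, then the best offspring.

    The new generation has the same size as the old population.
    """
    elites = sorted(population, key=fitness, reverse=True)[:n_elite]
    survivors = sorted(offspring, key=fitness, reverse=True)[:len(population) - n_elite]
    return elites + survivors


fitness = sum  # toy fitness: number of 1s in a bit list
parents = [[1, 1, 1], [0, 0, 0], [1, 0, 0]]
children = [[0, 1, 0], [0, 0, 1], [0, 0, 0]]
next_gen = replace_with_elitism(parents, children, fitness, n_elite=1)
```

Every child here is worse than the best parent, yet elitism carries `[1, 1, 1]` forward, so the best fitness never drops between generations.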

7. Iteration (Repeat the Process)

The cycle of evaluation, selection, crossover, and mutation is repeated over multiple generations. This iterative process allows solutions to evolve toward optimal or near-optimal states. The algorithm continues until a stopping criterion is met, such as reaching a maximum number of generations, achieving a predefined accuracy level, or when improvements between generations become negligible.
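Putting the seven steps together, the following is a minimal generational GA in Python. It assumes the toy OneMax problem (maximize the number of 1s in a bit string) and picks one option per step: binary tournament selection, single-point crossover, bit-flip mutation, and elitist replacement. It stops on any of the three criteria mentioned above. The parameter values are illustrative, not tuned.

```python
import random


def run_ga(n_genes=20, pop_size=30, max_generations=200, mutation_rate=0.02,
           patience=25, seed=0):
    """Minimal generational GA for toy OneMax (maximize the number of 1s).

    Stops at max_generations, at a perfect score, or after `patience`
    generations without improvement. Returns (best chromosome, generations run).
    """
    rng = random.Random(seed)
    fitness = sum

    def tournament(pop):
        # Binary tournament selection: the fitter of two random individuals.
        a, b = rng.sample(pop, 2)
        return a if fitness(a) >= fitness(b) else b

    # 1. Initialization and 2. fitness evaluation
    population = [[rng.randint(0, 1) for _ in range(n_genes)] for _ in range(pop_size)]
    best = max(population, key=fitness)
    generation, stale = 0, 0

    # 7. Iterate until a stopping criterion is met
    while generation < max_generations and fitness(best) < n_genes and stale < patience:
        offspring = [best[:]]                                        # elitism
        while len(offspring) < pop_size:
            p1, p2 = tournament(population), tournament(population)  # 3. selection
            cut = rng.randint(1, n_genes - 1)                        # 4. crossover
            child = p1[:cut] + p2[cut:]
            child = [1 - g if rng.random() < mutation_rate else g for g in child]  # 5. mutation
            offspring.append(child)
        population = offspring                                       # 6. replacement
        candidate = max(population, key=fitness)                     # 2. evaluation
        if fitness(candidate) > fitness(best):
            best, stale = candidate, 0
        else:
            stale += 1
        generation += 1
    return best, generation


best, generations = run_ga()
print(sum(best), generations)
```

For a real ML task, only the chromosome encoding and `fitness` would change; the loop structure stays the same.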


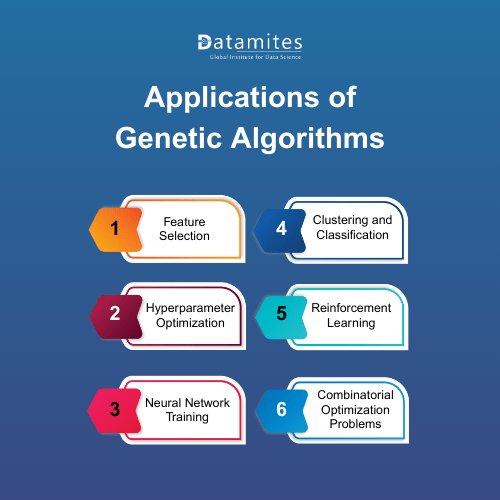

Applications of Genetic Algorithms in Machine Learning

Genetic algorithms can address optimization challenges at several stages of the ML pipeline.

1. Feature Selection

Choosing the right set of features is critical for model performance. With n features there are 2^n possible subsets, far too many to test exhaustively when n is large. GAs search this space without enumerating it, evolving feature subsets that maximize accuracy while reducing dimensionality.
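A minimal sketch of GA-based feature selection, assuming each chromosome is a bit mask over features. For a self-contained example, it uses hypothetical synthetic data where only features 0 and 1 carry signal, and a simple nearest-centroid classifier as a stand-in model (any classifier and validation metric could take its place). Fitness is hold-out accuracy minus a small per-feature penalty. The example uses only 6 features so it runs instantly; the technique matters when the number of features is large.

```python
import random
from functools import lru_cache

rng = random.Random(0)
N_FEATURES = 6


def make_row():
    """Hypothetical synthetic sample: only features 0 and 1 determine the label."""
    x = [rng.gauss(0.0, 1.0) for _ in range(N_FEATURES)]
    return x, 1 if x[0] + x[1] > 0 else 0


train = [make_row() for _ in range(200)]
holdout = [make_row() for _ in range(100)]


@lru_cache(maxsize=None)  # cache: fitness evaluations are the expensive part of a GA
def subset_accuracy(mask):
    """Hold-out accuracy of a nearest-centroid classifier on features with mask[i] == 1."""
    idx = [i for i, m in enumerate(mask) if m]
    if not idx:
        return 0.0
    centroids = {}
    for label in (0, 1):
        rows = [x for x, y in train if y == label]
        centroids[label] = [sum(r[i] for r in rows) / len(rows) for i in idx]

    def dist(x, label):
        return sum((x[i] - c) ** 2 for i, c in zip(idx, centroids[label]))

    hits = sum(min((0, 1), key=lambda lab: dist(x, lab)) == y for x, y in holdout)
    return hits / len(holdout)


def fitness(mask, penalty=0.01):
    """Accuracy minus a small cost per selected feature, favoring compact subsets."""
    return subset_accuracy(tuple(mask)) - penalty * sum(mask)


def evolve_masks(pop_size=20, generations=30, mutation_rate=0.1):
    pop = [[rng.randint(0, 1) for _ in range(N_FEATURES)] for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        next_pop = pop[:2]                                    # elitism
        while len(next_pop) < pop_size:
            p1 = max(rng.sample(pop, 3), key=fitness)         # tournament selection
            p2 = max(rng.sample(pop, 3), key=fitness)
            child = [a if rng.random() < 0.5 else b for a, b in zip(p1, p2)]      # uniform crossover
            child = [1 - g if rng.random() < mutation_rate else g for g in child]  # bit-flip mutation
            next_pop.append(child)
        pop = next_pop
    return max(pop, key=fitness)


best_mask = evolve_masks()
print(best_mask, round(fitness(best_mask), 3))
```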

2. Hyperparameter Optimization

Hyperparameters such as learning rate, number of hidden layers, and kernel parameters significantly impact model performance. GAs can automate hyperparameter tuning, reducing the need for manual trial and error.
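A minimal sketch of GA-based hyperparameter tuning, assuming a mixed chromosome: a learning rate encoded on a log10 scale plus an integer layer count. `validation_score` is a hypothetical stand-in for "train the model and return validation accuracy"; it peaks near a learning rate of 1e-3 and 3 layers only so the example runs instantly. Selection here is truncation selection (keep the top half), a simple scheme not listed in the selection step above.

```python
import math
import random

rng = random.Random(0)

# Illustrative search space: log10(learning rate) and number of hidden layers.
LOG_LR_RANGE = (-5.0, -1.0)   # learning rate between 1e-5 and 1e-1
LAYER_RANGE = (1, 6)


def decode(chrom):
    log_lr, layers = chrom
    return 10 ** log_lr, layers


def validation_score(lr, layers):
    """HYPOTHETICAL stand-in for training a model; a real GA would train and validate here."""
    return 1.0 - 0.1 * (math.log10(lr) + 3) ** 2 - 0.05 * (layers - 3) ** 2


def fitness(chrom):
    return validation_score(*decode(chrom))


def random_chrom():
    return [rng.uniform(*LOG_LR_RANGE), rng.randint(*LAYER_RANGE)]


def mutate(chrom):
    """Gaussian nudge on log-lr; occasional +/-1 step on layers; both clipped to range."""
    log_lr, layers = chrom
    log_lr = min(LOG_LR_RANGE[1], max(LOG_LR_RANGE[0], log_lr + rng.gauss(0, 0.3)))
    if rng.random() < 0.3:
        layers = min(LAYER_RANGE[1], max(LAYER_RANGE[0], layers + rng.choice((-1, 1))))
    return [log_lr, layers]


def tune(pop_size=16, generations=25):
    pop = [random_chrom() for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        parents = pop[: pop_size // 2]               # truncation selection (parents survive)
        children = []
        while len(children) < pop_size - len(parents):
            a, b = rng.sample(parents, 2)
            child = [a[0], b[1]] if rng.random() < 0.5 else [b[0], a[1]]  # gene-wise crossover
            children.append(mutate(child))
        pop = parents + children
    return max(pop, key=fitness)


best = tune()
lr, layers = decode(best)
print(f"learning rate ~ {lr:.2e}, layers = {layers}")
```

Searching the learning rate on a log scale is a common design choice, because plausible values span several orders of magnitude.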

3. Neural Network Training

Instead of traditional gradient descent, GAs can optimize the weights of neural networks. This is especially useful when the loss function is non-differentiable or when the error surface has many local minima.
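As a minimal sketch, assume a tiny 2-2-1 network with step activations learning XOR. The step function's derivative is zero almost everywhere, so gradient descent gets no signal, but a GA only needs to compare fitness scores. The network layout, elite size, and mutation scale are assumptions chosen for illustration.

```python
import random

rng = random.Random(1)
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def step(z):
    """Non-differentiable activation: its gradient is zero almost everywhere."""
    return 1 if z > 0 else 0


def forward(w, x):
    """2-2-1 network; w holds 9 weights: two hidden units (2 inputs + bias) and an output."""
    h1 = step(w[0] * x[0] + w[1] * x[1] + w[2])
    h2 = step(w[3] * x[0] + w[4] * x[1] + w[5])
    return step(w[6] * h1 + w[7] * h2 + w[8])


def fitness(w):
    return sum(forward(w, x) == y for x, y in XOR)   # number of correct outputs, 0..4


def evolve(pop_size=50, generations=200, sigma=0.5):
    pop = [[rng.uniform(-2, 2) for _ in range(9)] for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        if fitness(pop[0]) == 4:
            break
        elite = pop[:10]
        children = []
        while len(children) < pop_size - len(elite):
            a, b = rng.sample(elite, 2)
            # Uniform crossover of weights followed by Gaussian mutation of every weight.
            child = [(wa if rng.random() < 0.5 else wb) + rng.gauss(0, sigma)
                     for wa, wb in zip(a, b)]
            children.append(child)
        pop = elite + children
    return max(pop, key=fitness)


best = evolve()
print(fitness(best), "of 4 XOR cases correct")
```

For reference, the hand-set weights `[1, 1, -0.5, 1, 1, -1.5, 1, -1, -0.5]` (an OR unit minus an AND unit) solve XOR in this network, which shows the search space does contain a perfect solution.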

4. Clustering and Classification

GAs help in clustering tasks by optimizing cluster centers or improving classification rules for complex datasets.
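A minimal sketch of the cluster-center idea, assuming hypothetical one-dimensional data and k = 2. Each chromosome is a sorted list of centers, and fitness is the negative sum of squared distances from each point to its nearest center (the quantity k-means also minimizes).

```python
import random

rng = random.Random(5)
# Hypothetical 1-D data with two visible groups, around 1.0 and around 8.0.
DATA = [0.8, 1.1, 1.3, 0.9, 7.7, 8.2, 8.0, 7.9]
K = 2


def sse(centers):
    """Sum of squared distances from each point to its nearest center."""
    return sum(min((x - c) ** 2 for c in centers) for x in DATA)


def fitness(centers):
    return -sse(centers)        # the GA maximizes, so negate the error


def evolve_centers(pop_size=20, generations=60, sigma=0.5):
    lo, hi = min(DATA), max(DATA)
    pop = [sorted(rng.uniform(lo, hi) for _ in range(K)) for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        parents = pop[: pop_size // 4]               # truncation selection (keeps elites)
        children = []
        while len(children) < pop_size - len(parents):
            a, b = rng.choice(parents), rng.choice(parents)
            # Gene-wise crossover plus Gaussian mutation of each center.
            child = [rng.choice(pair) + rng.gauss(0, sigma) for pair in zip(a, b)]
            children.append(sorted(child))           # keep centers ordered per chromosome
        pop = parents + children
    return max(pop, key=fitness)


best = evolve_centers()
print([round(c, 2) for c in best], round(sse(best), 3))
```

Sorting the centers inside each chromosome is a simple guard against two parents listing the same clusters in different orders, which would make crossover mix unrelated centers.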

5. Reinforcement Learning

In reinforcement learning, GAs are used to evolve policies for agents in environments where gradient-based learning is inefficient or infeasible.
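A minimal sketch of policy evolution, assuming a hypothetical toy environment: a point mass on a line must reach and stay at x = 5. The policy pushes left or right depending on the sign of a linear function of its observation (offset from the target, velocity). This makes the episode return a non-differentiable function of the policy parameters, so the GA ranks policies by return alone.

```python
import random

rng = random.Random(2)


def episode_return(params, steps=50):
    """HYPOTHETICAL toy environment; reward is the negative distance to x = 5 at each step."""
    w_pos, w_vel, bias = params
    x, v, total = 0.0, 0.0, 0.0
    for _ in range(steps):
        offset = x - 5.0
        action = 1.0 if w_pos * offset + w_vel * v + bias < 0 else -1.0  # push right or left
        v = 0.9 * v + 0.1 * action      # damped velocity update
        x += v
        total -= abs(x - 5.0)
    return total


def evolve_policy(pop_size=30, generations=40, sigma=0.3):
    pop = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=episode_return, reverse=True)  # fitness = return of one episode
        elite = pop[:6]
        children = []
        for _ in range(pop_size - len(elite)):
            a, b = rng.sample(elite, 2)
            cut = rng.randint(1, 2)                 # single-point crossover on 3 parameters
            children.append([g + rng.gauss(0, sigma) for g in a[:cut] + b[cut:]])
        pop = elite + children
    return max(pop, key=episode_return)


best_policy = evolve_policy()
print([round(p, 2) for p in best_policy], round(episode_return(best_policy), 1))
```

In stochastic environments, fitness is usually averaged over several episodes, because a single noisy return can mislead selection.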

6. Combinatorial Optimization Problems

Tasks like scheduling, resource allocation, and pathfinding in graphs benefit from GAs’ ability to explore vast solution spaces efficiently.
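Combinatorial problems usually need a permutation encoding. Ordinary crossover would duplicate or drop items, so permutation-safe operators are used instead. Below is a minimal sketch for a small traveling-salesperson-style routing task on hypothetical city coordinates. It uses a simplified order crossover (keep a slice of one parent, fill the remaining positions in the other parent's order) and a swap mutation. Both operators always produce valid tours. `math.dist` requires Python 3.8 or later.

```python
import math
import random

rng = random.Random(4)
# Hypothetical city coordinates.
CITIES = [(0, 0), (1, 5), (5, 2), (6, 6), (8, 3), (2, 8), (7, 9), (3, 3)]


def tour_length(tour):
    """Length of the closed tour that visits cities in the given order."""
    return sum(math.dist(CITIES[tour[i]], CITIES[tour[(i + 1) % len(tour)]])
               for i in range(len(tour)))


def order_crossover(p1, p2):
    """Simplified OX: copy a slice from p1, fill the gaps with p2's remaining cities in order."""
    a, b = sorted(rng.sample(range(len(p1)), 2))
    child = [None] * len(p1)
    child[a:b + 1] = p1[a:b + 1]
    fill = [city for city in p2 if city not in child]
    for i in range(len(child)):
        if child[i] is None:
            child[i] = fill.pop(0)
    return child


def swap_mutation(tour, rate=0.2):
    """With probability `rate`, swap two randomly chosen cities."""
    tour = tour[:]
    if rng.random() < rate:
        i, j = rng.sample(range(len(tour)), 2)
        tour[i], tour[j] = tour[j], tour[i]
    return tour


def solve(pop_size=40, generations=100):
    n = len(CITIES)
    pop = [rng.sample(range(n), n) for _ in range(pop_size)]   # random permutations
    for _ in range(generations):
        pop.sort(key=tour_length)                               # shorter tour = fitter
        elite = pop[:8]
        pop = elite + [swap_mutation(order_crossover(*rng.sample(elite, 2)))
                       for _ in range(pop_size - len(elite))]
    return min(pop, key=tour_length)


best_tour = solve()
print(best_tour, round(tour_length(best_tour), 2))
```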

By leveraging genetic algorithms, machine learning practitioners can enhance model robustness, minimize overfitting, and achieve stronger generalization across diverse datasets. According to ABI Research, the global Artificial Intelligence (AI) software market was valued at approximately USD 122 billion in 2024 and is projected to grow at a compound annual growth rate (CAGR) of 25%, reaching nearly USD 467 billion by 2030.

Genetic Algorithms vs Other Optimization Techniques

While genetic algorithms are powerful, they are not the only optimization method available in machine learning. Here’s how GAs compare with other popular techniques:

1. Genetic Algorithms vs Gradient Descent

- Gradient descent is efficient for differentiable functions but struggles with complex landscapes and local minima.

- GAs, being derivative-free, handle non-differentiable, discontinuous, or highly rugged search spaces more effectively.
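To illustrate the derivative-free point, here is a sketch assuming a hypothetical piecewise-constant "staircase" objective. Its numerical gradient is zero almost everywhere, so gradient descent started at x = -15.3 would never move. A GA only compares objective values, so it still has a signal. The GA shown uses arithmetic (averaging) crossover plus Gaussian mutation, one choice among many.

```python
import math
import random


def staircase(x):
    """Piecewise-constant objective (best value 0 on [7, 8)); derivative is 0 wherever it exists."""
    return -abs(math.floor(x) - 7)


def numeric_gradient(f, x, h=1e-6):
    """Central finite-difference estimate of f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)


def ga_maximize(f, lo, hi, pop_size=20, generations=50, sigma=2.0, seed=0):
    rng = random.Random(seed)
    pop = [rng.uniform(lo, hi) for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=f, reverse=True)
        parents = pop[: pop_size // 2]
        # Arithmetic crossover (average of two parents) plus Gaussian mutation.
        children = [(rng.choice(parents) + rng.choice(parents)) / 2 + rng.gauss(0, sigma)
                    for _ in range(pop_size - len(parents))]
        pop = parents + [min(hi, max(lo, c)) for c in children]
    return max(pop, key=f)


x0 = -15.3
print(numeric_gradient(staircase, x0))  # 0.0: gradient descent would not move from x0
best_x = ga_maximize(staircase, -20.0, 20.0)
print(best_x, staircase(best_x))
```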

2. Genetic Algorithms vs Grid/Random Search

- Grid search systematically explores parameter space but is computationally expensive.

- Random search is faster but may miss optimal solutions.

- GAs balance exploration and exploitation, often achieving better results in fewer evaluations.
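A fair way to compare a GA with random search is to give both the same number of fitness evaluations. The following is a minimal sketch of such a harness, assuming the toy OneMax objective and a budget of 1,000 evaluations. OneMax is an easy problem for GAs, so any result from this harness should not be read as a general conclusion about GAs versus random search.

```python
import random

N_BITS, BUDGET = 40, 1000


def make_counter():
    """Wrap the toy OneMax objective so every evaluation is counted."""
    calls = {"n": 0}

    def fitness(bits):
        calls["n"] += 1
        return sum(bits)

    return fitness, calls


def random_search(seed=0):
    rng = random.Random(seed)
    fitness, calls = make_counter()
    best = 0
    while calls["n"] < BUDGET:
        best = max(best, fitness([rng.randint(0, 1) for _ in range(N_BITS)]))
    return best, calls["n"]


def genetic_algorithm(seed=0, pop_size=20):
    rng = random.Random(seed)
    fitness, calls = make_counter()
    initial = [[rng.randint(0, 1) for _ in range(N_BITS)] for _ in range(pop_size)]
    scored = [(fitness(b), b) for b in initial]
    while calls["n"] + pop_size <= BUDGET:          # never exceed the evaluation budget
        scored.sort(key=lambda t: t[0], reverse=True)
        parents = [b for _, b in scored[: pop_size // 2]]
        children = []
        for _ in range(pop_size):
            p1, p2 = rng.sample(parents, 2)
            cut = rng.randint(1, N_BITS - 1)        # single-point crossover
            child = [1 - g if rng.random() < 1 / N_BITS else g for g in p1[:cut] + p2[cut:]]
            children.append(child)
        scored = scored[: pop_size // 2] + [(fitness(c), c) for c in children]
    return max(s for s, _ in scored), calls["n"]


print("random search:", random_search())
print("genetic algorithm:", genetic_algorithm())
```

Counting evaluations rather than wall-clock time matters in ML, because each evaluation may mean training a model.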

3. Genetic Algorithms vs Particle Swarm Optimization (PSO)

- PSO uses swarm intelligence, focusing on collective behavior, while GAs rely on crossover and mutation for diversity.

- GAs generally provide better global exploration, whereas PSO often converges faster.

4. Genetic Algorithms vs Simulated Annealing

- Simulated Annealing explores solution space using probabilistic acceptance of worse solutions.

- GAs maintain a population of solutions, which often results in greater diversity and adaptability.


Case Studies and Real-World Examples of Genetic Algorithms

To illustrate the effectiveness of genetic algorithms in machine learning, let’s look at a few real-world applications:

1. Stock Market Prediction

Genetic algorithms have been widely adopted in financial forecasting and algorithmic trading. GA-based models improved portfolio returns by 12–15% compared to traditional optimization methods. Similarly, in algorithmic trading, GAs have been shown to increase prediction accuracy of stock price movements by up to 20% when combined with machine learning classifiers.

2. Medical Diagnosis

In healthcare, GAs play a crucial role in disease prediction and treatment optimization. GA-based feature selection improved the accuracy of cancer classification models by 8–12% compared to conventional techniques. In cardiology, genetic algorithms have been used to design diagnostic models that achieved up to 95% accuracy in detecting heart disorders, outperforming baseline models by a significant margin.

3. Robotics

Robotics research often uses GAs for navigation and adaptive learning. For instance, experiments conducted at MIT showed that robots trained using GA-optimized strategies were able to reduce navigation errors by 30% compared to rule-based systems. In evolutionary robotics, GAs helped develop motion control systems that improved task efficiency by 25% in dynamic and uncertain environments.

4. Natural Language Processing (NLP)

Genetic algorithms are increasingly being applied to text mining and classification tasks. GA-based feature selection improved text classification accuracy by 10–15% compared to standard dimensionality reduction methods. In large-scale search systems, GAs helped optimize keyword extraction, leading to a 20% boost in search relevance and user satisfaction.

5. Engineering and Design Optimization

Engineering fields have benefited enormously from GAs for structural design and resource optimization. For example, in aerospace engineering, GA-based optimization reduced fuel consumption in aircraft design by nearly 6%, a figure highly significant at industry scale. Similarly, GA-driven circuit design processes demonstrated 20–30% faster optimization times compared to gradient-based approaches.

Genetic algorithms are a powerful tool in the machine learning toolkit. Inspired by natural evolution, they provide a way to solve complex optimization problems where traditional methods often struggle. From feature selection and hyperparameter tuning to neural network design and reinforcement learning, their applications are vast and impactful. For learners and professionals looking to master such advanced concepts, a machine learning course in Bangalore can provide the right exposure to practical case studies and real-world projects that highlight how genetic algorithms are applied in industry.

Bangalore, known as the ‘Silicon Valley of India,’ is emerging as a global hub for artificial intelligence. Driven by its strong IT workforce, research institutions, and startup culture, the city is leading AI adoption. Thousands of professionals are also upskilling through specialized Artificial Intelligence Courses in Bangalore.

DataMites Artificial Intelligence Institute in Bangalore stands out as a top choice for aspiring AI professionals. With its well-structured curriculum, globally recognized certifications, practical projects, flexible learning options, and strong placement assistance, DataMites equips learners with the skills needed to thrive in the AI industry.