Hyperparameter Tuning: How to Improve Your Machine Learning Model

Hyperparameter tuning is a critical process in optimizing machine learning and deep learning models for better performance.

Have you ever built a machine learning model that almost performed perfectly, but just couldn't quite hit the mark? The issue might not be your data or even your algorithm. The secret sauce to unlocking peak model performance often lies in something many overlook: hyperparameter tuning. Just like adjusting the settings on a camera to capture the perfect shot, fine-tuning your model’s hyperparameters can dramatically improve its accuracy, efficiency, and ability to generalize. In this post, we’ll dive into the art and science of hyperparameter tuning and show you how to take your models from good to exceptional.

What Are Hyperparameters?

In machine learning, hyperparameters are the external configuration values that you set before training a model and that control how the learning process unfolds. Unlike parameters (like weights in a neural network), which are learned from data during training, hyperparameters are chosen by the practitioner or by a tuning procedure and stay fixed during a training run unless a schedule explicitly changes them.
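To make the distinction concrete, here is a minimal sketch using scikit-learn. The synthetic dataset and the specific values (`C=0.5`, `max_iter=500`) are assumptions chosen only for illustration: `C` is a hyperparameter we pick before training, while `coef_` and `intercept_` are parameters the model learns in `fit()`.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic data, used only for illustration.
X, y = make_classification(n_samples=200, n_features=5, random_state=0)

# Hyperparameters: chosen by us before training starts.
model = LogisticRegression(C=0.5, max_iter=500)

# Parameters: learned from the data during fit().
model.fit(X, y)

print("hyperparameter C:", model.get_params()["C"])
print("learned weights shape:", model.coef_.shape)
print("learned intercept shape:", model.intercept_.shape)
```

Changing `C` and refitting gives a different set of learned weights, which is exactly why tuning it matters.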

Common Hyperparameter Tuning Techniques

In this rapidly advancing field, selecting the right hyperparameter tuning method can be the key to transforming a mediocre model into a high-performing one. Below are some of the most widely used techniques for hyperparameter tuning:

1. Grid Search

Grid search is the most straightforward method. You specify a finite set of candidate values for each hyperparameter, and it evaluates every combination in that grid.

- Pros: Simple and comprehensive

- Cons: Computationally expensive, especially with many parameters
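A grid search along these lines can be sketched with scikit-learn's `GridSearchCV`. The dataset, the SVM model and the grid values below are illustrative assumptions, not recommendations.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

# Synthetic data, used only for illustration.
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

# Illustrative grid: 3 values of C x 3 values of gamma = 9 combinations.
param_grid = {"C": [0.1, 1, 10], "gamma": [0.01, 0.1, 1]}

# Every combination is scored with 5-fold cross-validation.
search = GridSearchCV(SVC(kernel="rbf"), param_grid, cv=5, scoring="accuracy")
search.fit(X, y)

print("combinations tried:", len(search.cv_results_["params"]))
print("best params:", search.best_params_)
print("best CV accuracy:", round(search.best_score_, 3))
```

With 9 combinations and 5 folds this already trains 45 models, which hints at why the cost climbs quickly as the grid grows.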

2. Random Search

Instead of searching every combination, random search picks random combinations of hyperparameters.

- Pros: Faster and often just as effective as grid search

- Cons: May miss the optimal settings
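As a rough sketch, random search can be run with scikit-learn's `RandomizedSearchCV`. The distributions, the budget of 15 trials and the synthetic dataset are assumptions made for the example.

```python
from scipy.stats import loguniform
from sklearn.datasets import make_classification
from sklearn.model_selection import RandomizedSearchCV
from sklearn.svm import SVC

# Synthetic data, used only for illustration.
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

# Distributions to sample from, instead of fixed lists of values.
param_distributions = {
    "C": loguniform(1e-2, 1e2),
    "gamma": loguniform(1e-3, 1e0),
}

# n_iter fixes the budget: only 15 random combinations are evaluated.
search = RandomizedSearchCV(
    SVC(kernel="rbf"),
    param_distributions,
    n_iter=15,
    cv=5,
    scoring="accuracy",
    random_state=0,
)
search.fit(X, y)

print("combinations tried:", len(search.cv_results_["params"]))
print("best params:", search.best_params_)
print("best CV accuracy:", round(search.best_score_, 3))
```

The budget is set directly through `n_iter`, so adding another hyperparameter does not multiply the number of trials.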

3. Bayesian Optimization

Bayesian optimization builds a probabilistic model to predict which combination of hyperparameters is likely to yield the best results.

- Popular tools: Hyperopt, Optuna, Spearmint

- Pros: Typically needs fewer trials than grid or random search, which matters most when each trial is expensive

- Cons: Requires more setup and understanding
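To show the idea rather than any particular library, here is a hand-rolled sketch that tunes a single hyperparameter (`C` of an SVM, searched on a log scale). It assumes a Gaussian process from scikit-learn as the probabilistic model and expected improvement as the rule for picking the next trial. Tools such as Hyperopt or Optuna use their own surrogate models and APIs, so treat this as an illustration of the loop, not of how those tools work internally. The helper names `objective` and `expected_improvement` are made up for this example.

```python
import numpy as np
from scipy.stats import norm
from sklearn.datasets import make_classification
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import Matern
from sklearn.model_selection import cross_val_score
from sklearn.svm import SVC

# Synthetic data, used only for illustration.
X, y = make_classification(n_samples=300, n_features=10, random_state=0)


def objective(log_c):
    """Mean 3-fold CV accuracy of an SVC with C = 10 ** log_c."""
    model = SVC(C=10.0 ** log_c, kernel="rbf", gamma="scale")
    return cross_val_score(model, X, y, cv=3).mean()


def expected_improvement(mu, sigma, best, xi=0.01):
    """Expected improvement over the best score so far (maximization)."""
    sigma = np.maximum(sigma, 1e-9)  # avoid division by zero
    z = (mu - best - xi) / sigma
    return (mu - best - xi) * norm.cdf(z) + sigma * norm.pdf(z)


rng = np.random.default_rng(0)
candidates = np.linspace(-3, 3, 200).reshape(-1, 1)  # grid over log10(C)

# Start with a few random evaluations.
tried = [float(v) for v in rng.uniform(-3, 3, size=3)]
scores = [objective(v) for v in tried]

for _ in range(10):
    # Fit the surrogate to all (hyperparameter, score) pairs seen so far.
    gp = GaussianProcessRegressor(kernel=Matern(nu=2.5), alpha=1e-4, normalize_y=True)
    gp.fit(np.array(tried).reshape(-1, 1), np.array(scores))

    # Evaluate next the candidate with the highest expected improvement.
    mu, sigma = gp.predict(candidates, return_std=True)
    ei = expected_improvement(mu, sigma, max(scores))
    next_log_c = float(candidates[int(np.argmax(ei)), 0])

    tried.append(next_log_c)
    scores.append(objective(next_log_c))

best_index = int(np.argmax(scores))
print("best C:", 10.0 ** tried[best_index])
print("best CV accuracy:", round(scores[best_index], 3))
```

Each new trial is chosen using everything learned from earlier trials, which is the key difference from grid and random search.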

4. Gradient-based Optimization

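Gradient-based optimization treats the validation loss as a function of the hyperparameters and follows its gradient. It is mainly used in deep learning frameworks, where continuous hyperparameters such as learning rate or momentum can be adjusted using gradient information.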

- Pros: Can converge quickly to optimal values

- Cons: Limited to continuous hyperparameters for which a gradient of the validation loss can be computed
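A small, self-contained sketch of the idea, under simplifying assumptions: instead of a deep network, it uses ridge regression, where the weights have a closed form, so the gradient of the validation loss with respect to the regularization strength can be written out by hand. Deep learning frameworks would usually obtain such gradients through automatic differentiation. The data, the step size of 0.1 and the helper names `fit_ridge` and `val_loss_and_grad` are all made up for this example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic regression data, split into training and validation parts.
X = rng.normal(size=(120, 20))
true_w = rng.normal(size=20)
y = X @ true_w + rng.normal(scale=2.0, size=120)
X_tr, y_tr, X_val, y_val = X[:40], y[:40], X[40:], y[40:]


def fit_ridge(lam):
    """Closed-form ridge weights for regularization strength lam."""
    A = X_tr.T @ X_tr + lam * np.eye(X_tr.shape[1])
    return np.linalg.solve(A, X_tr.T @ y_tr), A


def val_loss_and_grad(theta):
    """Validation MSE and its derivative w.r.t. theta, with lam = exp(theta)."""
    lam = np.exp(theta)
    w, A = fit_ridge(lam)
    residual = X_val @ w - y_val
    loss = np.mean(residual ** 2)
    grad_w = 2.0 * X_val.T @ residual / len(y_val)  # d(loss)/dw
    dw_dlam = -np.linalg.solve(A, w)                # dw/d(lam)
    # Chain rule; d(lam)/d(theta) = lam because lam = exp(theta).
    return loss, float(grad_w @ dw_dlam) * lam


theta = 0.0  # start at lam = 1
initial_loss, _ = val_loss_and_grad(theta)

for _ in range(200):
    _, grad = val_loss_and_grad(theta)
    theta -= 0.1 * grad  # plain gradient descent on the hyperparameter

final_loss, _ = val_loss_and_grad(theta)
print("initial validation MSE:", round(initial_loss, 4))
print("tuned lam:", round(float(np.exp(theta)), 4))
print("final validation MSE:", round(final_loss, 4))
```

Optimizing `theta = log(lam)` rather than `lam` itself keeps the regularization strength positive without adding a constraint.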

5. Automated Machine Learning (AutoML)

AutoML platforms like Google AutoML, H2O.ai, or Auto-sklearn automate the hyperparameter tuning process.

- Pros: User-friendly, saves time

- Cons: May lack customization for advanced users

Best Practices for Hyperparameter Tuning

Hyperparameter tuning can significantly enhance a machine learning model's performance, but without a clear strategy, it can also become resource-intensive and inefficient. Here are some best practices to follow for effective hyperparameter tuning:

- Use Cross-Validation: Always validate model performance using k-fold cross-validation to ensure stability and reliability.

- Start with Defaults: Begin with standard settings to get a baseline, then fine-tune one hyperparameter at a time.

- Limit the Search Space: Narrow down the ranges of hyperparameters based on prior knowledge or experiments.

- Monitor Overfitting: Use early stopping or regularization techniques to prevent the model from memorizing the training data.

- Use Learning Curves: Plot training and validation error to visually inspect how the model is learning.

- Track Experiments: Use tools like MLflow or Weights & Biases to keep records of your tuning experiments and results.
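The first few practices can be sketched in a handful of lines with scikit-learn: score a default-settings baseline with k-fold cross-validation, then compute the numbers behind a learning curve. The random forest model and synthetic data here are assumptions for illustration only.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score, learning_curve

# Synthetic data, used only for illustration.
X, y = make_classification(n_samples=400, n_features=10, random_state=0)

# Baseline: default hyperparameters, scored with 5-fold cross-validation.
baseline = RandomForestClassifier(random_state=0)
cv_scores = cross_val_score(baseline, X, y, cv=5)
print("baseline accuracy: %.3f +/- %.3f" % (cv_scores.mean(), cv_scores.std()))

# Learning curve: training vs. validation score as the training set grows.
sizes, train_scores, val_scores = learning_curve(
    baseline, X, y, cv=5, train_sizes=np.linspace(0.2, 1.0, 5)
)
for n, tr, va in zip(sizes, train_scores.mean(axis=1), val_scores.mean(axis=1)):
    print(f"n={int(n):4d}  train={tr:.3f}  validation={va:.3f}")
```

A large, persistent gap between the training and validation columns is a typical sign of overfitting, and it gives you a baseline to compare every tuned configuration against.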

By implementing these best practices, you can streamline your hyperparameter tuning process, making it more systematic, efficient, and impactful, ultimately resulting in more accurate and reliable machine learning models. According to Markets and Markets, the global AI chatbot market is projected to grow at a CAGR of 23.3% from 2023 to 2028, highlighting the rising demand for intelligent and optimized AI systems.

Read these below articles:

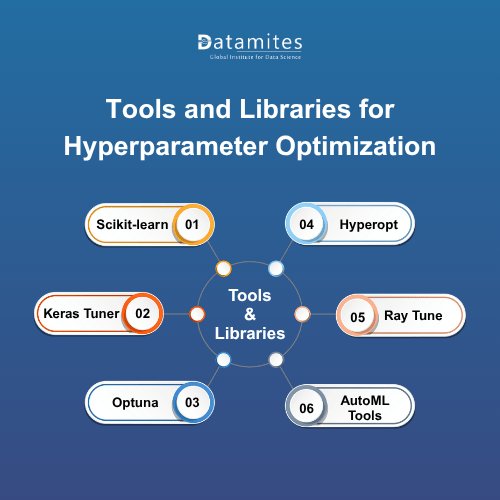

Tools and Libraries for Hyperparameter Optimization

Hyperparameter tuning can be time-consuming and computationally intensive, but several powerful tools and libraries are available to simplify and automate the process. These tools help streamline hyperparameter optimization, improve model performance, and accelerate development workflows.

1. Scikit-learn

- GridSearchCV, RandomizedSearchCV

- Easy to use for small and medium datasets

2. Keras Tuner

- Designed for deep learning models using TensorFlow/Keras

3. Optuna

- Modern optimization library with support for pruning and advanced search

4. Hyperopt

- Powerful and flexible library for Bayesian optimization

5. Ray Tune

- Distributed hyperparameter tuning for large-scale projects

6. AutoML Tools

- Google AutoML, H2O.ai, Auto-sklearn

- Useful for automated pipeline tuning

Challenges and Pitfalls in Hyperparameter Tuning

While hyperparameter tuning is essential for optimizing machine learning models, it comes with several challenges and pitfalls that can hinder performance if not carefully managed. Understanding these issues can help practitioners avoid common mistakes and make smarter optimization decisions.

1. High Computational Cost

- Problem: Tuning can be extremely resource-intensive, especially with large datasets or complex models like deep neural networks.

- Impact: Long training times, high memory usage, and increased cloud costs.

- Solution: Use efficient search strategies like Random Search or Bayesian Optimization, and stop unpromising trials early to reduce unnecessary computations.

2. Curse of Dimensionality

- Problem: As the number of hyperparameters increases, the search space grows exponentially.

- Impact: It becomes harder to find the optimal combination, and the tuning process may miss better-performing configurations.

- Solution: Start with a smaller, relevant subset of hyperparameters and use domain knowledge to constrain the search space.
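The growth is easy to see with a little arithmetic. The hyperparameter names and the number of candidate values per hyperparameter below are hypothetical, picked only to show how the size of a full grid multiplies.

```python
from math import prod

# Hypothetical grid: number of candidate values per hyperparameter.
values_per_hyperparameter = {
    "learning_rate": 5,
    "batch_size": 4,
    "num_layers": 3,
    "dropout": 5,
    "weight_decay": 5,
}

counts = list(values_per_hyperparameter.values())
for k in range(1, len(counts) + 1):
    # Size of the full grid over the first k hyperparameters.
    print(f"{k} hyperparameter(s): {prod(counts[:k])} combinations")
```

Going from one hyperparameter to five takes this grid from 5 to 1,500 combinations, and each combination is a full training run (times the number of cross-validation folds).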

3. Overfitting to the Validation Set

- Problem: Excessive tuning may lead the model to perform well on the validation data but poorly on unseen data.

- Impact: Reduced generalization and unreliable real-world performance.

- Solution: Use cross-validation and maintain a separate test set that remains untouched during tuning.
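One way to set this up, sketched with scikit-learn: split off the test set first, tune with cross-validation on the remaining data only, and score the test set a single time at the end. The model, grid and split ratio are illustrative assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic data, used only for illustration.
X, y = make_classification(n_samples=500, n_features=10, random_state=0)

# Hold out a test set before any tuning happens.
X_dev, X_test, y_dev, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# Tune with cross-validation on the development data only.
search = GridSearchCV(
    LogisticRegression(max_iter=1000), {"C": [0.01, 0.1, 1, 10]}, cv=5
)
search.fit(X_dev, y_dev)

# Touch the test set exactly once, after tuning is finished.
test_accuracy = search.score(X_test, y_test)
print("best C:", search.best_params_["C"])
print("CV accuracy during tuning:", round(search.best_score_, 3))
print("held-out test accuracy:", round(test_accuracy, 3))
```

If the test accuracy is clearly lower than the cross-validation score, that gap is a hint that tuning has started to fit the validation folds.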

4. Improper Hyperparameter Ranges

- Problem: Choosing hyperparameter ranges that are too narrow or unrealistic can limit optimization.

- Impact: Misses better combinations and leads to suboptimal models.

- Solution: Perform initial exploratory runs to identify promising value ranges, and use log scales for parameters like learning rate or regularization strength.
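To see why log scales help, compare sampling a learning rate uniformly on a linear scale with sampling uniformly in the exponent. The range of 1e-4 to 1 is an assumption for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
low, high = 1e-4, 1.0  # illustrative learning-rate range

# Uniform on a linear scale: almost every sample lands near the top of the range.
linear = rng.uniform(low, high, size=10_000)

# Uniform in log10 space: each order of magnitude is sampled equally often.
log_scale = 10 ** rng.uniform(np.log10(low), np.log10(high), size=10_000)

print("share below 1e-2, linear:", np.mean(linear < 1e-2))
print("share below 1e-2, log:   ", np.mean(log_scale < 1e-2))
```

On the linear scale only about 1% of the samples fall below 1e-2, so small learning rates are barely explored. On the log scale about half of them do.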

5. Non-Deterministic Results

- Problem: Some models or environments introduce randomness in training, leading to different outcomes with the same hyperparameters.

- Impact: Inconsistent results and misleading conclusions.

- Solution: Set random seeds so that runs are reproducible, and repeat experiments with several different seeds to see how much the results vary.
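A minimal sketch of both halves of that advice, assuming a random forest on synthetic data: fixing the seed makes a run repeatable, and repeating the same configuration over several seeds shows how much of a score difference is just noise. The helper `run` is made up for this example.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Synthetic data, used only for illustration.
X, y = make_classification(n_samples=400, n_features=10, random_state=0)
X_tr, X_val, y_tr, y_val = train_test_split(X, y, test_size=0.25, random_state=0)


def run(seed):
    """Train with fixed hyperparameters and the given seed; return validation accuracy."""
    model = RandomForestClassifier(n_estimators=50, max_depth=5, random_state=seed)
    return model.fit(X_tr, y_tr).score(X_val, y_val)


# Same hyperparameters, five different seeds.
scores = np.array([run(seed) for seed in range(5)])
print("per-seed accuracy:", np.round(scores, 3))
print("mean +/- std: %.3f +/- %.3f" % (scores.mean(), scores.std()))

# Same seed twice gives the same result.
print("reproducible with a fixed seed:", run(0) == run(0))
```

When comparing two hyperparameter settings, a difference smaller than the spread across seeds is usually not worth acting on.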

Hyperparameter tuning is a crucial step in developing high-performing machine learning models. Whether you're using a basic logistic regression model or a complex deep learning neural network, selecting the right combination of hyperparameters can greatly improve accuracy, efficiency, and generalization. In fact, with proper tuning and optimization, AI and ML algorithms are now capable of detecting fraudulent transactions with up to 99% accuracy.