What is a Convolutional Neural Network?

A Convolutional Neural Network (CNN) is a specialized type of deep learning model designed primarily for processing and analyzing visual data such as images and videos.

Artificial Intelligence (AI) is transforming how machines see, understand, and interpret the world around them. One of the most powerful tools behind modern computer vision applications is the Convolutional Neural Network (CNN). From detecting faces in photos to powering autonomous vehicles, CNNs have revolutionized how computers process images and visual data. But what exactly is a CNN, how does it work, and why is it so effective? Let’s explore.

What is a Convolutional Neural Network?

A Convolutional Neural Network is a type of deep neural network specifically designed to handle grid-like data, such as images represented in pixel grids. Unlike traditional neural networks, which require manual feature extraction, CNNs automatically detect important features like edges, textures, and shapes through a process called convolution.

For example:

- In facial recognition, a CNN can detect facial features like eyes, nose, and mouth.

- In self-driving cars, it can identify traffic signs, pedestrians, and road lanes.

By learning hierarchical patterns from simple edges in early layers to complex shapes in deeper layers CNNs make image classification and object detection more efficient and accurate.

Refer these below articles:

- What is Artificial General Intelligence (AGI)?

- Human Intelligence vs Artificial Intelligence: Key Differences and Insights

- What is Semantic Networks in Artificial Intelligence

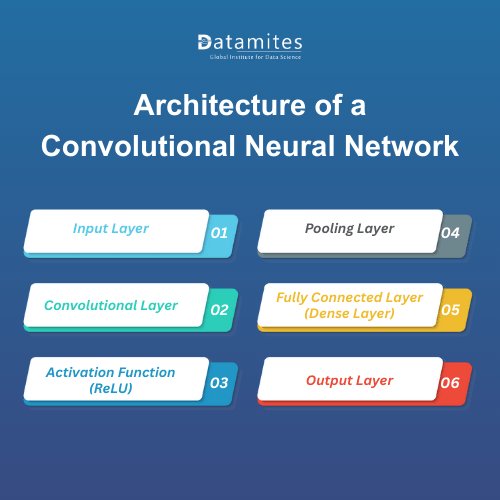

Architecture of a Convolutional Neural Network

The architecture of a CNN consists of multiple layers that work together to extract and learn features from input data. The common components include:

- Input Layer: Accepts image data in the form of pixel values, typically represented as height × width × channels (e.g., 224×224×3 for RGB images).

- Convolutional Layer: Applies filters (kernels) that slide over the image to detect features such as edges, colors, or patterns.

- Activation Function (ReLU): Introduces non-linearity to the network, allowing it to learn complex patterns.

- Pooling Layer: Reduces the spatial size of the feature maps (e.g., Max Pooling) to decrease computational load and control overfitting.

- Fully Connected Layer (Dense Layer): Combines features learned from previous layers to make predictions.

- Output Layer: Produces the final prediction, often using a Softmax activation for classification tasks.

Key Concepts in Convolutional Neural Network

To build a strong understanding of Convolutional Neural Networks, it’s crucial to know these core concepts that define how CNNs detect and learn patterns in data:

- Filters (Kernels): Filters are small, trainable matrices that move across an image to detect patterns such as edges, corners, textures, or even complex shapes in later layers. For example, in the first layer, a filter might detect vertical edges in a photograph, while deeper layers could recognize objects like eyes, wheels, or text.

- Stride: The stride determines how many pixels the filter shifts after each operation. A stride of 1 captures fine details by scanning every pixel, while a stride of 2 or more reduces the output size and speeds up computation often used when working with large images.

- Padding: Padding involves adding extra pixels (usually zeros) around the image before convolution. This helps control the spatial size of the output. For instance, “same padding” keeps the output dimensions equal to the input size, preserving edge information, while “valid padding” reduces the image size but avoids artificial pixels.

- Feature Maps: A feature map is the result after a filter has been applied to an image. It highlights the presence of specific features in particular locations. For example, if a filter is trained to detect horizontal lines, its feature map will show strong activations where such lines exist in the image.

- Receptive Field: The receptive field refers to the region of the input image that influences a single neuron’s output in a CNN layer. As we move deeper into the network, receptive fields grow larger, allowing neurons to detect more complex and abstract features, such as shapes, objects, or even scenes.

According to Markets and Markets The Chatbot Market size was valued at USD 5.4 billion in 2023 and is expected to grow at a CAGR of 23.3% from 2023 to 2023. The revenue forecast for 2028 is projected to reach $15.5 billion.

How Convolutional Neural Network Learn

Convolutional Neural Networks learn by combining two core techniques: backpropagation and gradient descent optimization. This allows them to automatically improve their ability to detect and classify patterns over time.

Here’s the step-by-step process:

- Forward Pass: The input image moves through the CNN’s layers, generating an output (prediction).

- Loss Calculation: A loss function (e.g., cross-entropy loss) measures how far the prediction is from the actual label.

- Backpropagation: The network computes gradients how much each filter’s weights contributed to the error by propagating the loss backward through the layers.

- Weight Update: Using gradient descent, the network adjusts the filter weights to reduce the loss, moving closer to the correct prediction.

- Iterative Training: This process repeats over many epochs, with the CNN gradually improving its accuracy on the training data.

The main advantage of CNNs lies in their ability to automatically learn features. They can identify the most important patterns ranging from basic edges to intricate object structures without relying on manual feature engineering. With global AI investments expected to reach $200 billion by 2025, much of the focus will be on AI model innovation, supporting infrastructure, and advanced application software.

Recent Advancements in Convolutional Neural Network

The landscape of Convolutional Neural Networks (CNNs) continues to evolve rapidly, with exciting innovations emerging in 2024 and 2025. These advancements are powering smarter, faster, and more accurate AI systems across industries:

- Capsule-ConvKAN: A novel hybrid model designed for medical image classification, Capsule-ConvKAN merges the spatial hierarchy learning of Capsule Networks with the interpretability of the Convolutional Kolmogorov-Arnold Network (ConvKAN). In histopathological image tests, it achieved a remarkable 91.21% accuracy, outperforming traditional CNNs in challenging biomedical data scenarios.

- Edge Attention Module (EAM) for CNNs: Researchers introduced an Edge Attention Module, incorporating a Max-Min pooling layer to prioritize edge features crucial for object classification. When integrated into popular CNNs, this module surpassed recent models such as Pooling-based ViT (PiT), CBAM, and ConvNeXt achieving up to 95.5% accuracy on Caltech-101 and 86% on Caltech-256 datasets.

- KAN-Mixers: Building on the MLP-Mixer and ConvKAN ideas, KAN-Mixers leverage Kolmogorov-Arnold Network (KAN) architectures in a mixer-style framework. They outperformed MLP, MLP-Mixer, and KAN alone on datasets like Fashion-MNIST and CIFAR-10, demonstrating the power of hybrid model design.

- MobileNet-V4: The latest iteration of the MobileNet family, MobileNet-V4 introduces a Universal Inverted Bottleneck and multi-query attention, blending efficient convolution with transformer-inspired mechanisms offering high performance while remaining lightweight for mobile and edge applications.

- Hybrid CNN–Transformer Architectures: Hybrid designs like Next-ViT combine convolutional blocks with transformer modules, delivering superior accuracy-latency trade-offs for tasks such as object detection and segmentation. Next-ViT notably outperformed ResNets and other hybrid models on COCO and ADE20K datasets under similar latency constraints.

Read these below articles:

A Convolutional Neural Network is a groundbreaking deep learning architecture that has revolutionized how machines understand and process visual information. With its ability to automatically extract features, reduce computational complexity, and deliver high accuracy, CNNs have become a cornerstone of AI applications across industries. From image classification and object detection to medical diagnosis and self-driving technology, CNNs continue to push the boundaries of what machines can achieve in visual recognition.

Hyderabad has quickly established itself as one of India’s leading technology hubs, fostering innovation, thriving IT services, and a vibrant startup ecosystem. As artificial intelligence gains momentum in sectors like healthcare, finance, retail, and telecommunications, the city is witnessing a growing need for qualified AI professionals. Artificial Intelligence institute in Hyderabad provides learners with cutting-edge skills, hands-on experience, and industry-relevant expertise to excel in this fast-evolving field.

DataMites is a prominent training provider in the fields of Artificial Intelligence, Machine Learning, Data Science, and other emerging technologies. Students benefit from internationally recognized certifications accredited by IABAC and NASSCOM FutureSkills, along with extensive career support that covers resume preparation, mock interview sessions, and strong ties with industry networks. DataMites Artificial Intelligence Institute in Bangalore has training centers in Kudlu Gate, BTM Layout, and Marathahalli, offering both online and classroom-based learning to cater to different learning preferences.