What is Transfer Learning in Machine Learning?

Discover what transfer learning in machine learning is, how it works, and its applications in AI. Learn how pre-trained models can save time and improve accuracy in your ML projects.

Machine learning has changed how industries from healthcare to finance solve problems. However, training models from scratch often requires large amounts of labeled data and computing power. This challenge led to the rise of transfer learning, a technique that lets a new model reuse knowledge from a previously trained one. Transfer learning saves time and often improves accuracy, which has made it one of the most widely adopted approaches in modern artificial intelligence.

Definition of Transfer Learning

Transfer learning in machine learning is the process of reusing a pre-trained model on a new but related task. Instead of starting from zero, the model benefits from prior learning, such as patterns, features, or language understanding, gained during training on large datasets. For example, a model trained on millions of images can be adapted to classify medical images with minimal additional training.

This method differs from traditional machine learning, where each new task requires training a fresh model from scratch. Reusing models matters more as AI adoption grows: according to Grand View Research, the global artificial intelligence market was valued at USD 279.22 billion in 2024 and is expected to reach USD 1,811.74 billion by 2030, growing at a CAGR of 35.9% between 2025 and 2030.

Why Transfer Learning is Important

Two of the biggest obstacles in machine learning are the scarcity of large, high-quality datasets and the computing power needed to train models from scratch. Transfer learning addresses both, which makes AI more practical and widely applicable. The key reasons it matters are:

1. Saves Time and Resources

Training deep learning models like CNNs (Convolutional Neural Networks) or transformer-based models from the ground up often requires weeks of computation, large datasets, and high-end GPUs. With transfer learning, developers can reuse pre-trained models and fine-tune them for their own tasks. This not only accelerates development but also reduces infrastructure costs, making AI solutions more accessible for startups, researchers, and enterprises.

2. Works Well with Limited Data

In many industries, obtaining large labeled datasets is extremely difficult, time-consuming, and expensive. For example, labeling thousands of medical images requires domain expertise from radiologists. Transfer learning addresses this issue by using knowledge gained from a larger dataset (like ImageNet for images or large text corpora for NLP) and applying it to a smaller dataset. This allows high-performing models to be built even with limited data availability.

3. Boosts Performance and Accuracy

By leveraging prior knowledge, transfer learning often produces more accurate results than models trained from scratch on small datasets. For instance, in natural language processing (NLP), pre-trained language models such as BERT, GPT, and RoBERTa have achieved state-of-the-art results on many tasks once fine-tuned for them. Similarly, in computer vision, transfer learning often improves results in tasks like object detection, face recognition, and medical diagnosis.

4. Real-World Relevance Across Industries

Transfer learning is highly relevant in industries where labeled data is scarce or expensive to obtain. For example:

- Healthcare: Identifying rare diseases using a limited number of diagnostic images is a powerful application of AI. Today, nearly 60% of healthcare organizations have already incorporated AI solutions into their operations.

- Finance: In fraud detection, where fraudulent cases are far fewer than legitimate ones, ML algorithms have proven highly effective, achieving up to 99% accuracy in identifying suspicious transactions.

- Retail and E-commerce: Personalized product recommendations with limited behavioral data.

- Autonomous Vehicles: Adapting models trained in one driving environment, such as urban streets, to perform effectively in new conditions like highways or rural areas is a key use of transfer learning. By 2030, it is projected that one out of every ten vehicles on the road will be autonomous.

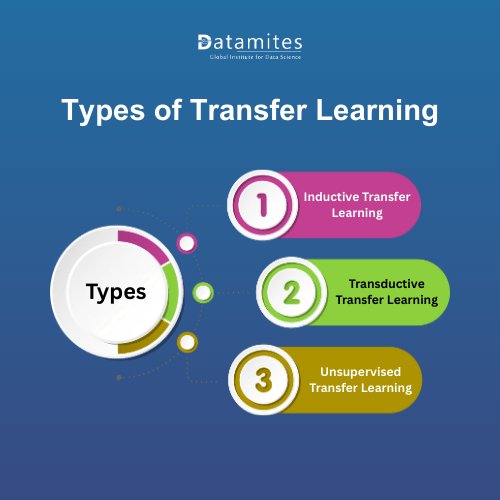

Types of Transfer Learning

There are different types of transfer learning depending on the relationship between tasks and domains:

Inductive Transfer Learning

- The source and target tasks differ, whether or not the domains are the same, and at least some labeled data is available for the target task.

- Example: Using a model trained to recognize animals and adapting it to recognize vehicles.

Transductive Transfer Learning

- The tasks are the same, but the domains differ.

- Example: A sentiment analysis model trained on English reviews being applied to French reviews.

Unsupervised Transfer Learning

- Applied in cases without labeled data, where the knowledge from one unsupervised task aids another.

- Example: Using word embeddings trained on one large dataset to enhance clustering tasks on another.

How Transfer Learning Works

The essence of transfer learning is that knowledge gained while solving one problem can be reused to solve a different but related problem. Instead of starting with random weights, the model starts from pre-trained weights that already encode useful patterns such as shapes, colors, language structures, or audio features. This can substantially reduce the amount of training data and computation needed.
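The effect of starting from pre-trained weights can be illustrated with a deliberately tiny example. The sketch below is an assumption-laden toy, not a real workflow: the "model" is a single line `y = w*x + b`, the source and target tasks are two similar lines chosen for illustration, and the `train` helper is hypothetical. It compares how many gradient-descent steps the target task needs from a zero start versus from the weights learned on the source task.

```python
def train(w, b, data, lr=0.1, tol=1e-3, max_steps=10_000):
    """Fit y = w*x + b by gradient descent on mean squared error.

    Returns the fitted (w, b) and the number of update steps taken
    before both gradients fell below `tol`.
    """
    n = len(data)
    for step in range(max_steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        if abs(grad_w) < tol and abs(grad_b) < tol:
            return w, b, step
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, max_steps


xs = [i / 10 for i in range(-10, 11)]
source_data = [(x, 2.0 * x + 1.0) for x in xs]  # stand-in for the source task
target_data = [(x, 2.1 * x + 0.9) for x in xs]  # related target task

# "Pre-training": learn weights on the source task from a zero start.
w_pre, b_pre, _ = train(0.0, 0.0, source_data)

# Target task from scratch vs. starting from the pre-trained weights.
_, _, steps_scratch = train(0.0, 0.0, target_data)
_, _, steps_transfer = train(w_pre, b_pre, target_data)

print(f"from scratch: {steps_scratch} steps, with transfer: {steps_transfer} steps")
```

Because the two tasks are related, the pre-trained weights already sit close to the target solution, so the second run converges in fewer steps. With real networks the gap is usually far larger, since the source task is trained on much more data than the target task has.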

Here’s a deeper look at the main techniques involved:

1. Pre-Trained Models

Pre-trained models are the foundation of transfer learning. These are models that have been trained on massive datasets and are made publicly available for reuse.

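- Computer Vision: Models like ResNet, VGG, and Inception are commonly distributed with weights trained on ImageNet. The widely used ImageNet-1k subset contains about 1.28 million training images in 1,000 categories, and the full dataset is considerably larger.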

- Natural Language Processing (NLP): Models such as BERT, GPT, and RoBERTa are trained on large text corpora and can be fine-tuned for tasks like text classification or summarization.

- Speech Recognition: Pre-trained models can recognize general speech patterns and then be adapted for specific accents or languages.

Using pre-trained models is like hiring an expert who already knows the basics. You only need to teach them the specifics of your new problem.

2. Feature Extraction

In feature extraction, the pre-trained model is used as a fixed feature generator. The model’s earlier layers extract general features such as edges, textures, or shapes (in images), or grammar and semantic structures (in text).

- These features are then fed into a new classifier (like logistic regression or a fully connected neural network) to solve the specific task.

- Example: Using a CNN trained on ImageNet to extract features from MRI scans, and then adding a small neural network to classify the presence of tumors.

This method works best when the new dataset is small and somewhat similar to the dataset used for pre-training.
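A minimal sketch of this idea, using only the Python standard library, is shown here. Everything in it is illustrative: `pretrained_features` is a hypothetical stand-in for a frozen pre-trained network (a real one would be a CNN or transformer), and the seven-point dataset is made up. The point is the division of labor: the extractor is run once and never updated, and only a small logistic-regression head is trained on its outputs.

```python
import math


def pretrained_features(x):
    """Stand-in for a frozen pre-trained network (hypothetical).

    It is never updated during training; it only maps raw input to features.
    """
    return [x * x]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(features, labels, lr=0.5, epochs=2000):
    """Train a logistic-regression head on fixed features with gradient descent."""
    n, dim = len(features), len(features[0])
    weights, bias = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for f, y in zip(features, labels):
            error = sigmoid(sum(w * v for w, v in zip(weights, f)) + bias) - y
            for j in range(dim):
                grad_w[j] += error * f[j] / n
            grad_b += error / n
        weights = [w - lr * g for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b
    return weights, bias


def predict(weights, bias, x):
    f = pretrained_features(x)
    return int(sigmoid(sum(w * v for w, v in zip(weights, f)) + bias) >= 0.5)


# Small labeled target dataset: label 1 when |x| > 1. This is not linearly
# separable in raw x, but it is separable in the extracted feature x*x.
raw_inputs = [-2.0, -1.5, -0.5, 0.0, 0.5, 1.5, 2.0]
labels = [1, 1, 0, 0, 0, 1, 1]

# Run the frozen extractor once, then train only the new classifier head.
features = [pretrained_features(x) for x in raw_inputs]
weights, bias = train_head(features, labels)

correct = sum(predict(weights, bias, x) == y for x, y in zip(raw_inputs, labels))
accuracy = correct / len(labels)
print(f"training accuracy: {accuracy:.2f}")
```

Since the extractor is fixed, its outputs can be computed once and cached, which is a large part of why feature extraction is cheap compared with retraining the whole network.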

3. Fine-Tuning

Fine-tuning takes feature extraction one step further. Instead of keeping the pre-trained layers fixed, you retrain some or all layers of the model on your new dataset.

- Early layers capture very generic features (like edges), while later layers capture more task-specific patterns.

- By fine-tuning, the model adjusts its knowledge to fit the new problem more closely.

- Example: Starting with BERT, which was pre-trained on English Wikipedia and BooksCorpus, and fine-tuning it for a sentiment analysis task in the financial domain.

Fine-tuning usually requires more computation than feature extraction, but it often leads to higher accuracy on specialized tasks.
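One common way to fine-tune without overwriting the generic knowledge in the early layers is to give those layers smaller learning rates than the later, more task-specific ones. The helper below is a sketch of that idea under stated assumptions: the function name, the layer names, and the default values `top_lr=1e-3` and `decay=0.5` are all hypothetical choices for illustration, not settings taken from any particular library or paper.

```python
def layerwise_learning_rates(layer_names, top_lr=1e-3, decay=0.5):
    """Map each layer to a learning rate that shrinks toward the input.

    `layer_names` is ordered from the input layer to the output layer.
    The last layer gets `top_lr`; each earlier layer gets `decay` times
    the rate of the layer after it.
    """
    last = len(layer_names) - 1
    return {
        name: top_lr * decay ** (last - index)
        for index, name in enumerate(layer_names)
    }


# Hypothetical layer names for a small pre-trained network.
layers = ["embeddings", "encoder_block_1", "encoder_block_2", "classifier_head"]
rates = layerwise_learning_rates(layers)

for name in layers:
    print(f"{name:>16}: {rates[name]:.6f}")
```

With these defaults the classifier head trains at 0.001 while the embeddings train at one eighth of that rate, so the layers that hold the most general features change the least. In a deep learning framework, a mapping like this would typically be passed to the optimizer as per-layer parameter groups.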

4. Freezing Layers

Another common strategy is layer freezing, where the lower layers of the network are kept fixed (since they capture general knowledge), and only the top layers are retrained.

- This reduces the risk of overfitting, especially when the new dataset is small, because far fewer parameters are updated.

- Example: In image recognition, the first few layers that identify edges and shapes can remain unchanged, while the final classification layers are trained to recognize specific objects like defective products on a factory line.
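The bookkeeping behind layer freezing can be sketched in plain Python. The `Layer` class, the layer names, and the parameter counts below are hypothetical and chosen only to make the arithmetic easy to follow; real frameworks expose an equivalent per-parameter switch rather than this exact structure.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    num_params: int
    trainable: bool = True


def freeze_lower_layers(layers, num_trainable_top):
    """Freeze every layer except the last `num_trainable_top` ones (in place)."""
    cutoff = len(layers) - num_trainable_top
    for index, layer in enumerate(layers):
        layer.trainable = index >= cutoff


def count_trainable(layers):
    """Total number of parameters that would still be updated during training."""
    return sum(layer.num_params for layer in layers if layer.trainable)


# Hypothetical layer sizes, ordered from input to output.
network = [
    Layer("conv_block_1", 10_000),
    Layer("conv_block_2", 70_000),
    Layer("conv_block_3", 300_000),
    Layer("classifier", 5_000),
]

total = sum(layer.num_params for layer in network)
freeze_lower_layers(network, num_trainable_top=1)
trainable = count_trainable(network)
print(f"trainable parameters: {trainable} of {total}")
```

In this made-up network only 5,000 of 385,000 parameters remain trainable after freezing, which is why a small dataset can be enough to train the remaining layers.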

5. Practical Example in Action

Imagine a company wants to build an AI system to detect defects in manufactured products using images:

- Training from scratch would require tens of thousands of labeled defect images, which are expensive to collect.

- Instead, they can use a ResNet model pre-trained on ImageNet.

- They may freeze the initial layers (since general visual features are already captured) and fine-tune the last layers with a few thousand defect images.

- The result: a high-performing model trained in a fraction of the time, with much less data.

Applications of Transfer Learning

Transfer learning has become a cornerstone of modern AI, powering practical solutions across industries and technologies. Some notable applications include:

1. Natural Language Processing (NLP)

Pre-trained language models such as BERT (by Google) and GPT (by OpenAI) are trained on massive text corpora and fine-tuned for specific tasks. For example:

- BERT has been used in Google Search since 2019 to better understand user queries.

- GPT-based models power advanced chatbots like ChatGPT, capable of conversational AI, text summarization, and content generation.

2. Computer Vision

Transfer learning is heavily used in image recognition and object detection:

- ResNet and VGG models, originally trained on ImageNet, are commonly adapted for tasks such as facial recognition.

- In healthcare, DeepMind’s AI uses transfer learning to analyze eye scans, helping doctors detect conditions such as diabetic retinopathy and macular degeneration.

3. Speech Recognition

- Pre-trained models such as Wav2Vec 2.0 (by Facebook AI) have been fine-tuned for voice assistants and transcription tools.

- Google Assistant and Amazon Alexa apply transfer learning to recognize different accents, dialects, and new languages, improving their global usability.

4. Healthcare

Transfer learning enables AI to work with small datasets of medical images:

- IBM Watson Health applies transfer learning to detect diseases in X-rays and MRIs.

- Stanford’s CheXNet model, a DenseNet initialized with ImageNet pre-trained weights and then trained on chest X-rays, was reported to identify pneumonia with accuracy comparable to radiologists.

5. Finance

In financial services, transfer learning strengthens fraud detection and risk analysis:

- PayPal uses AI models to detect fraudulent transactions, improving accuracy even when fraudulent cases are rare compared to legitimate ones.

- Banks leverage transfer learning for credit risk scoring and stock market prediction based on time-series data.

Transfer learning is reshaping how machine learning models are developed and applied. By leveraging knowledge from pre-trained models, it effectively tackles challenges such as limited datasets, lengthy training times, and high computational costs. With the rapid growth of AI and the emergence of foundation models and few-shot learning, transfer learning is set to remain a key driver of innovation. For anyone aiming to build a strong career in artificial intelligence, mastering this technique is essential.
