Supervised vs. Unsupervised Learning: What’s the Difference?

Discover the key differences between supervised and unsupervised learning in machine learning. Understand how each method works, their real-world applications, and when to use them. Perfect for beginners exploring the world of AI and data science.

Machine learning is a subset of artificial intelligence (AI) that enables systems to learn and improve from experience without being explicitly programmed. By using algorithms to analyze data, machine learning models identify patterns, make decisions, and generate predictions. This field has gained significant traction in industries ranging from healthcare to finance due to its ability to automate processes and deliver accurate, data-driven insights.

Machine learning is broadly categorized into three types: supervised learning, unsupervised learning, and reinforcement learning. Among these, supervised and unsupervised learning are the most foundational, forming the bedrock of countless AI applications. Understanding the differences between them is crucial for selecting the right algorithm and ensuring the success of your data science projects. The United States has the largest machine learning market worldwide in 2024, with a value of over 21 billion U.S. dollars. India, despite its large population, is still only comparable to the large European nations in market size.

What is Supervised Learning?

Supervised learning is a widely used machine learning approach where an algorithm is trained on a labeled dataset. In this context, “labeled” means that each input data point is paired with the correct output or result. The goal of supervised learning is for the model to learn the relationship between the input features and the corresponding outputs so it can make accurate predictions on new, unseen data.

Role of Labeled Data in Supervised Learning

Labeled data is the cornerstone of supervised learning. It refers to datasets where each input is paired with a corresponding, correct output. This structured format allows the algorithm to learn by example, understanding the relationship between features (inputs) and targets (outputs).

Labeled data plays a crucial role in training machine learning models by allowing them to evaluate their predictions and make necessary adjustments to reduce errors. These labels serve as a reference, helping the model learn patterns, identify trends, and make accurate predictions on new, unseen data. The machine learning market is projected to reach a size of US$105.45 billion by 2025 according to Statista, reflecting its growing importance and widespread adoption across industries.

The quality and quantity of labeled data significantly impact the performance of a supervised learning model. High-quality labels lead to more accurate and reliable predictions, while noisy or incorrect labels can mislead the algorithm, resulting in poor model performance. Additionally, a larger dataset typically provides a more diverse range of examples, helping the model generalize better across various scenarios.

Refer these below articles:

- Understanding Machine Learning: Basics for Beginners

- The Importance of Feature Engineering in Machine Learning

- Getting Started with Machine Learning: A Beginner’s Guide

Examples of Supervised Learning Algorithms

Supervised learning encompasses a wide range of algorithms that are designed to learn from labeled data and make predictions or classifications. Below are some commonly used supervised learning algorithms:

- Linear Regression: Linear Regression is used for predicting continuous values. It models the relationship between one or more independent variables and a dependent variable by fitting a linear equation to the observed data. For example, it can be used to predict housing prices based on features like size, location, and number of bedrooms.

- Logistic Regression: Despite its name, Logistic Regression is used for classification problems. It estimates the probability of a binary outcome, such as whether an email is spam or not.

- Decision Trees: Decision Trees use a tree-like model of decisions and their possible consequences. They are easy to interpret and useful for both classification and regression tasks. The algorithm splits the data based on feature values to make predictions.

- Support Vector Machines (SVM): SVMs are powerful classifiers that work well in high-dimensional spaces. They find the optimal hyperplane that separates data points of different classes with maximum margin. SVMs are effective in applications like image recognition and bioinformatics.

- K-Nearest Neighbors (KNN): KNN is a simple yet effective algorithm that classifies data points based on the majority class among its ‘k’ nearest neighbors. It’s widely used in pattern recognition tasks.

- Random Forest: Random Forest is an ensemble method that builds multiple decision trees and merges them to get a more accurate and stable prediction. It handles both classification and regression problems effectively and reduces the risk of overfitting.

- Naive Bayes: Based on Bayes’ Theorem, this algorithm assumes independence between predictors. It is particularly effective for text classification problems such as spam filtering and sentiment analysis.

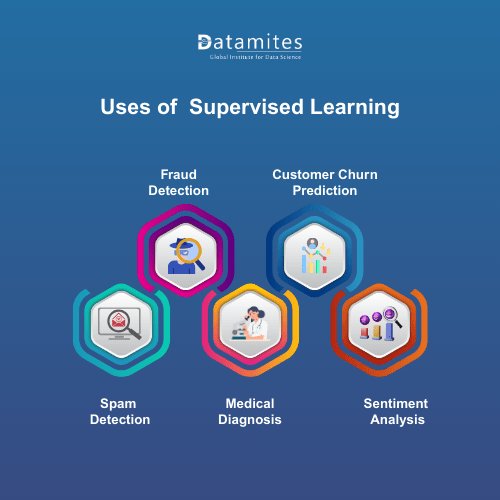

Real-World Use Cases of Supervised Learning

Supervised learning plays a critical role in solving real-world problems across various industries by leveraging labeled data to make accurate predictions and informed decisions. Below are some key use cases:

- Spam Detection: Email service providers use supervised learning algorithms like Naive Bayes and Support Vector Machines (SVM) to classify emails as spam or not spam. These models are trained on large datasets labeled with spam and non-spam emails to detect unwanted messages with high accuracy.

- Fraud Detection: In the banking and finance sector, supervised learning models such as Logistic Regression and Random Forests are employed to detect fraudulent transactions. These algorithms are trained on historical data labeled as “fraudulent” or “legitimate” to identify unusual patterns and prevent financial fraud.

- Medical Diagnosis: Supervised learning is widely used in the healthcare industry for disease prediction and diagnosis. Algorithms like Decision Trees and Support Vector Machines help predict conditions such as diabetes, heart disease, and cancer by analyzing patient records labeled with diagnosis results.

- Customer Churn Prediction: Businesses use supervised learning to forecast customer churn by training models on datasets labeled with customer behavior and whether they stayed or left. This helps companies develop targeted retention strategies.

- Sentiment Analysis: In marketing and social media monitoring, supervised learning is applied to analyze customer reviews, feedback, or social media posts. Algorithms are trained on text data labeled with sentiments (positive, negative, neutral) to assess public opinion and improve products or services.

What is Unsupervised Learning?

Unsupervised learning is a type of machine learning technique in which algorithms analyze and interpret datasets without any labeled outputs. Unlike supervised learning, where the model is trained on a dataset containing input-output pairs, unsupervised learning involves working with data that has no predefined labels or outcomes. The primary objective of unsupervised learning is to uncover hidden patterns, structures, or relationships within the data.

Role of Unlabeled Data in Unsupervised Learning

Unlabeled data plays a central and indispensable role in unsupervised learning. Unlike supervised learning, which relies on labeled datasets containing both input features and corresponding target values, unsupervised learning works solely with input data that has no predefined outcomes or categories. The algorithm's task is to explore the structure of this raw data and identify meaningful patterns, relationships, or groupings without any external guidance.

The abundance of unlabeled data in real-world scenarios such as images, text, and user behavior makes unsupervised learning especially valuable. Labeling data can be time-consuming, costly, or even impractical, making unsupervised learning a scalable solution for harnessing large datasets. By applying statistical and mathematical techniques, these models can cluster similar items, detect anomalies, and reduce dimensionality, uncovering hidden patterns and insights. This trend is expected to grow significantly, with the market projected to reach $209.91 billion by 2029, reflecting a remarkable compound annual growth rate (CAGR) of 38.8%, according to Fortune Business Insights.

Ultimately, unlabeled data serves as the foundation upon which unsupervised learning builds intelligent systems capable of discovering trends and making sense of complex information autonomously. This enables businesses and researchers to generate insights, segment markets, personalize experiences, and optimize operations—without the need for manually labeled training data.

Examples of Unsupervised Learning Algorithms

Unsupervised learning algorithms are designed to find hidden patterns or intrinsic structures in data without the use of labeled outcomes. Below are some of the most widely used unsupervised learning algorithms:

- K-Means Clustering: K-Means is a popular clustering algorithm that partitions data into a predetermined number of clusters (k) by minimizing the variance within each cluster. It is commonly used in market segmentation, customer grouping, and image compression.

- Hierarchical Clustering: This algorithm builds a hierarchy of clusters either in a bottom-up (agglomerative) or top-down (divisive) manner. It creates a tree-like structure (dendrogram), making it easier to visualize how data points are grouped at various levels of granularity.

- Principal Component Analysis (PCA): PCA is a dimensionality reduction technique used to simplify large datasets by transforming them into a smaller set of uncorrelated variables called principal components. It is widely applied in exploratory data analysis, visualization, and noise reduction.

- DBSCAN (Density-Based Spatial Clustering of Applications with Noise): DBSCAN groups together closely packed points and identifies outliers as noise. It does not require specifying the number of clusters in advance and is effective at discovering clusters of arbitrary shapes.

- Autoencoders: Autoencoders are neural networks used to learn compressed representations of data. They are especially useful for anomaly detection, image reconstruction, and feature learning.

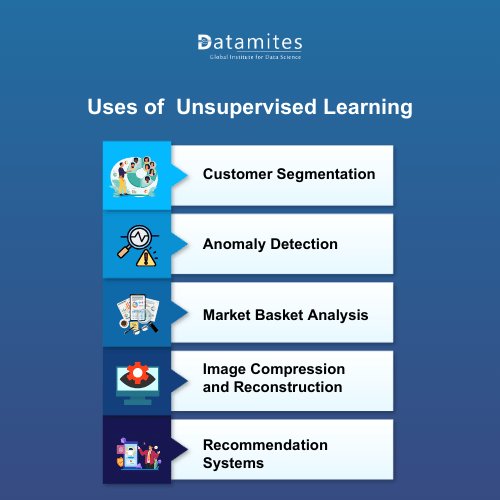

Real-World Use Cases of Unsupervised Learning

Unsupervised learning plays a vital role in discovering hidden patterns and insights in data without relying on labeled outcomes. It is widely applied across various industries to solve complex problems where manual labeling is impractical or unavailable. Here are some key real-world applications:

- Customer Segmentation: Businesses use unsupervised learning to segment their customer base into distinct groups based on purchasing behavior, demographics, or engagement levels. This helps tailor marketing strategies, personalize product recommendations, and improve customer experience.

- Anomaly Detection: In industries like finance and cybersecurity, unsupervised learning algorithms detect unusual patterns that may indicate fraud, network intrusions, or equipment failures. Since anomalies often differ significantly from normal behavior, unsupervised models can flag these deviations effectively.

- Market Basket Analysis: Retailers use clustering and association rule learning to identify which products are frequently bought together. This insight informs inventory management, store layout, and targeted promotions.

- Image Compression and Reconstruction: Techniques like Principal Component Analysis (PCA) and autoencoders are used in image processing to reduce file sizes and reconstruct images efficiently, helping in storage and transmission without losing key visual information.

- Recommendation Systems: Although often used alongside supervised methods, unsupervised learning helps in uncovering latent features in user preferences or product similarities to make personalized suggestions in platforms like Netflix, Amazon, or Spotify.

Differences Between Supervised and Unsupervised Learning

Supervised and unsupervised learning are two fundamental types of machine learning, each with its unique approach, objectives, and applications. Here are the key differences between them:

Data Labeling

- Supervised Learning relies on labeled data, meaning each input comes with a corresponding output. The model learns from these known pairs to make predictions on new data.

- Unsupervised Learning uses unlabeled data. The algorithm explores the underlying structure or patterns in the data without predefined labels.

Learning Objective

- In supervised learning, the goal is to predict outcomes or classify data based on historical examples.

- In unsupervised learning, the goal is to find hidden patterns, groupings, or associations within the data.

Common Algorithms

- Supervised algorithms include Linear Regression, Logistic Regression, Decision Trees, Support Vector Machines (SVM), and Neural Networks.

- Unsupervised algorithms include K-Means Clustering, Hierarchical Clustering, Principal Component Analysis (PCA), and Association Rules.

Output Type

- Supervised learning produces a specific output, such as a class label or a continuous value.

- Unsupervised learning does not produce a specific target output but reveals data structures like clusters or correlations.

Real-World Applications

- Supervised learning is commonly used in fraud detection, spam filtering, sentiment analysis, and medical diagnosis.

- Unsupervised learning is ideal for customer segmentation, anomaly detection, market basket analysis, and recommendation systems.

Model Evaluation

- Supervised models can be evaluated using accuracy, precision, recall, F1 score, and other metrics since true labels are available.

- Unsupervised models are harder to evaluate due to the absence of ground truth, often relying on metrics like silhouette score or visual inspection.

Pros and Cons of Supervised Learning and Unsupervised Learning

Supervised Learning: Supervised learning involves training a model on a labeled dataset, meaning the input data is paired with the correct output.

Pros:

- High Accuracy: With labeled data, models often achieve high performance on tasks like classification and regression.

- Predictable Output: You know what to expect from the model since it’s trained to predict a specific output.

- Clear Evaluation: Easy to measure performance using metrics like accuracy, precision, recall, etc.

- Real-world Applications: Widely used in spam detection, fraud detection, sentiment analysis, etc.

Cons:

- Data Labeling Required: Needs a large, labeled dataset which can be expensive and time-consuming.

- Less Adaptable: May struggle with unseen data or patterns that don’t fit the training set.

- Overfitting Risk: Can overfit to the training data if not regularized properly.

Unsupervised Learning: Unsupervised learning deals with unlabeled data. The model tries to find hidden patterns or intrinsic structures in the input data.

Pros:

- No Labeled Data Needed: Saves time and cost since manual labeling is not required.

- Discover Hidden Patterns: Good at uncovering unknown structures in the data, like clusters or associations.

- Useful for Preprocessing: Often used to reduce dimensionality (e.g., PCA) or to cluster data for further analysis.

Cons:

- Harder to Evaluate: No ground truth makes it tricky to assess the model’s performance.

- Less Control: Results can be unpredictable, and interpreting them may require domain expertise.

- May Detect Irrelevant Patterns: Might find patterns that are statistically valid but practically meaningless.

Read these below articles:

Supervised and unsupervised learning are foundational pillars of machine learning, each serving distinct purposes and offering unique advantages. Supervised learning is highly effective for prediction and classification tasks that require labeled data, while unsupervised learning excels at uncovering patterns and conducting exploratory data analysis using unlabeled data. According to Gartner, 37% of organizations have adopted AI in some capacity, and the number of enterprises implementing artificial intelligence has increased by 270% over the past four years, highlighting the rapid growth and adoption of AI technologies.

Whether you're just starting your machine learning journey or refining your skills, gaining hands-on experience with both learning types will prepare you to tackle real-world challenges with confidence and precision.

The DataMites Artificial Intelligence Course in Bangalore is a comprehensive and industry-aligned training program designed to equip learners with the in-demand skills required to excel in the field of AI. With a balanced blend of theoretical concepts and hands-on practice, this course is ideal for beginners, intermediate learners, and working professionals looking to transition into artificial intelligence roles.

One of the key highlights of DataMites is the opportunity it provides to work on real-world datasets and projects, closely simulating the challenges encountered in industry environments. This hands-on experience not only builds confidence but also equips learners to become job-ready with a strong, professional portfolio.

Available in both online and offline formats across key Indian cities such as Bangalore, Chennai, Hyderabad, Coimbatore, Mumbai, Ahmedabad, Kolkata, and more, the Artificial Intelligence Training in Pune ensures accessibility and flexibility for all types of learners. Whether you’re aiming to become an AI engineer, data scientist, or machine learning specialist, this course lays a strong foundation and provides a structured path toward your career goals.