Best Categorical Variable Encoding Techniques

What is Feature Encoding?

Feature encoding is a step in the feature engineering (data preprocessing) pipeline. It converts categorical features into numerical equivalents, because most machine learning algorithms cannot work directly with string data. There are many encoding techniques used in feature engineering; let’s explore some of them.

- Label encoding:

Label encoding assigns each label an integer based on the alphabetical order of the labels. Because f comes before m in the alphabet, female is encoded as 0 and male as 1.

[male, female] → [1, 0]

[blue, orange, red] → [0, 1, 2]
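To make the mapping concrete, here is a minimal sketch in plain Python. The helper name `label_encode` and the sample lists are our own illustration, not part of any library; in practice a ready-made encoder such as scikit-learn's `LabelEncoder` is usually used instead.

```python
def label_encode(values):
    """Map each distinct category to an integer in alphabetical order."""
    classes = sorted(set(values))  # e.g. ["female", "male"]
    mapping = {label: code for code, label in enumerate(classes)}
    return [mapping[v] for v in values], mapping


# Hypothetical sample data for illustration
genders = ["male", "female", "female", "male"]
gender_codes, gender_mapping = label_encode(genders)

colors = ["red", "blue", "orange", "blue"]
color_codes, color_mapping = label_encode(colors)

print(gender_mapping, gender_codes)
print(color_mapping, color_codes)
```

Note that the integers carry no real meaning here: red is not "greater" than blue, even though its code is larger.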

- Ordinal encoding:

Ordinal encoding is used for ordinal features, i.e. features whose categories follow a natural order. The integers are assigned so that this order is preserved.

[cold, warm, hot] → [0, 1, 2]

[poor, fair, good, very good, excellent] → [0, 1, 2, 3, 4]

[bearable pain, moderate pain, unbearable pain] → [0, 1, 2]
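The difference from label encoding is that the order is supplied by us rather than taken from the alphabet. A small sketch, assuming a hypothetical helper `ordinal_encode` and made-up sample readings:

```python
def ordinal_encode(values, ordered_categories):
    """Encode values by their position in an explicitly ordered category list."""
    rank = {category: i for i, category in enumerate(ordered_categories)}
    return [rank[v] for v in values]


# The order comes from domain knowledge, lowest to highest
temperature_order = ["cold", "warm", "hot"]
readings = ["warm", "cold", "hot", "hot"]  # hypothetical sample data

encoded_readings = ordinal_encode(readings, temperature_order)
print(encoded_readings)
```

A value that is missing from the ordered list raises a `KeyError` in this sketch, which is a simple way to catch unexpected categories early.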

- Frequency encoding:

Frequency encoding replaces each category with how often it occurs in the data, i.e. its value count (or its share of the rows). It can be useful for nominal features, which don’t have any inherent order.

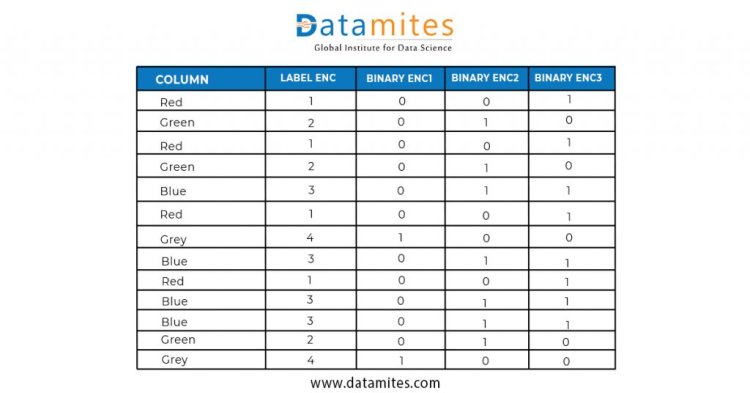
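The idea can be sketched with the standard library's `Counter`. The function name `frequency_encode`, its `normalize` flag, and the city list are assumptions made for this example:

```python
from collections import Counter


def frequency_encode(values, normalize=False):
    """Replace each category with its count, or its share of rows if normalize=True."""
    counts = Counter(values)
    total = len(values)
    if normalize:
        return [counts[v] / total for v in values]
    return [counts[v] for v in values]


# Hypothetical sample data for illustration
cities = ["Pune", "Delhi", "Pune", "Mumbai", "Pune", "Delhi"]

city_counts = frequency_encode(cities)
city_shares = frequency_encode(cities, normalize=True)
print(city_counts)
print(city_shares)
```

One thing to watch for: two categories that occur equally often receive the same value, so the model can no longer tell them apart.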

- Binary encoding:

Binary encoding first converts the categorical data to integers using label encoding, then writes each integer in binary and stores each binary digit in its own column. When a feature has many categories, this needs far fewer columns than one hot encoding.

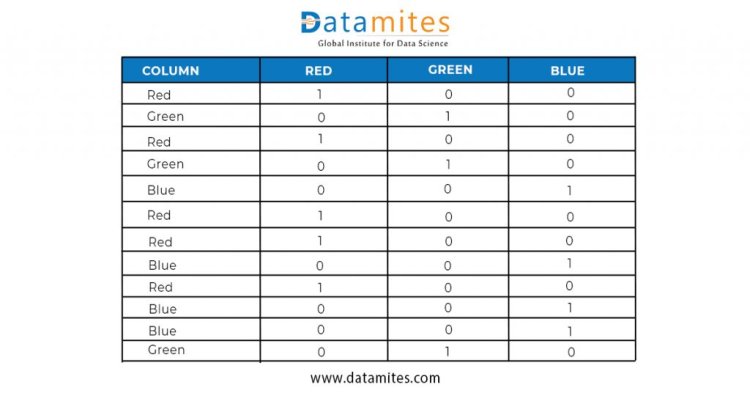
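A rough sketch of the two steps (integer codes, then binary digits) in plain Python. The helper `binary_encode` is hypothetical, and starting the integer codes at 0 is our assumption; libraries may number the categories differently.

```python
def binary_encode(values):
    """Label-encode the categories, then split each code into binary digits."""
    classes = sorted(set(values))
    codes = {label: i for i, label in enumerate(classes)}  # assumption: codes start at 0
    # Number of bits needed to represent the largest code (at least one column)
    width = max(1, (len(classes) - 1).bit_length())
    return [[int(bit) for bit in format(codes[v], f"0{width}b")] for v in values]


# Hypothetical sample data: 5 categories fit into 3 binary columns
colors = ["red", "blue", "green", "yellow", "black"]
encoded_colors = binary_encode(colors)

for color, bits in zip(colors, encoded_colors):
    print(color, bits)
```

Five categories need only three columns here, whereas one hot encoding would need five.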

- One hot encoding:

One hot encoding creates one new feature for each label present in the data. For every row, the feature that matches the row's label is marked as 1 and all the other new features are marked as 0.
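As a minimal sketch, one hot encoding can be written in a few lines of plain Python. The helper `one_hot_encode` and the sample colors are made up for illustration; with real data, `pandas.get_dummies` or scikit-learn's `OneHotEncoder` are the usual choices.

```python
def one_hot_encode(values):
    """Create one 0/1 column per distinct label; exactly one column is 1 per row."""
    classes = sorted(set(values))  # column order, alphabetical
    rows = [[1 if v == c else 0 for c in classes] for v in values]
    return classes, rows


# Hypothetical sample data for illustration
colors = ["blue", "orange", "red", "blue"]
columns, one_hot_rows = one_hot_encode(colors)

print(columns)
for row in one_hot_rows:
    print(row)
```

The number of new columns equals the number of distinct labels, so features with very many categories can make the dataset wide.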

These are the commonly used encoding techniques; depending on the data and the domain, there can be more ways to convert categorical data. Do apply them to your data and compare the performance of each technique.

Keep Learning.

Datamites Team
