The Complete Guide to Recurrent Neural Networks

Introduction

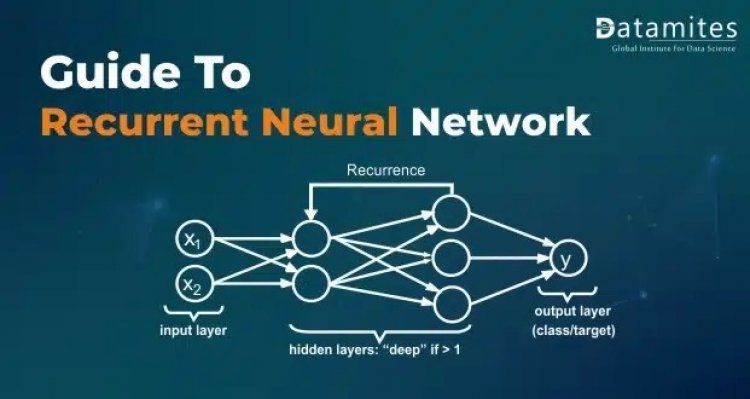

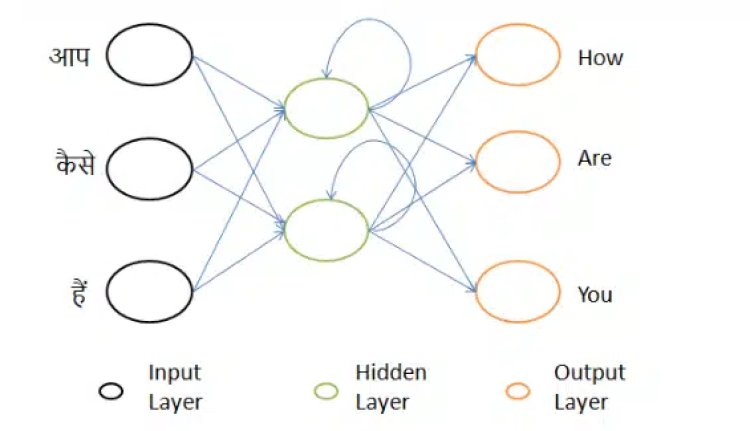

A Recurrent Neural Network (RNN) is a type of neural network used mainly for NLP, sequence models, and time series data. The major difference between an RNN and a plain Artificial Neural Network is that an RNN can retain information about past inputs, which is very useful for sequence models and time series.

Consider translating sentences from one language to another: each data point depends on the data points before it, and the meaning of a word frequently changes with the structure of the sentence. Size matters too: if the vocabulary for an NLP task is 20,000 words, processing vectors of that size with a plain artificial neural network would require too much computation.

Let's have a look at how an RNN works.

Mechanism of RNN

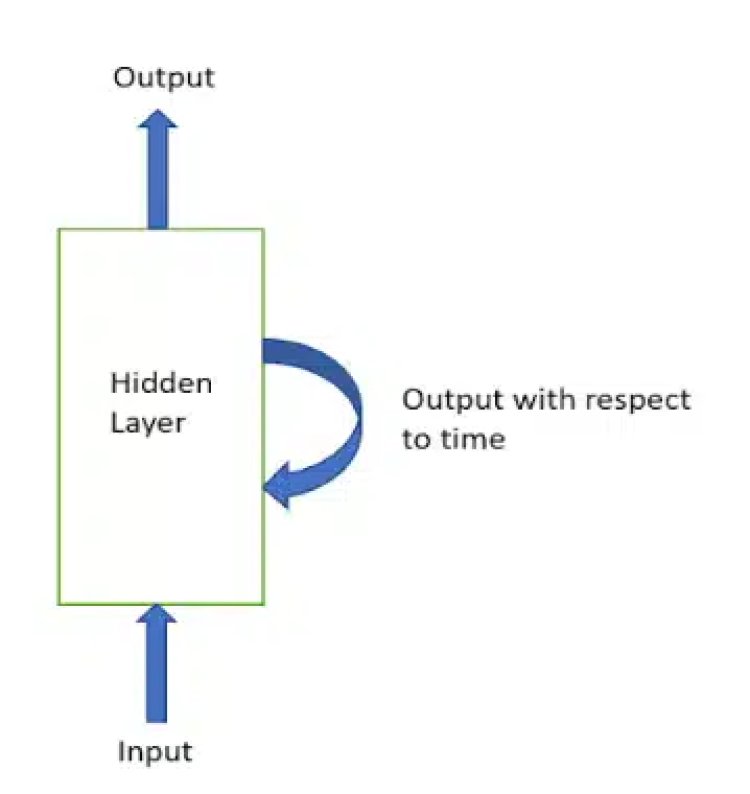

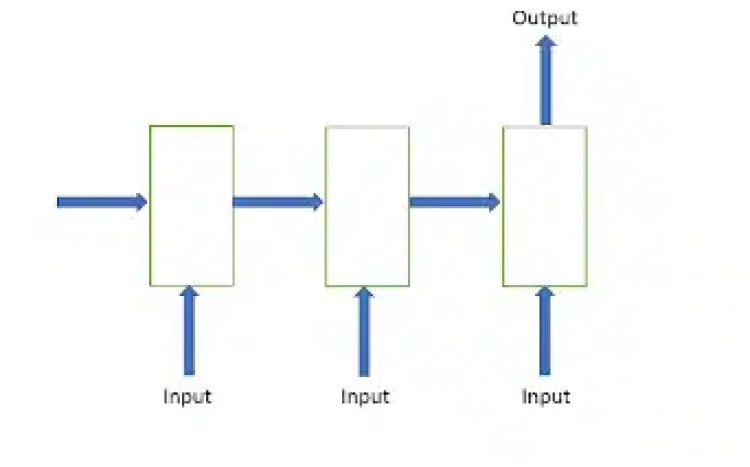

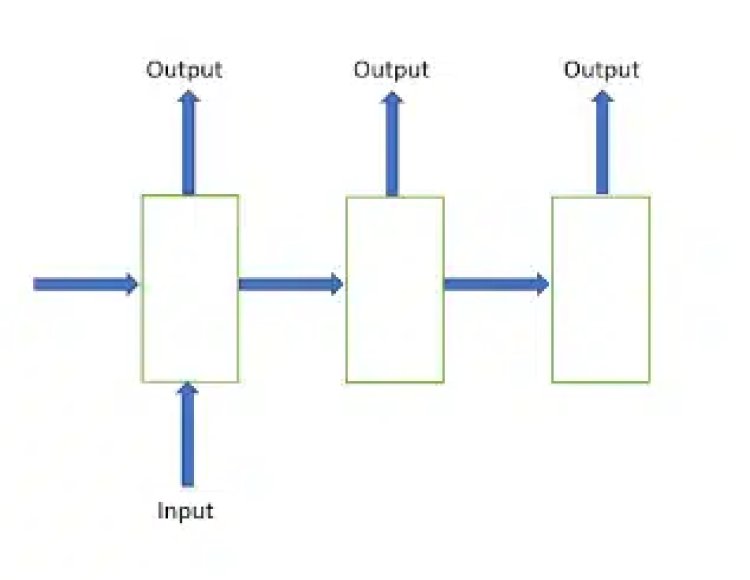

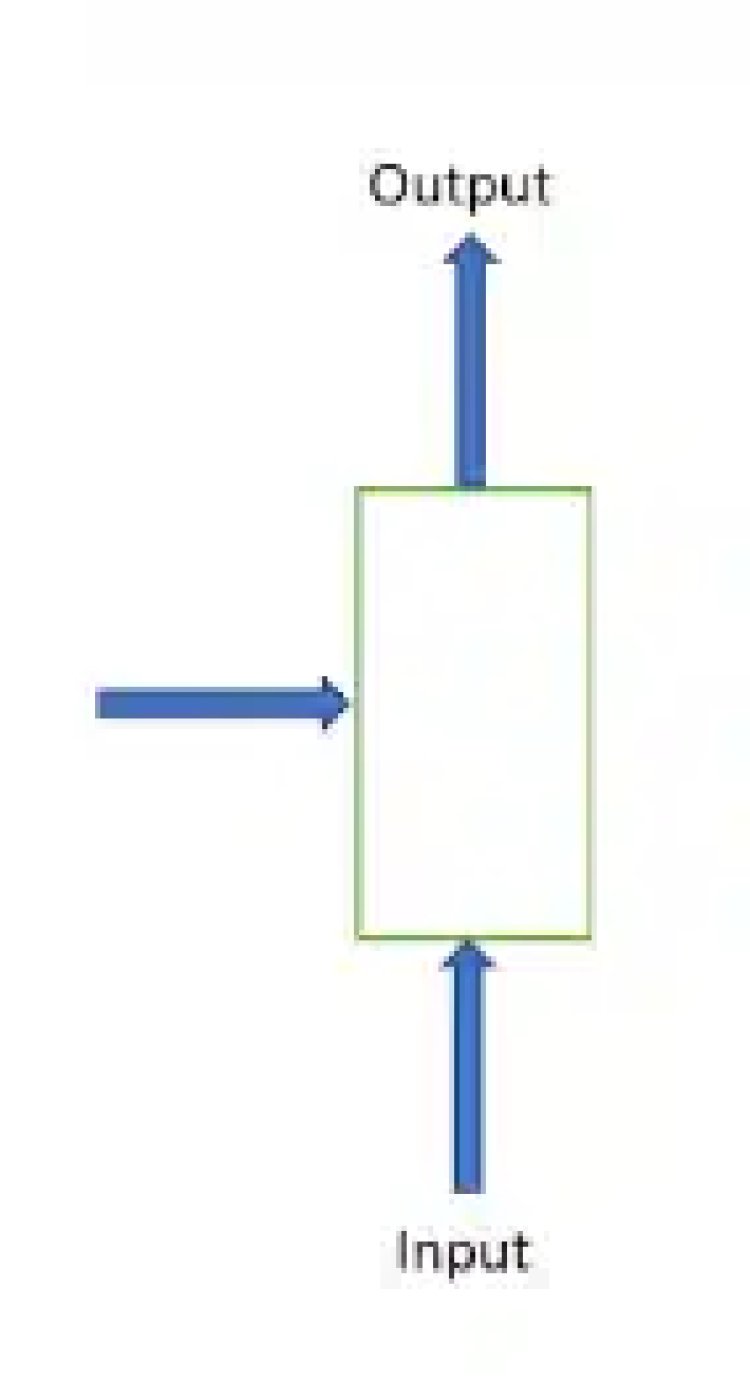

To build relations between data points, an RNN feeds the output of the recurrent layer at one time step into the recurrent layer at the next time step, along with that step's input.

In other words, the recurrent layers sit in a feedback loop, as shown in the figure above.

For example, suppose we want to translate a sentence from Hindi to English:

input=आप कैसे हैं

output=How are you

Here, the order of the words is important. The RNN keeps track of this order by feeding each output back into the next step.

As in an Artificial Neural Network, forward propagation calculates the output and backward propagation updates the weights and biases. An RNN works with both as well.

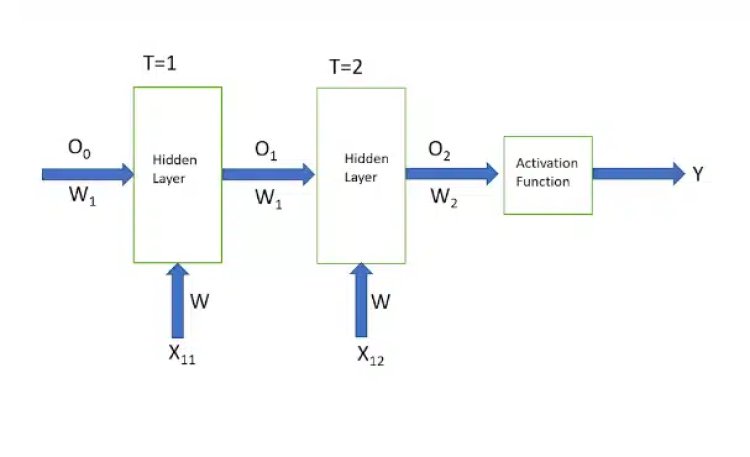

Below is a simple representation of RNN with 2 Recurrent layers.

Here, X is the input vector at each time step

Y is the predicted output

O0 is the initial value at time 0

O1 is the output at time 1

O2 is the output at time 2

T represents the time

W is the weight

For the activation function f, there are many choices; the most frequently used are:

- Sigmoid

- Softmax

- ReLU
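To make these three functions concrete, here is a minimal pure-Python sketch. The function names and the sample inputs are chosen for illustration only; deep learning libraries ship their own vectorized versions.

```python
import math


def sigmoid(x):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def relu(x):
    """Pass positive values through and clamp negative values to zero."""
    return max(0.0, x)


def softmax(values):
    """Turn a list of scores into probabilities that sum to 1."""
    # Subtracting the maximum avoids overflow in exp() without changing the result.
    shift = max(values)
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


print(sigmoid(0.0))
print(relu(-2.0), relu(3.0))
print(softmax([1.0, 2.0, 3.0]))
```

Sigmoid and ReLU act on one value at a time, while softmax acts on a whole vector of scores, which is why it usually appears on the output layer of a classifier.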


Forward propagation

Forward propagation starts with weight initialization, which assigns each weight a value of zero or close to zero (in practice, small random values).

Now let's look at the mathematics of the recurrent layers.

When T=1

O1 = f(X11 * W)

When T=2

O2 = f(X12 * W + O1 * W1)

Here W1 is the weight applied to the output fed back from the previous time step.
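The two equations above can be sketched as a loop over time steps. This is a minimal scalar version, assuming tanh for the activation f and an initial output of 0; both are assumptions, since the text leaves f open, and the sample inputs and weights are made up.

```python
import math


def forward(inputs, w, w1, o0=0.0, f=math.tanh):
    """Run the recurrent layer over a sequence and return every output.

    inputs : scalar inputs, one per time step (X11, X12, ... in the text)
    w      : weight on the input
    w1     : weight on the fed-back output
    o0     : initial output at time 0 (assumed to be 0 here)
    f      : activation function (tanh is an assumption)
    """
    outputs = []
    prev = o0
    for x in inputs:
        # With prev = 0 at the first step this reduces to O1 = f(X11 * W).
        prev = f(x * w + prev * w1)
        outputs.append(prev)
    return outputs


o1, o2 = forward([1.0, 2.0], w=0.5, w1=0.1)
print(o1)
print(o2)
```

The same two weights are reused at every time step, which is what lets the network handle sequences of any length.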

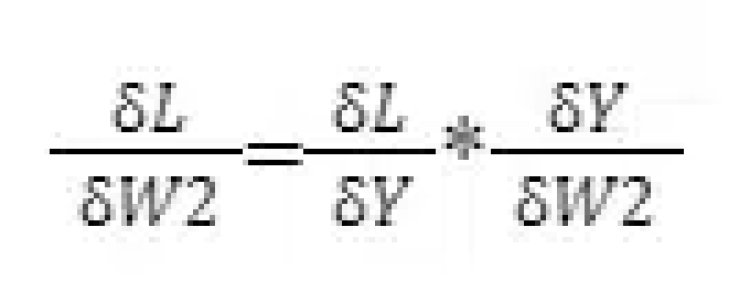

Backward Propagation

The main task of backward propagation is to update the weights so that the loss, the error between the predicted output and the actual output, is reduced.

The update starts by calculating the loss between the predicted output and the actual output. The weights are then changed in the direction that reduces this loss.

Here we have to update two weights: one associated with the feedback output and the other associated with the input.

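With gradient descent, each weight is moved against the gradient of the loss, scaled by a learning rate.

Update of W2:

W2(new) = W2(old) - learning rate * dLoss/dW2

Update of W:

W(new) = W(old) - learning rate * dLoss/dW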
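As a rough illustration of this step, the sketch below trains a scalar RNN with backpropagation through time and plain gradient descent. It assumes a tanh activation, a squared-error loss on the last output, and a fixed learning rate. The names w_in (input weight) and w_fb (feedback weight), the data, and the hyperparameters are all made up for the example.

```python
import math


def forward(xs, w_in, w_fb):
    """Return outputs [o0, o1, ..., oT] with o0 = 0 and a tanh activation."""
    outs = [0.0]
    for x in xs:
        outs.append(math.tanh(x * w_in + outs[-1] * w_fb))
    return outs


def loss_and_grads(xs, target, w_in, w_fb):
    """Squared error on the last output, plus gradients for both weights."""
    outs = forward(xs, w_in, w_fb)
    loss = (outs[-1] - target) ** 2
    grad_in, grad_fb = 0.0, 0.0
    d_out = 2.0 * (outs[-1] - target)          # dLoss/dO at the last time step
    for t in range(len(xs), 0, -1):            # walk backward through time
        d_pre = d_out * (1.0 - outs[t] ** 2)   # back through tanh
        grad_in += d_pre * xs[t - 1]           # contribution to the input weight
        grad_fb += d_pre * outs[t - 1]         # contribution to the feedback weight
        d_out = d_pre * w_fb                   # error passed to the previous step
    return loss, grad_in, grad_fb


def train(xs, target, w_in=0.1, w_fb=0.1, learning_rate=0.1, steps=200):
    """Repeat the update: new weight = old weight - learning_rate * gradient."""
    for _ in range(steps):
        _, g_in, g_fb = loss_and_grads(xs, target, w_in, w_fb)
        w_in -= learning_rate * g_in
        w_fb -= learning_rate * g_fb
    return w_in, w_fb


xs, target = [0.5, -0.2, 0.8], 0.6
loss_before, _, _ = loss_and_grads(xs, target, 0.1, 0.1)
w_in, w_fb = train(xs, target)
loss_after, _, _ = loss_and_grads(xs, target, w_in, w_fb)
print(loss_before, loss_after)
```

Because the same weights are used at every time step, each weight's gradient is the sum of its contributions from all time steps.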


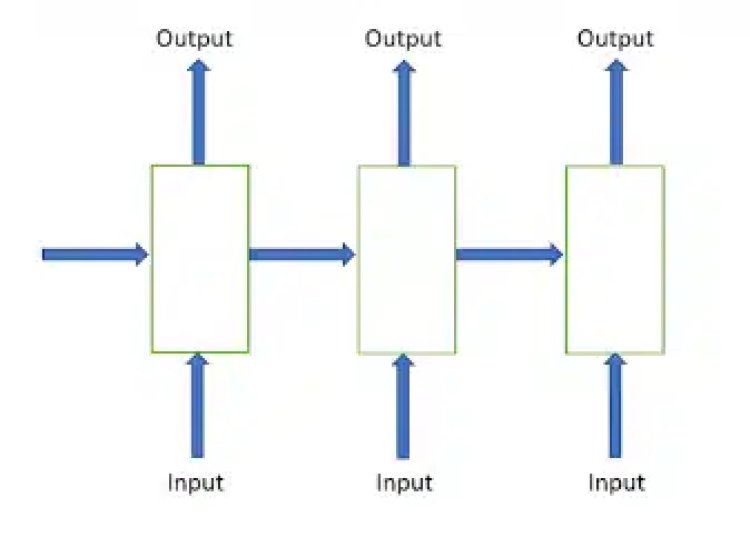

Types of Recurrent Neural Network

Recurrent Neural Networks are categorized by the number of inputs and outputs.

Types of RNN are as follows:

1. Many to Many

E.g., language translation, named entity recognition.

2. Many to One

E.g., sentiment analysis.

3. One to Many

E.g., music generation from a seed note.

4. One to One

E.g., simple tasks such as image classification.
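The difference between these types comes down to which inputs are fed in and which outputs are kept. Here is a minimal sketch using a scalar recurrent step; the weights, the tanh activation, and the function names are illustrative assumptions, and the many-to-many case shown is the simple one where input and output have the same length.

```python
import math


def step(x, prev, w=0.5, w1=0.1):
    """One recurrent step: combine the current input with the previous output."""
    return math.tanh(x * w + prev * w1)


def many_to_many(xs):
    """One output per input, e.g. one tag per word."""
    outs, prev = [], 0.0
    for x in xs:
        prev = step(x, prev)
        outs.append(prev)
    return outs


def many_to_one(xs):
    """Read the whole sequence and keep only the final output."""
    return many_to_many(xs)[-1]


def one_to_many(seed, length):
    """Start from a single input and feed each output back in as the next input."""
    outs, x, prev = [], seed, 0.0
    for _ in range(length):
        prev = step(x, prev)
        outs.append(prev)
        x = prev
    return outs


print(many_to_many([1.0, 2.0, 3.0]))
print(many_to_one([1.0, 2.0, 3.0]))
print(one_to_many(1.0, 4))
```

A one-to-one network has no recurrence to speak of: it is a single call to step with no previous output.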


Use Cases or Examples

- Language Translation: Translating text from one language into another while keeping the meaning intact.

- Named Entity Recognition: Recognizing names and other entities in sentences.

- Time Series Data: Data recorded over time, where the order of the observations matters.

Let’s have a look at Keras’s built-in RNN layer (LSTM)

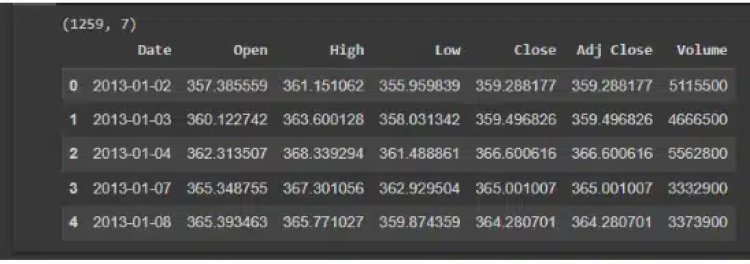

We will start by loading the dataset.

import pandas as pd

df = pd.read_csv('/content/drive/MyDrive/Colab Notebooks/rnn/trainset.csv')

print(df.shape)

df.head()

Output:

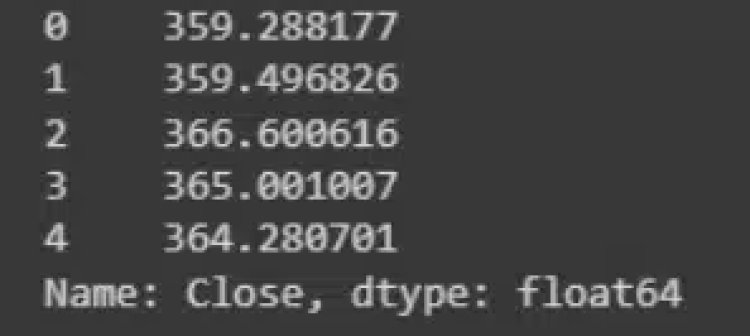

After that, we will take the 'Close' feature for prediction and normalize it.

df_close = df['Close']

df_close.head()

Output:

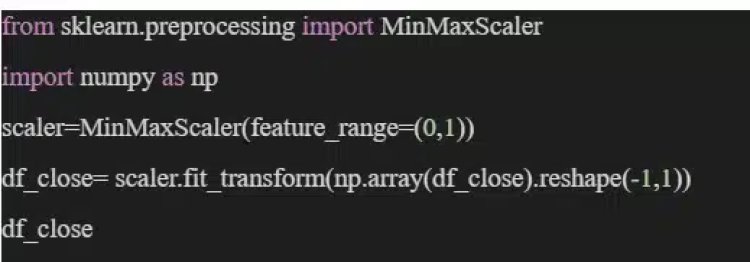

from sklearn.preprocessing import MinMaxScaler

import numpy as np

scaler=MinMaxScaler(feature_range=(0,1))

df_close= scaler.fit_transform(np.array(df_close).reshape(-1,1))

df_close

Output:


df_close.shape

Output:

(1259, 1)

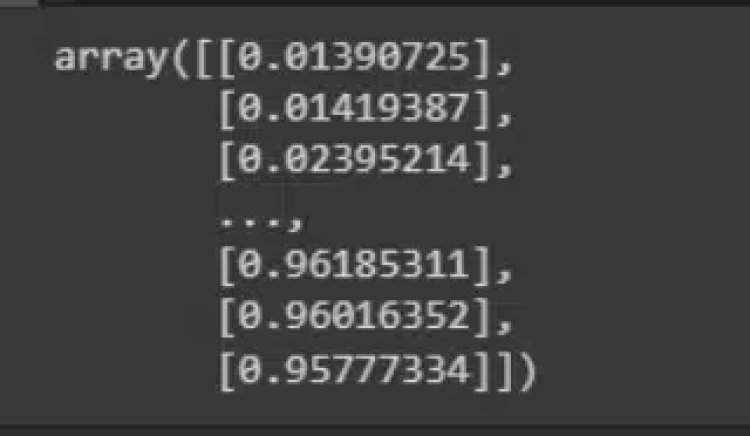
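To make the scaling step concrete, here is a small pure-Python sketch of what a min-max scaler with feature_range=(0, 1) computes. The function name min_max_scale and the sample prices are made up for illustration; MinMaxScaler itself works on NumPy arrays.

```python
def min_max_scale(values, low=0.0, high=1.0):
    """Linearly map values so the minimum becomes `low` and the maximum `high`."""
    smallest, largest = min(values), max(values)
    span = largest - smallest  # assumes the values are not all identical
    return [low + (v - smallest) / span * (high - low) for v in values]


prices = [10.0, 12.5, 15.0, 20.0]
print(min_max_scale(prices))  # [0.0, 0.25, 0.5, 1.0]
```

Scaling to a small fixed range keeps the inputs in the region where activation functions such as sigmoid and tanh are sensitive.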

Next, we will split the dataset into train and test sets. 75% of the data will be used for training and the rest for testing.

Training set shapes:

(843, 100)

(843,)

(None, None)

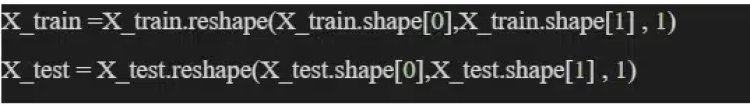

Test set shapes:

(213, 100)

(213,)

(None, None)
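The split and the windowing behind shapes like these can be sketched as follows. This is an illustration under assumptions, not the code that produced the output above: the helper names are made up, the window length of 100 is inferred from the second dimension of the shapes, and a dummy series stands in for the scaled closing prices. The exact window counts depend on how the loop bounds are chosen, so they can differ by one or two from the shapes shown above.

```python
def split_series(series, train_fraction=0.75):
    """Split a series chronologically: first part for training, rest for testing."""
    cut = int(len(series) * train_fraction)
    return series[:cut], series[cut:]


def make_windows(series, time_step=100):
    """Build (X, y) pairs: each X is `time_step` values, y is the value that follows."""
    X, y = [], []
    for i in range(len(series) - time_step):
        X.append(series[i:i + time_step])
        y.append(series[i + time_step])
    return X, y


# Dummy data with the same length as the dataset in this article.
series = [float(i) for i in range(1259)]
train, test = split_series(series)
X_train, y_train = make_windows(train)
X_test, y_test = make_windows(test)
print(len(X_train), len(X_train[0]), len(y_train))
print(len(X_test), len(X_test[0]), len(y_test))
```

The split is chronological rather than random because shuffling a time series would leak future values into the training set.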

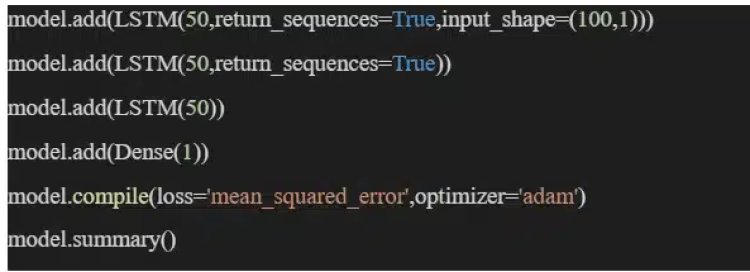

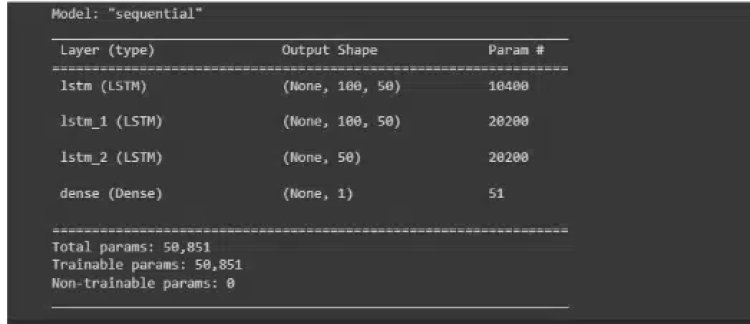

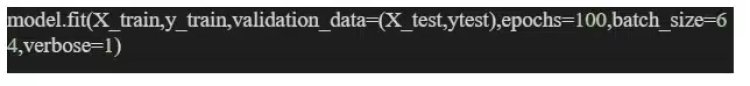

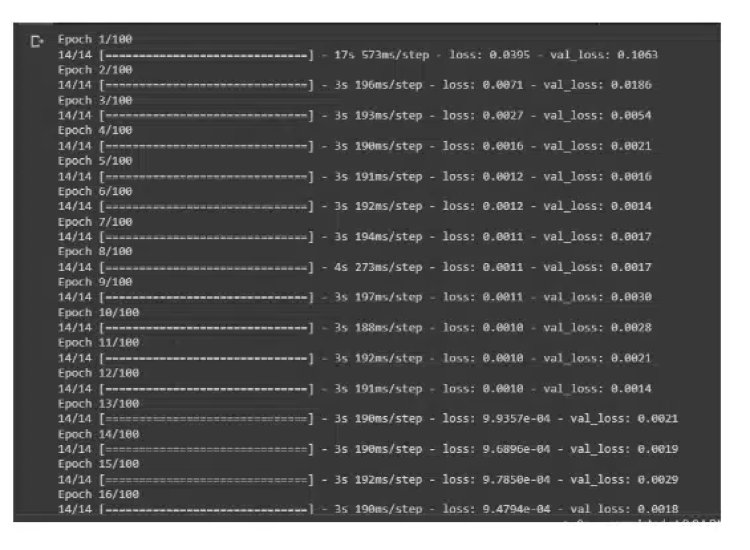

We are going to use Keras Sequential to build the model, and add LSTM layers to it.
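A model along these lines could look like the sketch below. The number of layers, the 50 units per layer, the loss, and the optimizer are assumptions picked for illustration, not necessarily the configuration used for the results in this article. The function name build_model is hypothetical as well.

```python
def build_model(time_step=100, units=50):
    """Build a small stacked-LSTM regressor for one-step-ahead prediction."""
    # Imported inside the function so the definition loads even without TensorFlow.
    from tensorflow import keras

    model = keras.Sequential([
        keras.Input(shape=(time_step, 1)),                # one feature per time step
        keras.layers.LSTM(units, return_sequences=True),  # pass the full sequence on
        keras.layers.LSTM(units),                         # keep only the last output
        keras.layers.Dense(1),                            # next scaled closing price
    ])
    model.compile(loss="mean_squared_error", optimizer="adam")
    return model
```

Before training, the windowed inputs need a trailing feature dimension, so an array of shape (samples, 100) has to be reshaped to (samples, 100, 1) before it is passed to model.fit.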


Pros and Cons of RNN

Pros:

- RNN works with sequential data.

- RNN can ‘memorize’ previous data points.

- RNN can work with inputs and outputs of variable length.

Cons:

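- Vanishing Gradient: As the error is propagated back through many time steps, the gradients shrink toward zero, so the weight updates become negligible.

- Slow Computation: Time steps must be processed one after another, which limits parallelism.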
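The vanishing gradient effect is easy to see numerically. In a scalar RNN with a tanh activation, the gradient passed back across one time step is multiplied by the feedback weight times the slope of tanh. The sketch below assumes the same pre-activation value at every step, which is a simplification, and the specific numbers are made up.

```python
import math


def gradient_factor(steps, w_fb=0.5, pre_activation=1.0):
    """Product of the per-step factor w_fb * tanh'(z) over `steps` time steps."""
    slope = 1.0 - math.tanh(pre_activation) ** 2  # derivative of tanh at z
    return (w_fb * slope) ** steps


for steps in (1, 5, 10, 20):
    print(steps, gradient_factor(steps))
```

Since the slope of tanh never exceeds 1, the per-step factor is below 1 whenever the feedback weight is below 1 in magnitude, and the product shrinks exponentially with the number of steps.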


Variations of RNN

There are several variations of the RNN architecture. The two most widely used are:

- Bidirectional Recurrent Neural Network (BRNN)

A BRNN processes the sequence in both directions, so the output at each step can draw on the following data points as well as the preceding ones, which can improve accuracy.

- Long Short Term Memory (LSTM)

LSTM is designed mainly to deal with the vanishing gradient problem.
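The bidirectional idea can be sketched with a scalar recurrent layer run once from left to right and once from right to left. For brevity both directions share the same made-up weights here; a real BRNN learns a separate set for each direction. The tanh activation and the function names are assumptions too.

```python
import math


def run(xs, w=0.5, w1=0.1):
    """Plain left-to-right recurrent pass returning one output per input."""
    outs, prev = [], 0.0
    for x in xs:
        prev = math.tanh(x * w + prev * w1)
        outs.append(prev)
    return outs


def bidirectional(xs):
    """Pair each step's forward output with the output of a right-to-left pass."""
    forward_outs = run(xs)
    backward_outs = run(xs[::-1])[::-1]  # reverse again to align with the input order
    return list(zip(forward_outs, backward_outs))


for pair in bidirectional([1.0, 2.0, 3.0]):
    print(pair)
```

The first element of each pair has seen only the inputs up to that step, while the second has seen only the inputs from that step onward, so together they cover the whole sequence.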

Conclusion

When the order of the data matters, Recurrent Neural Networks are a natural choice, and many business decisions are made with their help. They enable predictive modeling that forecasts outcomes connected to historical data, and they are employed in a variety of domains, including time series forecasting.

DataMites, a data science institute, provides specialized training in topics including machine learning, deep learning, Python, and the Internet of Things. The artificial intelligence courses at DataMites are accredited by the International Association for Business Analytics Certification (IABAC), a well-regarded body in the analytics field.
